This page describes multi-core Chronos, a worst-case execution time (WCET) analyzer for multi-core platforms. The tool is built on Chronos, a WCET analyzer for single-core platforms. The single-core version of Chronos is still actively maintained and distributed here.

Over the past decade, the following developers have contributed to Chronos (single-core and multi-core) by implementing some of its crucial components:

Multi-core Chronos extends its single-core counterpart with two crucial analyses: (1) a shared level-2 (L2) instruction cache and (2) a shared round-robin TDMA bus. In addition, multi-core Chronos applies new analysis techniques that capture the key interactions of the shared cache and the shared bus with other micro-architectural features (e.g. the pipeline and branch prediction). As a result, multi-core Chronos currently supports the following micro-architectural features:

Multi-core Chronos retains all the pipeline and branch-prediction functionality of the single-core version. Consequently, it can also handle the timing anomalies that arise on multi-cores from the interaction of the shared cache and shared bus with the pipeline and branch prediction.

Current / Future work:

The current distribution of multi-core Chronos does not model the timing effects of data caches. Integration of data cache analysis into the multi-core extension is under development and will be available in the next release.

Publication related to the multi-core Chronos tool:

The technical aspects of the tool are described in the following research report:

A Unified WCET Analysis Framework for Multi-core Platforms. Sudipta Chattopadhyay, Chong Lee Kee, Abhik Roychoudhury, Timon Kelter, Peter Marwedel and Heiko Falk. Technical Report, October 2011. [A shorter version is going to appear in the 18th IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS) 2012.]

Related publications:

1. Chronos: A Timing Analyzer for Embedded Software. Xianfeng Li, Yun Liang, Tulika Mitra and Abhik Roychoudhury. Science of Computer Programming, Volume 69, December 2007.

2. Modeling Out-of-Order Processors for Software Timing Analysis. Xianfeng Li, Abhik Roychoudhury and Tulika Mitra. IEEE Real-Time Systems Symposium (RTSS) 2004.

3. Timing Analysis of Concurrent Programs Running on Shared Cache Multi-cores. Yan Li, Vivy Suhendra, Yun Liang, Tulika Mitra and Abhik Roychoudhury. IEEE Real-Time Systems Symposium (RTSS) 2009.

4. Modeling Shared Cache and Bus in Multi-core Platforms for Timing Analysis. Sudipta Chattopadhyay, Abhik Roychoudhury and Tulika Mitra. 13th International Workshop on Software and Compilers for Embedded Systems (SCOPES) 2010.

5. Bus-Aware Multicore WCET Analysis through TDMA Offset Bounds. Timon Kelter, Heiko Falk, Peter Marwedel, Sudipta Chattopadhyay and Abhik Roychoudhury. 23rd Euromicro Conference on Real-Time Systems (ECRTS) 2011.

Contact Sudipta Chattopadhyay (sudiptaonline@gmail.com) to obtain the first prototype of the multi-core Chronos WCET analysis tool. We also provide a precompiled binary of a simulator (a multi-core extension of the SimpleScalar simulator) for validating the obtained WCET results.

A version is also available on Bitbucket: https://bitbucket.org/sudiptac/multi-core-chronos

The prototype of multi-core Chronos contains the following directories:

chronos-multi-core

This directory contains the WCET analyzer targeting SimpleScalar PISA. The precompiled 32-bit analyzer binary is chronos-multi-core/est/est. The analyzer was built on an Intel Pentium 4 machine running Ubuntu 8.10.

CMP_SIM

This directory contains the multi-core simulator, which supports a shared cache and a shared TDMA bus. The target architecture is SimpleScalar PISA (i.e. the same as the WCET analyzer). The precompiled 32-bit simulator binary is CMP_SIM/simplesim-3.0/sim-outorder. The simulator was also built on an Intel Pentium 4 machine running Ubuntu 8.10.

benchmarks

This directory contains precompiled binaries of sample benchmarks targeting SimpleScalar PISA. Each subdirectory, say <bm>, of the benchmarks directory contains the following relevant files:

<bm>.c : C source file of the benchmark

<bm> : Simplescalar PISA binary of <bm>

<bm>.cfg : Internal representation of the <bm> control flow graph

<bm>.loopbound : Loop bound specifier. Each line gives the bound of one loop in the source file, in the following format:

<procedure id> <loop id> <relative loop bound>

where <procedure id> is the id of the procedure in source-code order, and <loop id> is the id of the loop relative to its procedure, also in source-code order.

<bm>.dis : Disassembly of the <bm> binary.

<bm>.cons : User-specified ILP constraints that assist the WCET analysis.

<bm>.lp : ILP problem formulated to compute the WCET of <bm>. This file can be solved by lp_solve.

<bm>.ilp : Same as <bm>.lp, but for CPLEX. If you use CPLEX, the input file should be <bm>.ilp instead of <bm>.lp.
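As an illustration of the <bm>.loopbound format described above, the following Python sketch reads the file into a dictionary keyed by (procedure id, loop id). It assumes whitespace-separated integer triples, one per line, and skips blank lines; the function name parse_loopbounds and the sample values are hypothetical, not taken from the distribution.

```python
from typing import Dict, Tuple


def parse_loopbounds(text: str) -> Dict[Tuple[int, int], int]:
    """Map (procedure id, loop id) to the relative loop bound."""
    bounds: Dict[Tuple[int, int], int] = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if len(fields) != 3:
            raise ValueError(f"line {lineno}: expected 3 fields, got {len(fields)}")
        proc_id, loop_id, bound = (int(f) for f in fields)
        bounds[(proc_id, loop_id)] = bound
    return bounds


sample = "0 0 10\n0 1 5\n2 0 100\n"  # hypothetical bounds
print(parse_loopbounds(sample))  # {(0, 0): 10, (0, 1): 5, (2, 0): 100}
```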

lp_solve

This is a freely available LP solver, which we used for our experiments. The WCET is formulated as an ILP problem, and the solver computes the WCET value from it. "lp_solve/lp_solve" is the binary that invokes the solver. However, we strongly recommend the commercial solver CPLEX, as we found it much faster than lp_solve.
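The ILP that lp_solve or CPLEX maximizes is typically built with the implicit path enumeration technique (IPET). The generic form below is a sketch of that technique, not the exact set of variables and constraints Chronos writes into <bm>.lp. Here $c_i$ is the WCET of basic block $B_i$, $x_i$ its execution count, $x_e$ the execution count of control-flow edge $e$, and $n_L$ the bound of loop $L$ (as supplied through <bm>.loopbound); a virtual edge enters the start block and another leaves the exit block so that the flow constraints also hold there. User constraints from <bm>.cons would be added as extra linear constraints of the same kind.

$$
\begin{aligned}
\mathrm{WCET} = \max \; & \sum_{i} c_i \, x_i \\
\text{s.t.} \; & x_i = \sum_{e \in \mathrm{in}(B_i)} x_e = \sum_{e \in \mathrm{out}(B_i)} x_e && \text{for every basic block } B_i, \\
& x_{\mathrm{start}} = 1, \\
& x_{h_L} \le n_L \sum_{e \in \mathrm{entry}(L)} x_e && \text{for every loop } L \text{ with header } h_L, \\
& x_i,\, x_e \in \mathbb{Z}_{\ge 0}.
\end{aligned}
$$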

Obtain the package "chronos-mc-v1-exe.zip" (contact sudiptaonline@gmail.com to download it) and extract it into a directory, say $IDIR:

cmd> unzip chronos-mc-v1-exe.zip

Extraction produces the following four directories:

1) chronos-multi-core

2) CMP_SIM

3) benchmarks

4) lp_solve

Go to the "chronos-multi-core" directory and execute the following command to test the WCET analyzer:

cmd> ./est/est -config processor_config/exp_cache/processor_l2.opt task_config/exp_cache/tgraph_mrtc_est_2

If the above command executes successfully and reports a valid WCET value, the analyzer is installed correctly.

Execute the following command from CMP_SIM/simplesim-3.0 directory to test the simulator:

cmd> ./sim-outorder -sconfig sim-test/exp_cache/processor.opt sim-test/exp_cache/cnt.arg sim-test/exp_cache/jfdcint.arg

If the above command produces valid simulation results at the end, the multi-core simulator is installed correctly.

From the directory "chronos-multi-core", you can run the multi-core Chronos analyzer using the following command:

cmd> ./est/est -config <processor_config> <task_config>

where <processor_config> is the file providing the micro-architectural configuration and <task_config> is the file providing the mapping of tasks to cores. The formats of these files are described below:

processor_config:

processor_config describes the micro-architectural configuration. It follows the same format as SimpleScalar, except for a few additions that handle multi-core specific features. A typical processor configuration file looks as follows (descriptions follow the # sign):

-cache:il1 il1:16:32:2:l # 2-way associative, 1 KB L1 cache

-cache:dl1 none # perfect L1 data cache

-cache:il2 il2:32:32:4:l # 4-way associative, 4 KB L2 cache

-cache:il2lat 6 # L2 cache hit latency = 6 cycles

-mem:lat 30 2 # memory latency = 30 cycles

-bpred 2lev # 2 level branch predictor

-fetch:ifqsize 4 # 4-entry instruction fetch queue

-issue:inorder false # out-of-order processor

-decode:width 2 # 2-way superscalar

-issue:width 2 # 2-way superscalar

-commit:width 2 # 2-way superscalar

-ruu:size 8 # 8-entry reorder buffer

-il2:share 2 # 2 cores share an L2 cache

-core:ncores 2 # total number of cores = 2

-bus:bustype 0 # TDMA round robin shared bus

-bus:slotlength 50 # bus slot length assigned to each core = 50 cycles
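The cache arguments use SimpleScalar's <name>:<nsets>:<bsize>:<assoc>:<repl> format, so a cache holds nsets × bsize × assoc bytes. A small Python sketch (the helper names are illustrative, not part of either tool) checks the comments above: 16 × 32 × 2 = 1024 bytes for il1 and 32 × 32 × 4 = 4096 bytes for il2.

```python
from dataclasses import dataclass


@dataclass
class CacheSpec:
    name: str
    nsets: int
    block_size: int  # bytes per cache block
    assoc: int       # ways per set
    repl: str        # replacement policy letter, e.g. "l" for LRU

    @property
    def size_bytes(self) -> int:
        return self.nsets * self.block_size * self.assoc


def parse_cache_spec(spec: str) -> CacheSpec:
    """Parse '<name>:<nsets>:<bsize>:<assoc>:<repl>'; the value "none" is not handled."""
    fields = spec.split(":")
    if len(fields) != 5:
        raise ValueError(f"expected 5 ':'-separated fields in {spec!r}")
    name, nsets, bsize, assoc, repl = fields
    return CacheSpec(name, int(nsets), int(bsize), int(assoc), repl)


for spec in ("il1:16:32:2:l", "il2:32:32:4:l"):
    c = parse_cache_spec(spec)
    print(f"{c.name}: {c.assoc}-way, {c.size_bytes // 1024} KB")
```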

Note that, except for the last four parameters, all parameters are identical to those of SimpleScalar. A more detailed description of these parameters can be found by running sim-outorder without any input. The last four parameters are introduced for multi-core WCET analysis and are detailed as follows:

-core:ncores

Total number of cores in the processor, default value is 1

-il2:share

Total number of cores sharing an L2 cache. Default value is 1. Therefore, specifying only "-core:ncores 2" does not make the two cores share an L2 cache; you must additionally specify "-il2:share 2" (as in the example above). Some examples of using these two arguments:

-core:ncores 2 , -il2:share 1 : 2 cores with private L2 caches

-core:ncores 2 , -il2:share 2 : 2 cores with shared L2 cache

-core:ncores 4 , -il2:share 2 : 4 cores in two groups of two, each group sharing an L2 cache
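The grouping implied by these two arguments can be sketched in Python as follows. The assumption that consecutive core ids share an L2 cache (cores 0 and 1, then cores 2 and 3) is ours; this page does not state how the tool assigns cores to L2 caches. For simplicity, the sketch also rejects a core count that is not a multiple of -il2:share.

```python
from typing import List


def l2_groups(ncores: int, share: int) -> List[List[int]]:
    """Group core ids by the L2 cache they use.

    ASSUMPTION (not from the tool): consecutive core ids share an L2 cache.
    """
    if ncores < 1 or share < 1 or ncores % share != 0:
        raise ValueError("ncores must be a positive multiple of il2:share")
    return [list(range(g * share, (g + 1) * share)) for g in range(ncores // share)]


print(l2_groups(2, 1))  # [[0], [1]]        private L2 caches
print(l2_groups(2, 2))  # [[0, 1]]          one shared L2 cache
print(l2_groups(4, 2))  # [[0, 1], [2, 3]]  two shared L2 caches
```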

-bus:bustype

Default value is -1, which models a perfect shared bus that introduces zero bus delay for every bus transaction. A value of 0 (as in the example) models a round-robin TDMA bus in which a fixed-length bus slot is assigned to each core.

-bus:slotlength

Only relevant if -bus:bustype is set to 0. Represents the length (in cycles) of the bus slot assigned to each core in the round-robin bus schedule.
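To make the round-robin TDMA schedule concrete, here is a Python sketch of the delay a core's bus request experiences. It assumes the schedule starts at cycle 0, core c owns cycles [c·slot, (c+1)·slot) of every period of ncores·slot cycles, and a transaction must complete within the requesting core's own slot. These are common TDMA modelling assumptions, not a description of how Chronos implements its bus analysis. With the example settings (2 cores, 50-cycle slots) and a 30-cycle transaction, the worst-case wait is 79 cycles: a request that arrives one cycle too late to fit in its slot waits for the remaining 29 cycles of its own slot plus the other core's 50-cycle slot.

```python
def tdma_wait(t: int, core: int, ncores: int, slot: int, length: int) -> int:
    """Cycles a bus request issued at cycle t by `core` waits before it starts.

    Assumed model: the schedule starts at cycle 0, core c owns cycles
    [c*slot, (c+1)*slot) of every period of ncores*slot cycles, and a
    transaction of `length` cycles must finish inside its core's slot.
    """
    if not 0 <= core < ncores:
        raise ValueError("core id out of range")
    if not 0 < length <= slot:
        raise ValueError("a transaction must fit in one slot")
    period = ncores * slot
    offset = t % period          # position within the current TDMA period
    slot_start = core * slot
    if slot_start <= offset and offset + length <= slot_start + slot:
        return 0                 # fits in what is left of the core's own slot
    # Otherwise wait for the start of the core's next slot.
    return (slot_start - offset) % period


# Example settings: 2 cores, 50-cycle slots, 30-cycle memory transaction.
print(tdma_wait(0, 0, 2, 50, 30))   # 0: starts at once in core 0's slot
print(tdma_wait(25, 0, 2, 50, 30))  # 75: too late for this slot
print(tdma_wait(10, 1, 2, 50, 30))  # 40: core 1's slot begins at cycle 50
print(max(tdma_wait(t, 0, 2, 50, 30) for t in range(100)))  # 79: worst case
```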

Example processor configurations:

Numerous example processor configurations are provided in the directories chronos-multi-core/processor_config/exp_*. They can be used to run the example programs.

task_config:

task_config lists the task names and their mapping to cores. The analyzer front end reads this file as a task graph. The abstract syntax of a <task_config> file is as follows:

<Total number of tasks>

<Path to task 1>

<priority of task 1> <assigned core to task 1> <MSC id to task 1> <list of successor task ids of task 1 according to the partial order>

<Path to task 2>

<priority of task 2> <assigned core to task 2> <MSC id to task 2> <list of successor task ids of task 2 according to the partial order>

.........(for all the tasks)

Thus, after the first line, each pair of lines in the task_config file gives a task name and its parameters (priority, assigned core, etc.). Although the front end reads the file in a task graph format, scheduling of task graphs is currently not supported; the tool currently derives the WCETs of interfering tasks running on different cores. Therefore, for the current release of the tool, only the following parameters are important:

<Total number of tasks> : Total number of tasks running on different cores

<path to task i> : Relative or full path name pointing to the simplescalar PISA binary of the task.

<assigned core to task i> : The assigned core number to the task.

Following is an example of <task_config> file:

2

../benchmarks/cnt/cnt

0 0 0 0

../benchmarks/jfdcint/jfdcint

0 1 1 0

The above task_config file states that we are running "../benchmarks/cnt/cnt" on core 0 and "../benchmarks/jfdcint/jfdcint" on core 1 concurrently.

Example task configurations:

Numerous example task configurations are provided in the directories chronos-multi-core/task_config/exp_*. They can be used to run the example programs. However, you should verify that the path names of the benchmarks/tasks are given correctly in each task_config file.

(Note: the MSC id is the message sequence chart id. It is retained mainly for compatibility reasons and may be removed later. The same MSC id (say 0) can be used for every task. The list of successor task ids is a list of integers identifying other tasks.)
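A minimal Python reader for this format follows, given as a sketch rather than the front end's actual parser. It ignores blank lines, checks that the file has one header line plus two lines per task, and keeps the trailing integers of each parameter line as a raw successor list, since their exact interpretation is not spelled out here. The hypothetical helper missing_binaries supports the path check recommended above by resolving relative paths against the directory from which est is run.

```python
import os
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    path: str
    priority: int
    core: int
    msc_id: int
    successors: List[int] = field(default_factory=list)  # raw trailing integers


def parse_task_config(text: str) -> List[Task]:
    # Blank lines are ignored, so single- or double-spaced files both work.
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    ntasks = int(lines[0])
    if len(lines) != 1 + 2 * ntasks:
        raise ValueError(f"expected {1 + 2 * ntasks} non-blank lines, got {len(lines)}")
    tasks = []
    for i in range(ntasks):
        path = lines[1 + 2 * i]
        prio, core, msc, *succ = (int(x) for x in lines[2 + 2 * i].split())
        tasks.append(Task(path, prio, core, msc, succ))
    return tasks


def missing_binaries(tasks: List[Task], run_dir: str = ".") -> List[str]:
    # Relative task paths are resolved against the directory est is run from.
    return [t.path for t in tasks if not os.path.isfile(os.path.join(run_dir, t.path))]


example = """2
../benchmarks/cnt/cnt
0 0 0 0
../benchmarks/jfdcint/jfdcint
0 1 1 0
"""
for task in parse_task_config(example):
    print(f"core {task.core}: {task.path}")
```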

From the directory "CMP_SIM/simplesim-3.0", you can run the multi-core simulator using the following command:

cmd> ./sim-outorder -sconfig <processor_config> <arg_file 1> <arg_file 2> .....

The above command simulates the task given by <arg_file 1> on core 0, the task given by <arg_file 2> on core 1, and so on, with the shared configuration given by <processor_config> (explained in more detail below). Each <arg_file i> specifies the task running on a dedicated core and the configuration of that core.
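Because the position of each arg file on the command line determines its core, it can help to build the command programmatically. The Python sketch below (the function names are hypothetical) reproduces the test command shown earlier and can optionally run it with subprocess from the simulator directory, whose default path here assumes you start in $IDIR.

```python
import shlex
import subprocess
from typing import List


def sim_command(processor_config: str, arg_files: List[str]) -> List[str]:
    """Build the sim-outorder argv; arg_files[i] is simulated on core i."""
    if not arg_files:
        raise ValueError("need at least one arg file")
    return ["./sim-outorder", "-sconfig", processor_config, *arg_files]


def run_sim(cmd: List[str], sim_dir: str = "CMP_SIM/simplesim-3.0") -> subprocess.CompletedProcess:
    # Paths in the command and in the arg files are relative to the simulator directory.
    return subprocess.run(cmd, cwd=sim_dir, check=True)


cmd = sim_command("sim-test/exp_cache/processor.opt",
                  ["sim-test/exp_cache/cnt.arg",       # core 0
                   "sim-test/exp_cache/jfdcint.arg"])  # core 1
print(shlex.join(cmd))
```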

A typical arg file looks like this:

../../benchmarks/cnt/cnt > sim-test/exp_cache/cnt.out

-issue:inorder true

-issue:wrongpath false

-bpred 2lev

-ruu:size 8

-fetch:ifqsize 4

-cache:il1 il1:16:32:2:l

-cache:il2 il2:32:32:4:l

-cache:il2lat 6

-mem:lat 30 2

-decode:width 1

-issue:width 1

-commit:width 1

The first line of the above arg file gives the benchmark running on the core (i.e. ../../benchmarks/cnt/cnt). This path must point to the SimpleScalar PISA binary of the benchmark, so check it. The detailed output of the simulation is redirected to the file "sim-test/exp_cache/cnt.out"; the simulator also prints its results to standard output. To compare the result with the WCET analyzer, use the "effective" cycles, because the effective cycles exclude the time spent in libraries (as does the WCET analyzer).
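A sound WCET estimate must be at least as large as any observed execution time, so the effective cycle count reported by the simulator gives a quick sanity check. The Python sketch below computes the overestimation ratio; the function name and the cycle counts in the usage line are hypothetical, and the check is only meaningful when the simulator's arg files and the analyzer's processor_config describe the same micro-architecture.

```python
def overestimation(wcet: int, effective_cycles: int) -> float:
    """Ratio of the analyzer's WCET to the simulator's effective cycles.

    A ratio below 1.0 means the estimate is below an observed run, which
    indicates mismatched configurations or an analysis problem.
    """
    if effective_cycles <= 0:
        raise ValueError("effective_cycles must be positive")
    return wcet / effective_cycles


ratio = overestimation(12000, 10000)  # hypothetical cycle counts
print(f"ratio = {ratio:.2f}, consistent = {ratio >= 1.0}")
```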

The remaining lines of the arg file give the micro-architectural configuration of the core, in exactly the same format as the single-core SimpleScalar release. The <processor_config> file (given with the -sconfig option) provides the configuration shared among cores. Following is an example:

-core:ncores 2 # total number of cores

-bus:bustype 0 # TDMA round robin bus

-bus:slotlength 50 # bus slot length assigned to each core

-il2:share 2 # number of cores sharing an instruction L2 cache

-dl2:share 2 # number of cores sharing a data L2 cache

Note that the above parameters have the same interpretation as in the multi-core WCET analyzer.

Example simulations:

Numerous examples are provided in the directories CMP_SIM/simplesim-3.0/sim-test/exp_*. See the "run_one" script in these directories for sample simulation commands.

Please contact Sudipta Chattopadhyay (sudiptaonline@gmail.com) for any feedback, comments or difficulties in running the tools.

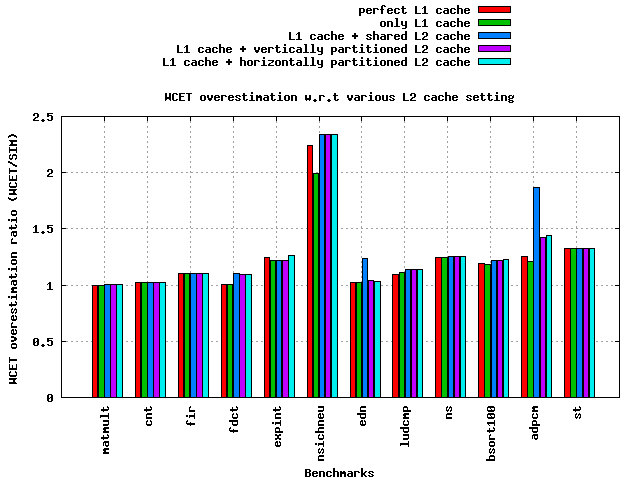

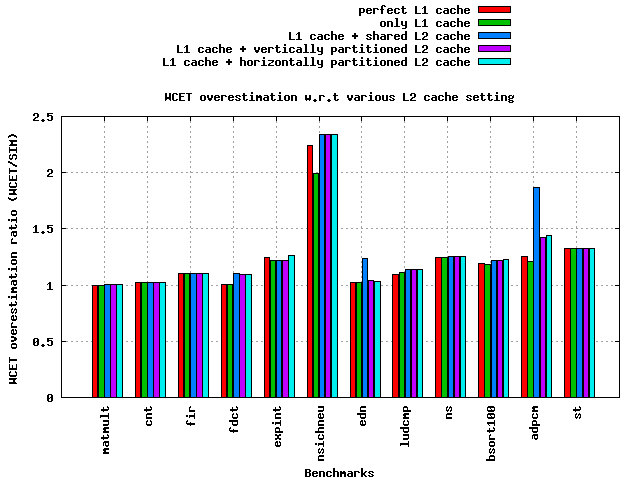

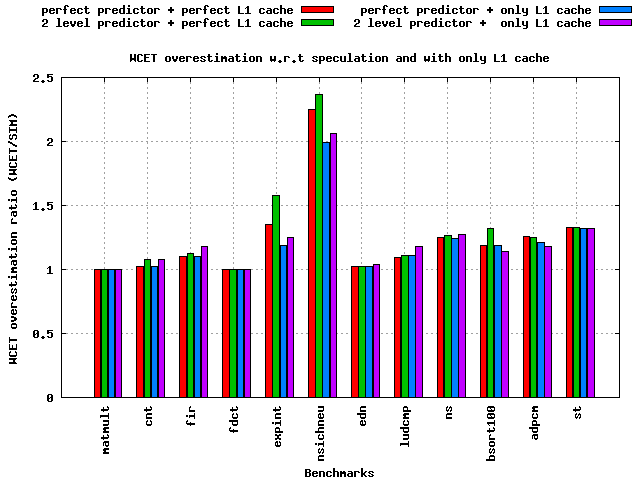

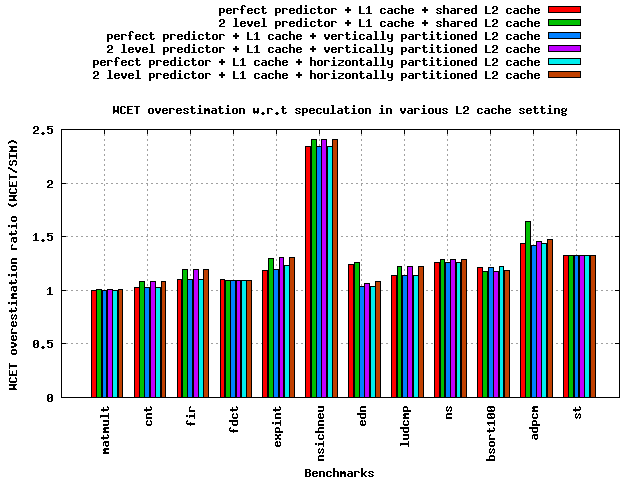

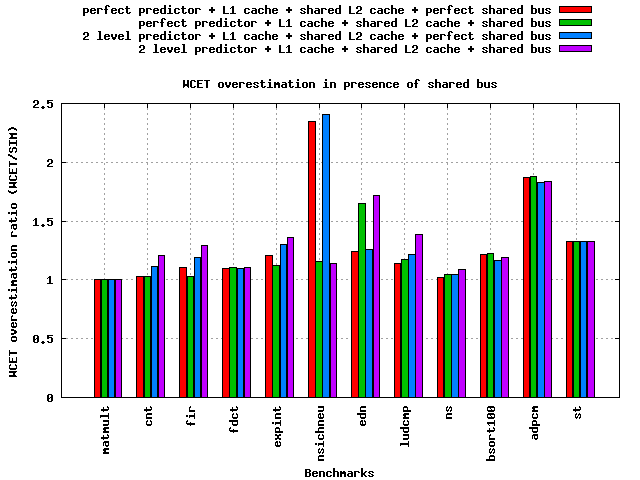

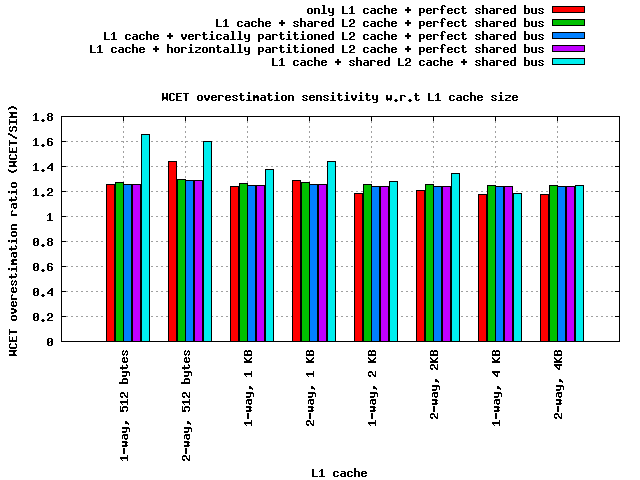

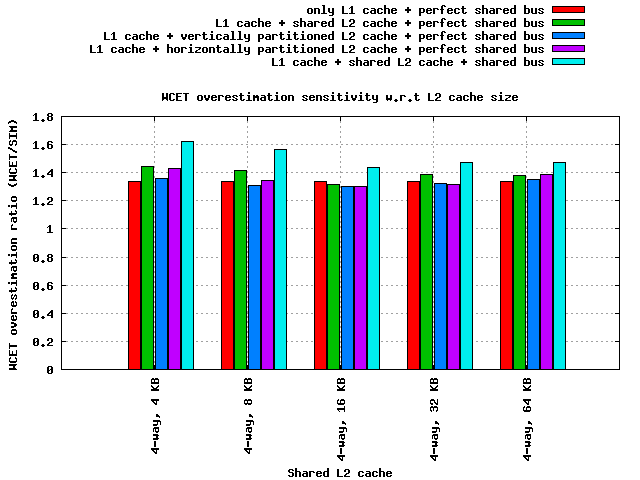

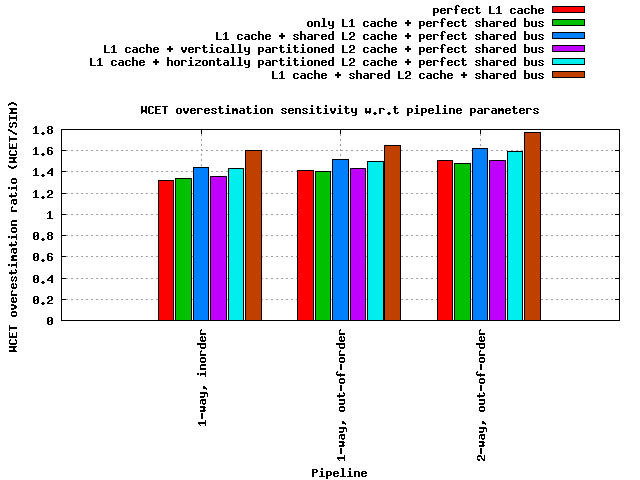

| Component | Default Setting | Perfect Setting |
|---|---|---|
| Cores | 2 | NA |
| Pipeline | 1-way, in-order, 4-entry instruction fetch queue, 8-entry reorder buffer | NA |
| L1 cache | 2-way associative, 1 KB, miss penalty = 6 cycles | All accesses are L1 hits |
| L2 cache | 4-way associative, 4 KB, miss penalty = 30 cycles | NA |
| Shared bus | Slot length = 50 cycles | Zero bus delay |
| Branch predictor | 2-level predictor, L1 size = 1, L2 size = 4, history size = 2 | Branch prediction is always correct |

| Core 1 | Core 2 |
|---|---|
| matmult | jfdctint |
| cnt | jfdctint |
| fir | jfdctint |
| fdct | jfdctint |
| expint | jfdctint |
| nsichneu | jfdctint |
| edn | statemate |
| ludcmp | statemate |
| ns | statemate |
| bsort100 | statemate |
| adpcm | statemate |
| st | statemate |
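The core pairs above can be turned into task_config files mechanically. This Python sketch writes the two-task format shown earlier, mirroring the parameter lines of the example file (priority 0, core i, MSC id i, successor list 0) and its ../benchmarks/<bm>/<bm> layout; mapping the table's Core 1 and Core 2 to cores 0 and 1 is an assumption. Note that the table spells jfdctint while the example paths use jfdcint, so check the benchmark directory names in your distribution before use.

```python
from typing import List, Tuple

# Core pairs from the table above: (benchmark on Core 1, benchmark on Core 2).
PAIRS: List[Tuple[str, str]] = [
    ("matmult", "jfdctint"), ("cnt", "jfdctint"), ("fir", "jfdctint"),
    ("fdct", "jfdctint"), ("expint", "jfdctint"), ("nsichneu", "jfdctint"),
    ("edn", "statemate"), ("ludcmp", "statemate"), ("ns", "statemate"),
    ("bsort100", "statemate"), ("adpcm", "statemate"), ("st", "statemate"),
]


def task_config(first: str, second: str, bench_root: str = "../benchmarks") -> str:
    """Two-task task_config text: `first` on core 0, `second` on core 1.

    Parameter lines mirror the example file: priority 0, core i, MSC id i,
    successor list "0". The <bench_root>/<bm>/<bm> layout is assumed.
    """
    lines = ["2"]
    for core, bm in enumerate((first, second)):
        lines.append(f"{bench_root}/{bm}/{bm}")
        lines.append(f"0 {core} {core} 0")
    return "\n".join(lines) + "\n"


print(task_config(*PAIRS[1]), end="")
```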

To reproduce the experimental results, please follow this README.