CGI Seminar Series at I2R

Seminar 25

Title: Design of an Intelligible Mobile Context-Aware Application

Speaker: Brian Lim, Human-Computer Interaction Institute, CMU

Chaired by: Jamie Ng Suat Ling

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 15:00-15:45, January 5, Thursday, 2012

Abstract

Context-aware

applications are increasingly complex and autonomous, and research has

indicated that explanations can help users better understand and ultimately

trust their autonomous behavior. However, it is still

unclear how to effectively present and provide these explanations. This work

builds on previous work to make context-aware applications intelligible by

supporting a suite of explanations using eight question types (e.g., Why, Why

Not, What If). We present a formative study on design and usability issues for

making an intelligible real-world, mobile context-aware application, focusing

on the use of intelligibility for the mobile contexts of availability, place,

motion, and sound activity. We discuss design strategies that we considered,

findings of explanation use, and design recommendations to make intelligibility

more usable.

Bio-data

Brian Lim is a 5th

year Ph.D. student at the Human-Computer Interaction Institute at Carnegie

Mellon University. He has been working with Prof. Anind

Dey to investigate how to make context-aware

applications intelligible, so that they can explain how they function, and

users would find their behavior more believable, and

find them more usable. He received his B.S. from Cornell University under the

A*STAR National Science Scholarship (BS) and is currently funded by the A*STAR

National Science Scholarship (PhD). Previously he was a research officer with I2R

from 2006 to 2007.

Seminar 24

Title: Evaluating Gesture-based Games with Older Adults on a Large Screen Display

Speaker: Dr Mark David Rice

Chaired by: Jamie Ng Suat Ling

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 10:00-10:30, July 6, Wednesday, 2011

Abstract

Focusing on

designing games for healthy older adults, we present a study exploring the usability

and acceptability of a set of three gesture-based games. Designed for a large

projection screen display, these games employ vision-based techniques that center on physical embodied interaction using a graphical

silhouette. Infrared detection, accompanied by back-projection, is used to

reduce the effects of occluded body movements. User evaluations with 36 older

adults were analyzed using a combination of pre- and post-game questionnaires,

direct observations and semi-structured group interviews. The results

demonstrate that while all the games were usable, they varied in their physical

and social engagement, perceived ease of use and perceived usefulness. In

particular, items associated with physical wellbeing were rated highly.

Bio-data

Mark is a member of

the Human Factors Engineering Programme in the CGI department. Previously, he

was employed at the University of Dundee (UK) where he

graduated with a Ph.D. in Computer Science in 2009. In 2002 he joined the

Interactive Technologies Research Group at the University of

Brighton (UK), and in 2005 completed a research placement at the Advanced

Telecommunication Research Institute (Japan). During his research career, Mark

has worked on projects funded by the Alzheimer’s Association (US), Intel

Corporation, and the European Commission (FP7). He has co-organized two

international workshops on inclusive design at EuroITV

2008 and Interact 2009, and has been a board member of Scotland’s largest

disability organization, Capability Scotland.

Seminar 23

Title: Intrinsic Images Decomposition Using a Local and Global Sparse Representation of Reflectance

Speaker: Dr Shen Li

Chaired by: Dr Huang Zhiyong

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 10:00-11:00, May 5, Thursday, 2011

Abstract

Intrinsic image

decomposition is an important problem that targets the recovery of shading and

reflectance components from a single image. While this is an ill-posed problem

on its own, we propose a novel approach for intrinsic image decomposition using

a reflectance sparsity prior that we have developed.

Our method is based on a simple observation: neighboring

pixels usually have the same reflectance if their chromaticities

are the same or very similar.

We formalize this sparsity constraint on local reflectance, and derive a

sparse representation of reflectance components using data-driven

edge-avoiding-wavelets. We show that the reflectance component of natural

images is sparse in this representation. We also propose and formulate a novel

global reflectance sparsity constraint. Using the sparsity prior and global constraints, we formulate an l1-regularized least squares minimization problem for intrinsic

image decomposition that can be solved efficiently. Our algorithm can

successfully extract intrinsic images from a single image, without using other

reflection or color models or any user interaction.

The results on challenging scenes demonstrate the power of the proposed

technique.

Bio-data

Shen

Li received her PhD (2006) and M.Eng (2002), both in

computer science, from Osaka University, Japan. Before joining I2R, she worked

as a researcher at Microsoft Research Asia and in the ECE Department at the National University

of Singapore. Her main research interests are in computer graphics &

computer vision, especially in reflectance and illumination modeling,

image-based rendering/modeling and low-level vision.

Seminar 22

Title: Projected Interfaces and Virtual Characters

Speaker: Dr Jochen Walter Ehnes

Chaired by: Dr Corey Manson Manders

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 14:00-15:00, April 7, Thursday, 2011

Abstract

During

this seminar I will provide an overview of my previous work with computer

graphics, and 3D user interfaces at Fraunhofer IGD,

Tokyo University, as well as the University of Edinburgh. In particular I will

describe what motivated me to start working with projected displays.

Furthermore,

I would like to start a discussion about how my previous work with controllable

projectors could be turned into a project here at A*STAR. I certainly see a lot

of possibilities for collaborations, so I am looking forward to an interesting

discussion.

Bio-data

Before

joining I2R, Jochen worked for

four years at the School of Informatics at the University of Edinburgh. While

he was a member of the Centre for Speech Technology Research (CSTR), he did

work on the graphical side of multimedia interfaces, complementing speech

technology developed by his colleagues. He also collaborated with people at the

Edinburgh College of Art.

Originally from

Germany, he obtained his Diplom (German equivalent to

the masters) in Informatics at the Technical University of Darmstadt in 2000.

During his studies he already worked part time at the Fraunhofer

Institute of Computer Graphics (Fraunhofer IGD). He

did his masters project at CAMTech, a shared lab

between Fraunhofer IGD and NTU at NTU. After that he

worked full time at IGD for two and a half years before he went to Tokyo to

work on his PhD, which he obtained in 2006.

Seminar 21

Title: Real-Time Data Driven Deformation Using Kernel Canonical Correlation Analysis

Speaker: Dr Kim Byung Uck

Chaired by: Dr Ishtiaq Rasool Khan

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 14:00-15:00, April 1, Friday, 2011

Abstract

Achieving intuitive

control of animated surface deformation while observing a specific style is an

important but challenging task in computer graphics.

Solutions to this task can find many applications in data-driven skin

animation, computer puppetry, and computer games. In this paper, we present an

intuitive and powerful animation interface to simultaneously control the

deformation of a large number of local regions on a deformable surface with a

minimal number of control points. Our method learns suitable

deformation subspaces from training examples, and generates new

deformations on the fly according to the movements of the control points. Our

contributions include a novel deformation regression method based on kernel

Canonical Correlation Analysis (CCA) and a Poisson-based translation solving

technique for easy and fast deformation control based on examples. Our run-time

algorithm can be implemented on GPUs and can achieve a few hundred frames per

second even for large datasets with hundreds of training examples.

Bio-data

Byung-Uck

Kim is a Senior Research Fellow in the Department of Computer Graphics &

Interface (CGI) at I2R. He is a voting member of ACM SIGGRAPH Singapore Chapter

and serves as an ACM SIGGRAPH reviewer. He received his B.S. (1996), M.S. (1995), and Ph.D. (2004) from Yonsei

University, Seoul, Korea. He worked for Samsung Electronics Co. Ltd as a Senior

Engineer until 2007 and was a Postdoctoral Research Associate in the Department

of Computer Science at the University of Illinois, Urbana-Champaign until 2010.

Seminar 20

Title: Smoothly Varying Affine Stitching

Speaker: Daniel Lin Wenyen

Chaired by: Dr Ng Tian Tsong

Venue: Franklin, 11 South, Connexis,

Fusionopolis

Time: 14:00-15:00, March 24, Thursday, 2011

Abstract

We design a new image stitching algorithm.

Traditional image stitching using parametric transforms, such as homography, only produces perceptually correct composites for

planar scenes or parallax-free camera motion between source frames. This limits

mosaicing to source images taken from the same

physical location. In this paper, we introduce a smoothly varying affine

stitching field which is flexible enough to handle parallax while retaining the

good extrapolation and occlusion handling properties of parametric transforms.

Our algorithm, which jointly estimates both the stitching field and correspondence, permits the stitching of general motion

source images, provided the scenes do not contain abrupt protrusions.

Bio-data

Daniel Lin

undertook his undergraduate and PhD studies at the National University of

Singapore. His research interest is structure from motion and large

displacement matching.

Seminar 19

Title: Toolkit to Support Intelligibility in Context-Aware Applications

Speaker: Brian Lim, Human-Computer Interaction Institute, CMU

Chaired by: Tan Yiling Odelia

Venue: Transform 1 & 2 @ Level 13, North, Connexis, Fusionopolis

Time: 14:00-15:00, December 21, Tuesday, 2010

Abstract

Context-aware applications should be

intelligible so users can better understand how they work and improve their

trust in them. However, providing intelligibility is non-trivial and requires

the developer to understand how to generate explanations from application

decision models. Furthermore, users need different types of explanations and

this complicates the implementation of intelligibility. We have developed the

Intelligibility Toolkit that makes it easy for application developers to obtain

eight types of explanations from the most popular decision models of

context-aware applications. We describe its extensible architecture, and the

explanation generation algorithms we developed. We validate the usefulness of

the toolkit with three canonical applications that use the toolkit to generate

explanations for end-users.

Bio-data

Brian Lim is a 3rd year Ph.D. student at

the Human-Computer Interaction Institute in

Seminar 18

Title: User Experience Design in an MNC

Speaker: Dr Steven John Kerr

Chaired by: Jamie Ng Suat Ling

Venue:

Time: 16:00-17:00, November 8, Monday, 2010

Abstract

User experience design is a term some can

find confusing, especially when other terms such as user-centered design,

interaction design, information architecture, human computer interaction, human

factors engineering, usability, and user interface design are commonly used.

There are many overlaps, but these terms essentially refer to a toolbox of

processes and techniques, which can be applied depending on the situation and

available resources. They address the same goal of making things simpler

and enjoyable for users by making them the focus rather than the technology.

User Experience (UX for short) is associated heavily with the commercial sector

and is used as an encompassing term for the profession and professionals who

attempt to improve all aspects of the end user's interaction with a product or

service. This presentation will discuss how UX is incorporated in a design

engineering environment for a large MNC and the different processes used in the

design and evaluation of customer products.

Bio-data

Before joining I2R, Steven

spent 3 years as the UX Lead for Software in Dell’s Experience Design Group in

Seminar 17

Title: Scenario-based Design: Methods & Applications in Domestic Robots

Speaker: Dr Xu Qianli

Chaired by: Tan Yiling Odelia

Venue:

Time: 14:00-15:00, October 14, Thursday, 2010

Abstract

Requirement development has been

considered an important issue in product and systems design. It is of

particular interest for emerging products whose market acceptance is as yet

untested. It becomes essential to elicit user needs for these products from a design

perspective. However, existing requirement analysis methods such as card

sorting, focus groups, and ethnographic studies have not been able to include the

usage context in the design loop, thus jeopardizing the quality of the

developed requirements. In this regard, this research proposes a scenario-based

design approach to develop customer requirements. In line with the concept of

product ecosystems, scenarios are designed to facilitate the elicitation and

analysis of customer requirements. The scenario-based design approach includes

three major steps, namely, (1) scenario construction, (2) scenario deployment,

and (3) scenario evaluation. The proposed method is anticipated to tackle the

requirement development issues in the on-going project of robotic product

design. It is expected that the scenario-based approach will be useful to

include the customers' experience in the requirement development, and enhance

the product development cycle so as to attain innovative solutions.

Bio-data

Before joining the CGI Department, I2R,

Qianli was a research fellow in the School of

Mechanical & Aerospace Engineering,

Seminar 16

Title: Designing for diversity - Exploring the challenges and opportunities of working with older adults

Speaker: Dr Mark David Rice

Chaired by: Jamie Ng Suat Ling

Venue:

Time: 14:30-15:30, September 3, Friday, 2010

Abstract

It is well documented that older people

provide much greater challenges to user-centered design than more traditional

mainstream groups. These can be related

to a wealth of different reasons – e.g. sensory loss, culture, language and

differing attitudes and experience to technology. Similarly, there is a great

necessity to understand how technology can support a rapidly ageing society,

requiring user-centered innovation by both private and public sectors to

develop new products and services for older people. Nevertheless, recent

research indicates that the ageing market lacks, and increasingly needs, a human

dimension and a focus on individuals and relationships rather than

technological systems per se. During this talk I will give an overview of the

most significant aspects of my work in human-computer interaction with older

people. Amongst the various examples given, I will present some results of a

three-year European study utilizing the digital television platform to deliver

a ‘brain training’ program for older adults. This includes presenting some of

the practical issues of working with an extensive panel of volunteers (300+),

the user-centered design methods applied and how they fitted within the

technical development work undertaken, as well as highlighting the business and

exploitation plan for the potential target market.

Bio-data

Mark joined the Human Factors Engineering

group in CGI this August. Previous to moving to

Seminar 15

Title: Product Ecosystem Design: Affective-Cognitive Modeling and Decision-making

Speaker: Dr Xu Qianli

Chaired by: Jamie Ng Suat Ling

Venue:

Time: 10:30-11:30, June 17, Thursday, 2010

Abstract

Design innovation that accommodates

customers’ affective needs along with their cognitive processes is of primary

importance for a product ecosystem to convey more added-value than individual

products. Product ecosystem design involves sophisticated interactions among

human users, multiple products, and the ambience, and thus necessitates

systematic modelling of ambiguous affective states in

conjunction with the cognitive process. This research formulates the

architecture of product ecosystems with a particular focus on activity-based

user experience. An ambient intelligence environment is proposed to elicit user

needs, followed by fuzzy association rule mining techniques for knowledge

discovery. A modular colored fuzzy Petri net (MCFPN) model is developed to

capture the causal relations embedded in users’ affective perception and

cognitive processes. The method is illustrated through the design of a product

ecosystem of a subway station. Initial findings and simulation results indicate

that the product ecosystem perspective is an innovative step toward

affective-cognitive engineering and the MCFPN formalism excels in incorporating

user experience into the product ecosystem design process.

Bio-data

Before joining the CGI Department, I2R,

Qianli was a research fellow in the School of

Mechanical & Aerospace Engineering,

Seminar 14

Title: Category Level Object Detection and Image Classification using Contour and Region Based Features

Speaker: Alex Yong-Sang Chia

Chaired by: Huang Zhiyong

Venue:

Time: 10:30-11:30, May 13, Thursday, 2010

Abstract

Automatic recognition of object categories

from complex real world images is an exciting problem in computer vision. While

humans can perform recognition tasks effortlessly and proficiently, replicating

this recognition ability of humans in machines is still an incredibly difficult

problem. On the other hand, successful automatic recognition technology will

have significant and mostly positive impacts in a plethora of important

application domains like image retrieval, visual surveillance and automotive

safety systems. In this talk, we present our novel contributions towards two

main goals of recognition: image classification and category level object

detection. Image classification seeks to separate images which contain an

object category from other images, where the focus is on identifying the

presence or absence of an object category in an image. Object detection

concerns the identification and localization of object instances of a category

across scale and space in an image, where the goal is to localize all instances

of that category from an image. We will present our method which exploits

contour-only features for recognition. This method has achieved robust

detection accuracy, where it obtained the best contour-based detection results

published so far for the challenging Weizmann horse dataset. Additionally, we

will also present a flexible recognition framework which fuses contour with

region-based features. We show that by exploiting these complementary feature

types, better recognition results can be obtained.

Bio-data

Alex Yong-Sang Chia

received the Bachelor of Engineering degree in computer engineering with

first-class honours from Nanyang

Technological University (NTU),

He was awarded the Tan Sri Dr. Tan Chin Tuan

Scholarship during his undergraduate studies. In 2006, he received the A*STAR

Graduate Scholarship to pursue the Ph.D. degree in the field of computer

vision. He was also awarded the Tan Kah Kee Young Inventors' Merit Award in the Open Category in

2009 for his contribution towards object detection algorithms.

Alex Chia's research

interests are focused on computer vision for object recognition and abnormality

detection, feature matching under large viewpoint changes, and machine learning

techniques.

Seminar 13

Title: Supporting Intelligibility in Context-Aware

Applications

Speaker: Brian Lim, Human-Computer Interaction Institute, CMU

Chaired by: Jamie Ng Suat Ling

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 10:30-11:30, December 23, Wednesday, 2009

Abstract

Context-aware applications employ implicit

inputs, and make decisions based on complex rules and machine learning models

that are rarely clear to users. Such lack of system intelligibility can lead to

the loss of user trust, satisfaction and acceptance of these systems.

Fortunately, automatically providing explanations about a system’s decision

process can help mitigate this problem. However, users may not be interested in

all the information and explanations that the applications can produce. Brian

will be presenting his work on what types of explanations users are interested

in when using context-aware applications. This work was conducted as an online

user study with over 800 participants. He will discuss why users demand certain

types of information, and provide design implications on how to provide

different intelligibility types to make context-aware applications intelligible

and acceptable to users.

Bio-data

Brian Lim is a 3rd year Ph.D. student at

the Human-Computer Interaction Institute in

Website: www.cs.cmu.edu/~byl

Seminar 12

Title: Automatic and Real-time 3D face reconstructions

Speaker: Dr Nguyen Hong Thai, CGI, I2R

Chaired by: Chin Ching Ling

Venue: Potential 2 at 13 North, Connexis,

Fusionopolis

Time: 16:30-17:00, December 9, Wednesday, 2009

Abstract

Automatic generation of realistic 3D human

faces has been a challenging task in computer vision and computer graphics for

many years, with numerous research papers published in this field attempting to

address this problem. With the growing number of social networks and online games,

the need for a fast, good and simple system to reconstruct 3D faces is rising. This paper describes a system for automatic

and real-time 3D photo-realistic face synthesis from a single frontal face

image. This system employs a generic 3D

head model approach for 3D face synthesis which can generate the 3D mapped face

in real-time.

Bio-data

Hong Thai received his Ph.D. in Electrical

Engineering and Information Technology from

Seminar 11

Title: Are Working Adults Ready to Accept e-Health at Home?

Speaker: Tan Yiling, Odelia, CGI, I2R

Chaired by: Chin Ching Ling

Venue: Potential 2 at 13 North, Connexis,

Fusionopolis

Time: 16:00-16:30, December 9, Wednesday, 2009

Abstract

Many researchers and designers from the

private and government sectors have been paying much attention to the issues,

policies, challenges and opportunities of bringing healthcare services to

patients’ homes. Some believe that this would not only ease the workload of the

healthcare practitioners but also reduce the cost of healthcare treatments.

Much of this work concerns older adults, but little focuses on current working adults,

who may be the users of the future e-Health systems and policies. Compared to

older adults, the younger working adults are more tech-savvy and hence more

likely to adopt and afford technology-mediated health services. This paper

discusses the viewpoints of working adults concerning the adoption of e-Health

and addresses issues concerning innovation and acceptance in their

future homes.

Bio-data

Odelia received her Master of

Science in Technopreneurship and Innovation from NTU

in 2008. She joined the Institute for Infocomm Research,

A*STAR, as a Research Officer, and is currently working in the Home2015 (Phase

1) and P3DES Programme. Her research interests

include human factors in smart homes, healthcare and entertainment and human

computer interaction.

Seminar 10

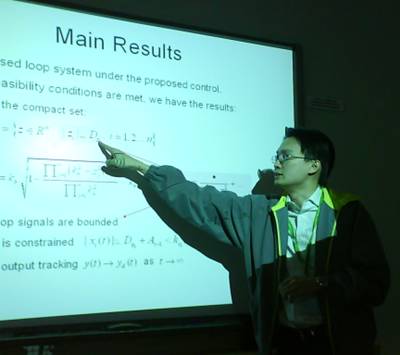

Title: Control of Nonlinear Systems with

Speaker: Dr Tee Keng Peng, CGI, I2R

Chaired by: Dr Huang Zhiyong

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 16:00-16:30, November 24, Tuesday, 2009

Abstract

This paper presents a control for

state-constrained nonlinear systems in strict feedback form to achieve output

tracking. To prevent states from violating the constraints, we employ a Barrier

Lyapunov Function, which grows to infinity whenever

its arguments approach certain limits. By ensuring boundedness

of the Barrier Lyapunov Function in the closed loop,

we guarantee that the limits are not transgressed. We show that asymptotic

output tracking is achieved without violation of state constraints, and that

all closed loop signals are bounded, provided that some feasibility conditions

on the initial states and control parameters are satisfied. Sufficient

conditions to ensure feasibility are provided, and they can be checked offline

by solving a static constrained optimization problem. The performance of the

proposed control is illustrated through a simulation example.

Bio-data

Keng Peng received his PhD in

Control Engineering from NUS in 2008. He joined the Institute for Infocomm Research, A*STAR, as a Research Engineer, and is

currently working in the Inter-RI Robotics Programme.

His research interests include adaptive nonlinear control, robotics, and human

motor learning.

Seminar 9

Title: Development of a computational cognitive architecture for intelligent virtual character

Speaker: Dr Liew Pak-San, CGI, I2R

Chaired by: Dr Huang Zhiyong

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 14:30-15:00, October 12, Monday, 2009

Abstract

The development of a computational cognitive

architecture for the simulation of intelligent virtual characters is described

in this paper. By specializing and adapting from an existing structure for a

situated design agent, we propose three process models—reflexive, reactive and

reflective—which derive behavioural models that

underlie intelligent behaviours for these characters.

Various combinations of these process models allow intelligent virtual

characters to reason in a reflexive, reactive and/or reflective manner

according to the retrieval, modification and reconstruction of their memory

contents. This paper offers an infrastructure for combining simple reasoning

models, found in crowd simulations, and highly deliberative processing models

or reasoning, found in ‘heavy’ agents with high-level cognitive abilities.

Intelligent virtual characters simulated via this adapted architecture can

exhibit system level intelligence across a broad range of relevant tasks.

Bio-data

Pak-San received his PhD in Design

Computation from the Key Centre of Design Computing and Cognition,

Seminar 8

Title: Tennis Space: An interactive and immersive

environment for tennis simulation

Speaker: Dr

Chaired by: Dr Huang Zhiyong

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 14:00-14:30, October 12, Monday, 2009

Abstract

This talk reports the design and

implementation of an interactive and immersive environment (IIE) for tennis

simulation. The presented design layout, named Tennis Space, provides the

necessary immersive experience without overly restricting the player. To

address the instability problem of real-time tracking of fast moving objects, a

hybrid tracking solution integrating optical tracking and ultrasound-inertial

tracking technologies is proposed. An L-shaped IIE has been implemented for

tennis simulation and has received positive feedback from users.

Bio-data

Shuhong is currently working on the Interactive Sports Game

Engine (ISGE) project. Before joining the department of computer graphics &

interface, I2R, he worked at

Seminar 7

Title: Real time tracking technologies for Augmented Reality

Speaker:

Chaired by: Dr Huang Zhiyong

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 14:00-14:30, September 18, Friday, 2009

Abstract

Augmented Reality generally refers to the

splicing of the virtual world onto the real one, so that the user perceives

both at the same time. The focus of this talk is on attaching 3D graphics onto

real moving objects. This requires accurate tracking of the position and

orientation of the objects with respect to the viewer or camera. Tracking

systems based on computer vision, inertial sensing and differential GPS will be

presented. In particular, gyroscope triad calibration without

external equipment, a novel differential GPS technique, and accurate computer

vision tracking of planar objects without markers, which is robust to

illumination interferences and partial occlusions, are highlighted.

Bio-data

Louis Fong received his B.Comp from the

Seminar 6

Title: Computer Photometric Stereo

and Weather Estimation with Internet Images

Speaker: Dr

Chaired by: Dr Huang Zhiyong

Venue: Turing at 13 South, Connexis,

Fusionopolis

Time: 10:00-10:30, August 25, Tuesday, 2009

Abstract

With the growth and maturity of search

engines, there is a trend toward using internet data to tackle

difficult classical vision problems and enhance the performance of current

vision techniques. This talk will present a technique which extends photometric

stereo to make it work with highly diverse internet images. For popular

tourism sites, thousands of images can be obtained from internet search

engines. With these images, our method computes the global illumination for

each image as well as the scene geometry information. The weather conditions of

the photos can then be estimated from the illumination information by using our

lighting model of the sky.

Bio-data

Seminar 5

Title: Computer vision-based interaction and registration

for augmented reality systems

Speaker: Dr Yuan Miaolong, CGI, I2R

Chaired by: Dr Huang Zhiyong

Venue: Three Star Theatrette

Time: 11:00-11:30, May 30, Friday, 2008

Abstract

Augmented reality (AR) is a novel form of

human-machine interaction that overlays virtual computer-generated information

on a real world environment. It has promising applications in many

fields, such as military training, surgery, entertainment, maintenance and

manufacturing operations. Registration and interaction are two key issues which

currently limit AR applications in many areas. In this talk, I will introduce

some vision-based interaction tools and registration methods which have been

integrated into our augmented reality system. Some videos will be shown to

demonstrate the related methods.

Bio-data

Yuan Miaolong

received his BS degree in Mathematics from  interactive tools.

Seminar 4

Title: A model of human motor adaptation to stable and unstable interactions

Speaker: Dr Tee Keng Peng, CGI, I2R

Chaired by: Dr Huang Zhiyong

Venue: Three Star Theatrette

Time: 11:30-12:00, May 23, Friday, 2008

Abstract

Humans have striking capabilities to perform

many complex motor tasks such as carving and manipulating objects. This means

that humans can learn to compensate skillfully for the forces arising from the

interaction with the environment. As an attempt to understand motor adaptation,

this work introduces a model of neural adaptation to novel dynamics and

simulates its behavior in representative stable and unstable environments. The

proposed adaptation mechanism, realized in muscle space, utilizes the stretch

reflex to update the feedforward motor command, and

selective deactivation to decrease coactivation of

agonist-antagonist muscles not required to stabilize movement. Simulations on a

2-link 6-muscle model show that motion trajectories, evolution of muscle

activity, and final endpoint impedance are consistent with experimental

results. Such computational models, using only measurable variables and simple computation, may be

used to simulate the effect of neuro-muscular

disorders on movement control, to develop better controllers for haptic devices and neural prostheses, as well as to design

novel rehabilitation approaches.

Bio-data

Tee Keng Peng received the B.Eng degree

and the M.Eng degree from the National University of

Singapore, in 2001 and 2003 respectively, both in mechanical engineering. Since

2004, he has been pursuing the Ph.D. degree at the Department of Electrical and

Computer Engineering, National University of Singapore. In 2008, he joined the

Neural Signal Processing Group at I2R as a research engineer. His

current research interests include adaptive control theory and applications,

robotics, motor control, and brain-computer interfaces.

Seminar 3

Title: Personalising a Talking Head

Speaker: Dr Arthur Niswar,

CGI/P3DES, I2R

Chaired by: Dr Huang Zhiyong

Venue: Three Star Theatrette

Time: 11:30-12, May 9, Friday, 2008

Abstract

A talking head is a facial animation system

combined with a TTS (Text-To-Speech) system to produce audio-visual

speech. The facial animation system is built by creating the head/face model with

the required parameters for speech animation. To build the head model, the

facial data of the subject have to be recorded. For this, usually hundreds of

markers have to be put on the subject's face, which is a laborious process,

especially if one wants to create the head model for another person. This

process can be simplified by modifying the previously constructed head model

using two images of the person, which is the subject of this talk.

Bio-data

Arthur Niswar

got his B.Eng. in Electrical Engineering from Bandung Institute of Technology (

Seminar 2

Title: Creating Pervasive and Smart Projected Displays

Speaker: Dr Song Peng, CGI/P3DES, I2R

Chaired by: Dr Huang Zhiyong

Venue: Three Star Theatrette

Time: 11:30-12, May 2, Friday, 2008

Abstract

Projectors and cameras nowadays are

becoming less expensive, more compact and mobile. With off-the-shelf equipment and

software support, projected displays can be set up quickly on almost any

surface, breaking the traditional confines of space limits and projection

surfaces. In order to create desirable displays using projectors and cameras,

there are issues to be addressed, such as geometric and photometric

distortions, out-of-focus blurring, etc. This talk gives an overview of

projector-camera systems, followed by the problems and

solutions in creating pervasive and smart projected displays.

Bio-data

Song Peng

received his B.S. in Computer Science and B.A. in English Language from

Seminar 1

Title: How is image information processed in the human visual system (HVS)

Speaker: Dr Tang Huajin, CGI/P3DES,

I2R

Chaired by: Dr Huang Zhiyong

Venue: Three Star Theatrette

Time: 11:30-12, April 18, Friday, 2008

Abstract

This talk will cover some important

discoveries so far on the structure and functions of the human visual system. The

core area in the brain, the primary visual cortex, is believed to be organized to

realize some fundamental functions, such as edge detection and motion detection,

and then to support high-level perception, including object recognition. The

experimental procedure used to investigate the visual cortex is introduced, and the

computational model that underlies the computational principles of the visual

system is also presented. The aim of the research work is to establish a bridge

between neuroscience and computer science in the area of visual perception, and

to elicit novel, intelligent and efficient methods for image analysis, computer

vision, etc.

Bio-data

Huajin Tang received the B.Eng and

M.Eng degrees from

He has authored or coauthored a number of papers in

peer-reviewed international journals, including IEEE Trans. on Neural Networks,

Circuits & Systems, Neural Computation, Neurocomputing,

etc. He has also coauthored one monograph in 2007 published by Springer in his

research area. His research interests include machine learning, neural networks, computational and biological intelligence.
