Deep Reinforcement Learning

NUS SoC, 2018/2019, Semester II

CS 6101 - Exploration of Computer Science Research, Thu 15:00-17:00 @ MR6 (AS6 #05-10)

This course is taken almost verbatim from CS 294-112 Deep Reinforcement Learning – Sergey Levine’s course at UC Berkeley. We are following his course’s formulation and selection of papers, with the permission of Levine.

This is a section of the CS 6101 Exploration of Computer Science Research at NUS. CS 6101 is a 4 modular credit pass/fail module for new incoming graduate programme students to obtain background in an area with an instructor’s support. It is designed as a “lab rotation” to familiarize students with the methods and ways of research in a particular research area.

Our section will be conducted as a group seminar, with class participants nominating themselves and presenting the materials and leading the discussion. It is not a lecture-oriented course and not as in-depth as Levine’s original course at UC Berkeley, and hence is not a replacement, but rather a class to spur local interest in Deep Reinforcement Learning.

This course is offered in Session I (Weeks 3-7) and Session II (Weeks 8-13), although it is clear that the course is logically a single course that builds on the first half. Nevertheless, the material should be introductory and should be understandable given some prior study.

A mandatory discussion group is on Slack. Students and guests, please login when you are free. If you have a @comp.nus.edu.sg, @u.nus.edu, @nus.edu.sg, @a-star.edu.sg, @dsi.a-star.edu.sg or @i2r.a-star.edu.sg. email address you can create your Slack account for the group discussion without needing an invite.

For interested public participants, please send Min an email at kanmy@comp.nus.edu.sg if you need an invite to the Slack group. The Slack group is being reused from previous semesters. Once you are in the Slack group, you can consider yourself registered for the course.

Details

Registration FAQ

-

What are the pre-requisites?

From the original course: Machine Learning or equivalent is a prerequisite for the course. This course will assume some familiarity with reinforcement learning, numerical optimization, and machine learning. For introductory material on RL and MDPs, see the CS188 EdX course, starting with Markov Decision Processes I, as well as Chapters 3 and 4 of Sutton & Barto.

For our NUS course iteration, we believe you should also follow the above pre-requisites, where possible.

there are no formal prerequisites for the course.As with many machine learning courses, it would be useful to have basic understanding of linear algebra, machine learning, and probability and statistics. Taking online, open courses on these subjects concurrently or before the course is definitely advisable, if you do not have to requisite understanding. You might try to follow the preflight video lectures, and if these are understandable to you, then you’re all good. -

Is the course chargeable? No, the course is not chargeable. It is free (as in no-fee). NUS allows us to teach this course for free, as it is not “taught”, per se. Students in the class take charge of the lectures, and complete a project, while the teaching staff facilitates the experience.

-

Can I get course credit for taking this? Yes, if you are a first-year School of Computing doctoral student. In this case you need to formally enroll in the course as CS6101, And you will receive one half of the 4-MC pass/fail credit that you would receive for the course, which is a lab rotation course. Even though the left rotation is only for half the semester, such students are encouraged and welcome to complete the entire course.

No, for everyone else. By this we mean that no credits, certificate, or any other formal documentation for completing the course will be given to any other participants, inclusive of external registrants and NUS students (both internal and external to the School of Computing). Such participants get the experience of learning deep learning together in a formal study group in developing the camaraderie and network from fellow peer students and the teaching staff.

- What are the requirements for completing the course? Each student must achieve 2 objectives to be deemed to have completed the course:

- Work with peers to assist in teaching two lecture sessions of the course: One lecture by co-lecturing the subject from new slides that you have prepared a team; and another lecture as a scribe: moderating the Slack channel to add materials for discussion and taking public class notes. All lecture materials by co-lecturers and scribes will be made public.

- Complete a deep reinforcement learning project. For the project, you only need to use any deep learning framework to execute a problem against a data set. You may choose to replicate previous work from others in scientific papers or data science challenges. Or more challengingly, you may decide to use data from your own context.

-

How do external participants take this course? You may come to NUS to participate in the lecture concurrently with all of our local participants. You are also welcome to participate online through Google Hangouts. We typically have a synchronous broadcast to Google Hangouts that is streamed and archived to YouTube.

During the session where you’re responsible for co-lecturing, you will be expected to come to the class in person.

As an external participant, you are obligated to complete the course to best your ability. We do not encourage students who are not committed to completing the course to enrol.

Meeting Venue and Time

For both Sessions (I and II): 15:00-17:00, Thursdays at Meeting Room 6 (AS6 #05-10)

For directions to NUS School of Computing (SoC) and COM1: please read the directions here, to park in CP13 and/or take the bus to SoC. and use the floorplan

People

Welcome. If you are an external visitor and would like to join us, please email Kan Min-Yen to be added to the class role. Guests from industry, schools and other far-reaching places in SG welcome, pending space and time logistic limitations. The more, the merrier.

External guests will be listed here in due course once the course has started. Please refer to our Slack after you have been invited for the most up-to-date information.

NUS (Postgraduate): Session I (Weeks 3-7): Yong Liang Goh, Yihui Chong, Qian Lin, Yu Ning, Saravanan Rajamanickam, Ying Kiat Tan

NUS (Postgraduate): Session II (Weeks 8-13):

NUS (Undergraduate, Cross-Faculty and Alumni): Daniel Biro, Joel Lee, Yong Ler Lee

WING: Kishaloy Halder, Min-Yen Kan, Jethro Kuan, Liangming Pan, Chenglei Si, Weixin Wang,

Guests: Shen Ting Ang, Takanori Aoki, Vicky Feliren, Theodore Galanos, Alexandre Gravier, Markus Kirchberg, Joash Lee, Joo Gek Lim, Wuqiong Luo (Nick), Xiao Nan, Shi Kang Ng, Vikash Ranjan, Praveen Sanap, Kok Keong Teo

Schedule

Schedule

Don’t limit yourself to the materials provided by Levine when preparing. There are also other good resources such as those prepared by Silver on traditional RL. We’ll try to post links and synchronize where possible.

| Date | Description | Deadlines |

|---|---|---|

| Preflight Week of 17, 24 Jan |

Introduction and Course Overview

[Video (@UCB),

Slides (@UCB)]

Supervised Learning and Imitation [Video (@UCB), Slides (@UCB)] , & TensorFlow and Neural Nets Review Session (notebook) [Video (@UCB), Slides (@UCB)] |

|

| Week 3 31 Jan |

Reinforcement Learning Introduction

[Video (@UCB),

Slides (@UCB)]

,

Policy Gradients Introduction [Video (@UCB), Slides (@UCB)] , & [ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 4 7 Feb |

Actor-Critic Introduction

[Video (@UCB),

Slides (@UCB)]

Value Functions and Q-Learning [Video (@UCB), Slides (@UCB)] , & [ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 5 14 Feb |

Advanced Q-Learning Algorithms

[Video (@UCB),

Slides (@UCB)]

[ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 6 21 Feb |

Advanced Policy Gradients

[Video (@UCB),

Slides (@UCB)]

[ » Scribe Notes ] [ » Recording @ YouTube ] |

Preliminary project titles and team members due on Slack's #projects

|

| Recess Week 28 Feb |

Optimal Control and Planning

[Video (@UCB),

Slides (@UCB)]

[ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 7 7 Mar |

Model-Based Reinforcement Learning

[Video (@UCB),

Slides (@UCB)]

Advanced Model Learning and Images [Video (@UCB), Slides (@UCB)] [ » Scribe Notes ] [ » Recording @ YouTube ] |

Preliminary abstracts due to #projects

|

| Week 8 14 Mar |

Learning Policies by Imitating Other Policies

[Video (@UCB),

Slides (@UCB)]

[ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 9 21 Mar |

Probability and Variational Inference Primer

[Video (@UCB),

Slides (@UCB)]

Connection between Inference and Control [Video (@UCB), Slides (@UCB)] [ » Scribe Notes ] [ » Recording @ YouTube ] |

|

| Week 10 28 Mar |

Inverse Reinforcement Learning

[Video (@UCB),

Slides (@UCB)]

[ » Recording @ YouTube ] |

|

| Week 11 4 Apr |

(warning: we skip a few lectures to get here) Exploration: Part 1 [Video (@UCB), Slides (@UCB)] , & Exploration: Part 2 [Video (@UCB), Slides (@UCB)] [ » Recording @ YouTube ] |

|

| Week 12 11 Apr |

Parallelism and RL System Design

[Video (@UCB),

Slides (@UCB)]

[ » Slides (local, .pdf) ] [ » Recording @ Youtube ] |

|

| Week 13 18 Apr |

Recent Advances in Game RL: OpenAI 5's DotA 2

[ » Slides (local, .pdf) ]

[ » Recording @ Youtube ] |

Participation on evening of 14th STePS |

Projects

Student Projects

We had 5 final student projects executed out of 11 proposed that were featured as part of the class’ participation in the 14th School of Computing Term Project Showcase (14 STePS).

Thank you to all of the students who worked on the project and finished theirs to completion! We had a single prize and two runner ups:

-

Deep Q Network Flappy Bird by Lee Yong Ler.

Award Winner, 1st Prize

-

A curriculum-based approach to learning a universal policy by Prasanna (Bala), Joash Lee, Kishaloy Halder, Lim Joo Gek.

Runner Up

Due to a mismatch between simulation and real-world domains (commonly termed the ‘reality gap’), robotic agents trained in simulation often may not perform successfully when transferred to the real-world. This problem may be addressed through domain randomisation, such that a single universal policy is trained to adapt to different environmental dynamics. However, existing methods do not prescribe a priori the order in which tasks are presented to the learner. We present a curriculum-based approach where the difficulty level of training corresponds to the number of variables that are randomised. Experiments are conducted on the inverted pendulum, a classic control problem.

Due to a mismatch between simulation and real-world domains (commonly termed the ‘reality gap’), robotic agents trained in simulation often may not perform successfully when transferred to the real-world. This problem may be addressed through domain randomisation, such that a single universal policy is trained to adapt to different environmental dynamics. However, existing methods do not prescribe a priori the order in which tasks are presented to the learner. We present a curriculum-based approach where the difficulty level of training corresponds to the number of variables that are randomised. Experiments are conducted on the inverted pendulum, a classic control problem. -

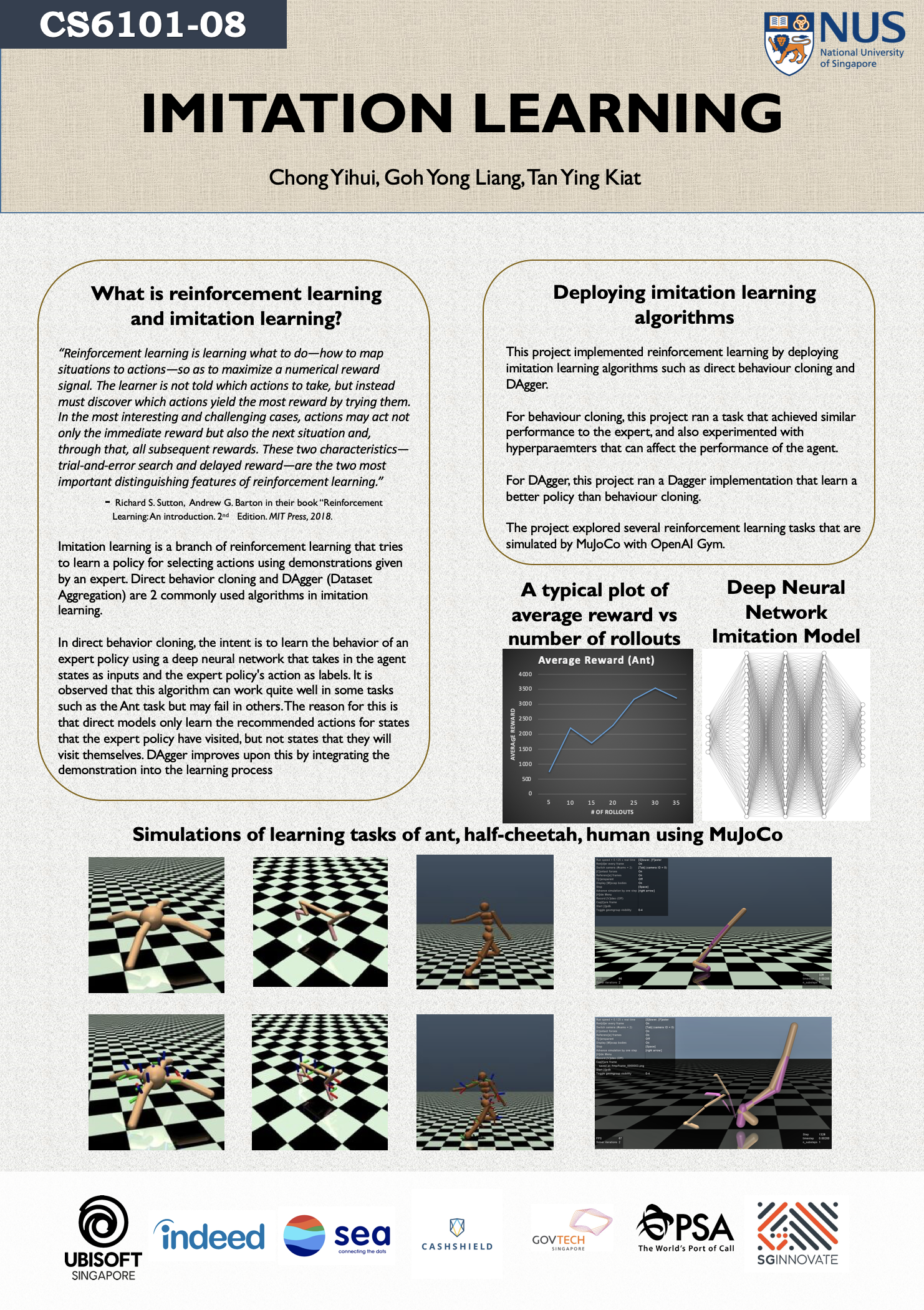

Imitation Learning by Liangming Pan, Tan Ying Kiat, Chong Yihui, Goh Yong Liang.

Runner Up

This project explores reinforcement learning by implementing and deploying imitation learning algorithms such as direct behavior cloning and DAgger. Imitation learning is a branch of reinforcement learning that tries to learn a policy for selecting actions using demonstrations given by an expert. In this project, we explored several reinforcement learning tasks that are simulated by MuJoCo with OpenAI Gym. Direct behavior cloning and DAgger are 2 commonly used algorithms in imitation learning. In direct behavior cloning, we attempted to learn the behavior of an expert policy using a deep neural network that takes in the agent states as inputs and the expert policy’s action as labels. It is observed that this algorithm can work quite well in some tasks such as the Ant task and fail in others. The reason for this is that direct models only learn the recommended actions for states that the expert policy have visited and not states that they will visit themselves. DAgger improves upon this by integrating the demonstration into the learning process.

This project explores reinforcement learning by implementing and deploying imitation learning algorithms such as direct behavior cloning and DAgger. Imitation learning is a branch of reinforcement learning that tries to learn a policy for selecting actions using demonstrations given by an expert. In this project, we explored several reinforcement learning tasks that are simulated by MuJoCo with OpenAI Gym. Direct behavior cloning and DAgger are 2 commonly used algorithms in imitation learning. In direct behavior cloning, we attempted to learn the behavior of an expert policy using a deep neural network that takes in the agent states as inputs and the expert policy’s action as labels. It is observed that this algorithm can work quite well in some tasks such as the Ant task and fail in others. The reason for this is that direct models only learn the recommended actions for states that the expert policy have visited and not states that they will visit themselves. DAgger improves upon this by integrating the demonstration into the learning process. -

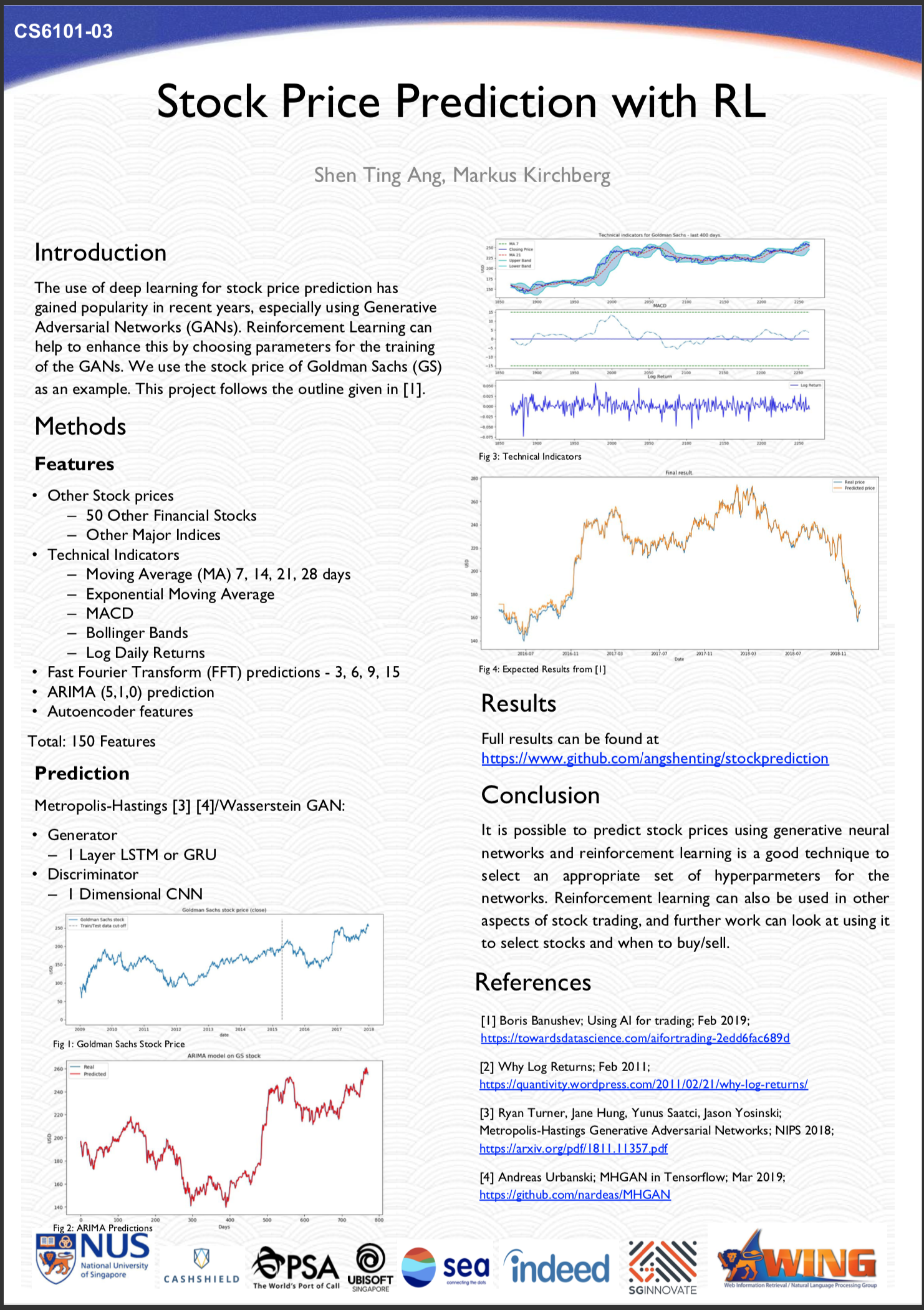

Stock Price Prediction with RL by Ang Shen Ting, Markus Kirchberg.

Deep Learning can be used to learn patterns in stock prices and volume, and also to understand news data surrounding stocks. We follow an outline listed by Boris Banushev using Reinforcement Learning to control the hyperparameters of GANs and LSTMs used for predicting stock price movement.

Deep Learning can be used to learn patterns in stock prices and volume, and also to understand news data surrounding stocks. We follow an outline listed by Boris Banushev using Reinforcement Learning to control the hyperparameters of GANs and LSTMs used for predicting stock price movement. -

Model-based Reinforcement Learning by Lin Qian, Weixin Wang.

Model-based reinforcement learning refers to learning a model of the environmental by taking action and observing the results including the next state and the immediate rewards, and indirectly learning the optimal behavior. The model predicts the outcome of the action and is used to replace or supplement the interaction with the environment to learn the optimal policy. Model-based reinforcement learning consists of two main parts: learning a dynamics model, and using a controller to plan and execute actions that minimize a cost function. In this project, we will explore model-based reinforcement learning in terms of dynamics models and control logics.

Model-based reinforcement learning refers to learning a model of the environmental by taking action and observing the results including the next state and the immediate rewards, and indirectly learning the optimal behavior. The model predicts the outcome of the action and is used to replace or supplement the interaction with the environment to learn the optimal policy. Model-based reinforcement learning consists of two main parts: learning a dynamics model, and using a controller to plan and execute actions that minimize a cost function. In this project, we will explore model-based reinforcement learning in terms of dynamics models and control logics.

Other Links

If you are already registered for the course, please find (and post) resources to the #general Slack channel.

- UCB’s Subreddit r/berkeleydeeprlcourse - https://www.reddit.com/r/berkeleydeeprlcourse/

RL Textbooks and Lectures:

- Sutton and Barto, Reinforcement Learning: An Introduction - free e-book on general RL inclusive of Deep RL. Becoming a standard text. http://incompleteideas.net/book/the-book-2nd.html

- Szepesvari, Algorithms for Reinforcement Learning - https://sites.ualberta.ca/~szepesva/RLBook.html

- **David Silver’s lectures (some on YouTube): http://www0.cs.ucl.ac.uk/staff/d.silver/web/Teaching.html. Some have expressed that this is easier to start with.

- (Easier) CS 188, UC Berkeley. Two introduction lectures on Reinforcement Learning (scroll down to Week 5), video links included - http://inst.eecs.berkeley.edu/~cs188/fa18/

- For others, please see the original list from Levine:

General Texts:

- Ian Goodfellow, Yoshua Bengio and Aaron Courville Deep Learning - an MIT Press book http://www.deeplearningbook.org/

- Michael A. Nielsen, Neural Networks and Deep Learning - free, general e-book - http://neuralnetworksanddeeplearning.com/

RL computing resources / frameworks:

- Open AI Gym - https://gym.openai.com/

- Google Dopamine - https://github.com/google/dopamine/

Previous CS6101 versions run by Min:

- Deep Learning for NLP (reprise) - http://www.comp.nus.edu.sg/~kanmy/courses/6101_1810

- Deep Learning via Fast.AI - http://www.comp.nus.edu.sg/~kanmy/courses/6101_2017_2/

- Deep Learning for Vision - http://www.comp.nus.edu.sg/~kanmy/courses/6101_2017/

- Deep Learning for NLP - http://www.comp.nus.edu.sg/~kanmy/courses/6101_2016_2/

- MOOC Research - http://www.comp.nus.edu.sg/~kanmy/courses/6101_2016/