Seminars

by the visitors

Title: Cognitive Affordances of Envisionment

Scenarios

Speaker: Professor John M. Carroll

Pennsylvania State

University

Venue: Franklin, Floor 11 South, Fusionopolis, I2R

Time: 9:30-10:15,

Thu, February 2, 2012

Chaired by: Jamie Ng Suat Ling

Abstract

Professor John M. Carroll will present his argument that scenarios, as

design artifacts, support cognitive processes that reinforce the strengths and

mitigate the weaknesses of a variety of design approaches, including

solution-first design, opportunistic design, requirements/specification design,

participatory design, and positive design.

Biographical sketch

Professor John M. Carroll is Edward Frymoyer

Professor of Information Sciences and Technology at the Pennsylvania State

University. His research is in methods and theory in human-computer

interaction, particularly as applied to networking tools for collaborative

learning and problem solving, and design of interactive information systems.

Books include Making Use (MIT, 2000), Usability Engineering (Morgan Kaufmann,

2002, with M.B. Rosson), Rationale-Based Software

Engineering (Springer, 2008, with J. Burge, R. McCall and I. Mistrik), Learning in Communities (Springer, 2009), and The

Neighborhood in the Internet (Routledge,

2012). Carroll serves on several editorial boards for journals, handbooks, and

series. He is Editor of the Synthesis Lectures on Human-Centered

Informatics. Carroll has received the Rigo Award and

the CHI Lifetime Achievement Award from ACM, the Silver Core Award from IFIP, and the Goldsmith Award from IEEE. He is a fellow of AAAS, ACM,

IEEE, HFES and APS.

Title: Exploring the Physical Foundations of Haptic Perception

Speaker: Professor

Vincent Hayward

Université Pierre et

Marie Curie

Venue: Franklin, Floor 11 South, Fusionopolis, I2R

Time: 16:00-16:45,

Thu, June 2, Part 1, Small scale interaction; 14:00-14:45, Fri, June 3, Part 2,

Large scale interactions and vibrations

Chaired by: Dr Louis Fong Wee Teck

Abstract

During mechanical interaction with our environment, we

derive a perceptual experience which may be compared to that resulting from

acoustic or optical stimulation. New mechanical stimulation delivery equipment

capable of fine segregation of distinct cues at different length scales and

different time scales now allows us to study the many aspects of haptic perception including its physics, its biomechanics,

and the computations that the nervous system must perform to achieve a

perceptual outcome. This knowledge is rich in applications ranging from

improved diagnosis of pathologies, to rehabilitation devices, to consumer

electronics and virtual reality systems.

Biographical sketch

Vincent Hayward, Ing. Ecole Centrale

de Nantes 1978; Ph.D. Computer Science 1981, University of Paris. Chargé de recherches at CNRS, France

(1983-86). Professor of Electrical and Computer

Engineering at McGill University. Hayward is interested in haptic device design and applications, perception, and

robotics. He is leading the Haptics Laboratory at

McGill University and was the Director of the McGill Center

for Intelligent Machines (2001-2004). Over 160 reviewed papers, 8 patents, 4

pending. Spin-off companies: Haptech (1996), now

Immersion Canada Inc. (2000), RealContact (2002). Co-Founder

Experimental Robotics Symposia, Program Vice-Chair 1998 IEEE Conference on

Robotics and Automation, Program Vice-Chair ISR2000, past Associate Editor IEEE

Transactions on Robotics and Automation, Governing board Haptics-e,

Editorial board of the ACM Transactions on Applied Perception and of the IEEE

Transactions on Haptics. Three

times leader of national projects of IRIS, the Institute for Robotics and

Intelligent Systems (Canada's Network of Centers of

Excellence Program-NCE), and past member of the IRIS Research Management Board.

Keynote Speaker, IFAC Symposium on Robot Control 2006. Keynote Speaker, Eurohaptics 2004. The E. (Ben) & Mary Hochhausen Award for Research in Adaptive Technology For

Blind and Visually Impaired Persons (2002). Plenary Speaker, Workshop on

Advances in Interactive Multimodal Telepresence

Systems, Munich, Germany (2001). Keynote, IEEE ICMA, Osaka, Japan (2001).

Distinguished Lecturer Series, Department of Computing Science, University of

Alberta (2000). Fellow of the IEEE (2008).

Title: Seeing Through Augmented Reality

Speaker: Professor Steven Feiner

Columbia University

Venue: Franklin, Floor 11 South, Fusionopolis, I2R

Time: 10:45-11:45, Wed, March 23, 2011

Chaired by: Dr Mark David Rice

Abstract

Augmented Reality (AR) overlays the real world with a

complementary virtual world, presented through the use of tracked displays,

typically worn or held by the user, or mounted in the environment. Researchers

have been actively exploring AR for over forty years, beginning with Ivan

Sutherland's pioneering development of the first optical see-through head-worn

display. In this talk, I will share my thoughts about where AR has been, where

it is now, and where it might be headed in the future. I will illustrate the

talk with examples from work being done by Columbia's Computer Graphics and

User Interfaces Lab, ranging from assisting users in performing complex

physical tasks, such as equipment maintenance and repair, to creating compelling

games in which players interact with a mix of real and virtual objects.

Biographical sketch

Steven Feiner is Professor of Computer Science

at Columbia University, where he directs the Computer Graphics and User

Interfaces Lab. His research interests include human-computer interaction,

augmented reality and virtual environments, 3D user interfaces, knowledge-based

design of graphics and multimedia, mobile and wearable computing, computer

games, and information visualization. His lab created the first outdoor mobile

augmented reality system using a see-through display in 1996, and has pioneered

experimental applications of augmented reality to fields such as tourism,

journalism, maintenance, and construction. Prof. Feiner

is coauthor of Computer Graphics: Principles and

Practice, received an ONR Young Investigator Award, and was elected to the CHI

Academy. Together with his students, he has won the ACM UIST Lasting Impact

Award and best paper awards at ACM UIST, ACM CHI, ACM VRST, and IEEE ISMAR.

Title: Activity-based Ubicomp - A

New Research Basis for the Future of Human-Computer Interaction

Speaker: Professor James A. Landay

Short-Dooley Professor

Computer Science &

Engineering

Visiting Faculty Researcher

Microsoft Research

Venue: Infuse Theatre, Level 14, Fusionopolis, I2R

Time: 10:30-11:30,

Tue, December 21, 2010

Chaired by: Jamie Ng Suat Ling

Abstract

Ubiquitous computing (Ubicomp)

is bringing computing off the desktop and into our everyday lives. For example,

an interactive display can be used by the family of an elder to stay in

constant touch with the elder’s everyday wellbeing, or by a group to visualize

and share information about exercise and fitness. Mobile sensors, networks, and

displays are proliferating worldwide in mobile phones, enabling this new wave

of applications that are intimate with the user’s physical world. In addition

to being ubiquitous, these applications share a focus on high-level activities,

which are long-term social processes that take place in multiple environments

and are supported by complex computation and inference of sensor data. However,

the promise of this Activity-based Ubicomp is

unfulfilled, primarily due to methodological, design, and tool limitations in

how we understand the dynamics of activities. The traditional cognitive

psychology basis for human-computer interaction, which focuses on our

short-term interactions with technological artifacts, is insufficient for achieving

the promise of Activity-based Ubicomp. We are

developing design methodologies and tools, as well as activity recognition

technologies, both to demonstrate the potential of Activity-based Ubicomp and to support designers in fruitfully

creating these types of applications.

Biographical sketch

James Landay is the Short-Dooley

Professor of Computer Science & Engineering at the

Landay received his B.S. in EECS from

UC Berkeley in 1990 and M.S. and Ph.D. in CS from

Title: Research and Applications in Affective and

Pleasurable Design

Speaker: Professor Martin Helander

Head, Division of System

and Engineering Management

Nanyang Technological University

Venue:

Time: 13:30-15:00,

Mon, November 29, 2010

Chaired by: Jamie Ng Suat Ling

Abstract

During the last 10 years there has been a rapid growth

in research concerning affective and pleasurable design. This presentation

gives a review of past research and needs for future research. Affect is said

to be the customer’s psychological response to the design details of the

product, while pleasure is the emotion that accompanies the acquisition or

possession of a desirable product (Demirbilek and Sener, 2003). Emotional design has gained significant

attention in interaction design; the iPod is the runaway best seller. To

consumers, it is easy to use and aesthetically appealing - it is cool, it feels

good. And perhaps more important - good emotional design actually improves

usability (Khalid and Helander, 2004). The purpose of

this presentation is to summarize the results of research studies including

research that was conducted at NTU. The main focus is on design: How can a

researcher propose an affective design solution - for example of a mobile

phone? How can a researcher gather information to evaluate the affective

reactions to product design? Examples of affective product design are given.

Biographical sketch

Professor Martin Helander is

a prominent human factors specialist in the region. Currently, he is the program

director for the Masters of Science program in Human Factors Engineering at

Title: Interacting with Virtual Humans and Social Robots

Speaker: Professor Nadia Magnenat-Thalmann

Director

Institute for Media Innovation

Nanyang Technological

Director

Venue:

Time: 10:00-11:00,

Tue, November 2, 2010

Chaired by: Dr

Abstract

Over the past few decades, we have seen the advent of Virtual

Humans and Social Robots. In our presentation, we will describe our research on

how to define a common platform for interacting emotionally with Virtual Humans

and Social Robots. To interact emotionally with them, we need to

define personality models, memory processes, and

relationship models. These features are largely inspired by models defined by

Psychologists. This kind of research is still in its infancy but has a bright

future. Children could play better with social robots than with normal dolls as

these virtual characters will be aware of the child's presence and emotions and

capable of acting or of learning together with the child. Indeed, as most populations,

particularly in the Western world, are aging, Virtual Humans and Social robots

could assist and coach elderly people, playing the role of therapists or

companions, decreasing their sense of loneliness and helping them in the long

run. In our talk, we will show how we create some kind of awareness for these

virtual entities and how they can today start to interact emotionally with us,

recognize and remember us.

Biographical sketch

Prof. Nadia Magnenat Thalmann has pioneered research into virtual humans over

the last 30 years. She obtained several Bachelor's and Master's degrees in

various disciplines (Psychology, Biology and Biochemistry) and a PhD in Quantum

Physics from the

From 1989 to 2010, she has been a Professor at the

Together with her PhD students, she has published more than 550 papers

on virtual humans and virtual worlds with applications in 3D clothes, hair,

body modeling, Interactive emotional Virtual Humans and Social Robots and

medical simulation of articulations.

In 2009, she received a Dr Honoris Causa from the Leibniz University of Hanover in

Title: Review of various methods for 3D reconstruction and

modeling

Speaker: Prof. Narendra Ahuja

Donald Biggar

Willett Professor

and also

Department of Electrical

and Computer Engineering,

Coordinated Science

Laboratory, and

Beckman Institute

Urbana, Illinois, USA

Venue: Nexus, 13N, Connexis, Fusionopolis, I2R

Time: 3:00-4:00,

Fri, October 15, 2010

Chaired by: Dr Louis

Abstract

In this talk, I will present the various methods for

3D reconstruction and modeling that our group has worked on and tested. I will

also share the lessons learnt. In particular, I will present our

human face reconstruction work.

Biographical sketch

Narendra Ahuja received the B.E. degree with honors in

electronics engineering from the Birla Institute of Technology and Science, Pilani, India, in 1972, the M.E. degree with distinction in

electrical communication engineering from the Indian Institute of Science,

Bangalore, India, in 1974, and the Ph.D. degree in computer science from the

University of Maryland, College Park, USA, in 1979. From 1974 to 1975 he was

Scientific Officer in the Department of Electronics, Government of India,

Title: Multi-view 3D Object Reconstruction: Segmentation,

Modeling and Beyond

Speaker: Dr Cai Jianfei

Associate Professor

Head, Division of Computer

Communications

Nanyang Technological University

http://www3.ntu.edu.sg/home/asjfcai/

Venue:

Time: 10:30-11:30,

Thu, September 30, 2010

Chaired by: Dr Huang Zhiyong

Abstract

In this talk, I am going to share some of our findings

in a completed A*STAR funded project on multi-view 3D object reconstruction. In

particular, I will touch on a few major components in the pipeline of 3D object

reconstruction, including interactive image segmentation and 3D object

reconstruction. Our developed interactive image segmentation tool outperforms

quite a few state-of-the-art methods including GrabCut

and Random Walks. For the 3D reconstruction, we introduce the rate-distortion

concept into the process. In addition, I will discuss how to efficiently

deliver reconstructed 3D models in bandwidth-limited environments such as

wireless networks.

Biographical sketch

Cai Jianfei received his PhD degree from

University of Missouri-Columbia in 2002. Currently, he is the Head of the

Division of Computer Communications at

Title: SoundNet: An Environmental Sound

Augmented Online Language Assistant Bridging Communication Barriers

Speaker: Dr Ma Xiaojuan

PhD,

http://www.cs.princeton.edu/~xm/home/

Research Fellow

Department of Information Systems

Venue:

Time: 3:00-4:00,

Tue, August 31, 2010

Chaired by: Jamie Ng Suat Ling

Abstract

Daily communication (e.g. conversation and reading

newspapers) is usually carried out in natural languages. However, for people with language disabilities (e.g. aphasia), people

with low literacy, and people with poor command of a language, receiving and

expressing information via a spoken/written language is difficult. In

particular, because of the inability to comprehend words and/or to find words

that express intended concepts, people with communication barriers may face

great challenges performing everyday tasks such as ordering food in a

restaurant and visiting a doctor. As an alternative to words, pictorial

languages have been designed, tested, verified and used to evoke concepts in

computer interfaces, education, industry, and advertisement. However, icons

created by artists and user-uploaded photos cannot always satisfy the need for

communicating everyday concepts across language barriers.

SoundNet, an essential

component of the Online Multimedia Language Assistant (OMLA) which employs

various multimedia stimuli to assist comprehension of common concepts,

introduces a new Augmentative and Alternative Communication Channel (i.e.

non-speech audio). The frontend of SoundNet is a popup

dictionary in the form of a web browser extension. Users can select an

unfamiliar word on a webpage to listen to its associated environmental auditory

representation in a popup box. It aims to enhance concept understanding as

people browse information on the Internet, and support face-to-face

communication when people want to illustrate a term via a sound as their

conversation partner does not understand the spoken word.

The backend of SoundNet is a lexical network

associated with a large collection of non-speech audio data. To develop the sound

enhanced semantic database, we went through a cycle of design, construction,

evaluation, and modification for each stimulus-to-concept association. Soundnails, five-second environmental audio clips, were

extracted from sound effects libraries, and assigned to a vocabulary of common

concepts. Two large scale studies were conducted via Amazon Mechanical Turk to

evaluate the efficacy of soundnail representations.

The first was a tagging study which collected human interpretations of the

sources, locations, and interactions involved in the sound scene. The second

study investigated how well people can understand words encoded in soundnails which are embedded in sentences compared to

conventionally used icons and animations.

Results showed that non-speech audio clips are better at distinguishing

concepts like thunder, alarm, and sneezing. Guidelines on designing effective

auditory representations were proposed based on the findings.

Biographical sketch

MA, Xiaojuan, Ph.D.,

graduated from

Title: Human-Friendly Robotics

Speaker: Professor Oussama Khatib

Artificial Intelligence Laboratory

Department of Computer Science

Visiting Scientist, I2R

Phone: +1 (650)723-9753

Fax: +1 (650) 725-1449

http://robotics.stanford.edu/~ok/

Venue:

Time: 2:00-3:00,

Thu, Jan 15, 2009

Chaired by: Dr Huang Zhiyong

Abstract

Robotics is rapidly expanding into the human

environment and vigorously engaged in its new emerging challenges. Interacting, exploring, and working with humans, the new generation of

robots will increasingly touch people and their lives. The successful

introduction of robots in human environments will rely on the development of

competent and practical systems that are dependable, safe, and easy to use.

This presentation focuses on our ongoing effort to develop human-friendly

robotic systems that combine the essential characteristics of safety,

human-compatibility, and performance. In the area of human-friendly robot

design, our effort has focused on new design concepts for the development of

intrinsically safe robotic systems that possess the requisite capabilities and

performance to interact and work with humans. Robot design has traditionally

relied on the use of rigid structures and powerful motor/gear systems in order

to achieve fast motions and produce the needed contact forces. While suited for

a multitude of tasks in industrial robot applications, the resulting systems are

certainly unsafe for human interaction, as they can lead to hazardous impact

forces should the robot unexpectedly collide with its environment.

Our work on human-friendly robot design has led to a novel actuation

approach that is based on the so-called Distributed Macro Mini (DM2) Actuation

concept. DM2 combines the use of small motors at the joints with pneumatic,

muscle-like actuators remotely connected by cables. With this hybrid actuation,

the impedance of the resulting robot is decreased by an order of magnitude,

making it substantially safer without sacrificing performance. To further

increase the robot safety during its interactions with humans, we have

developed an impact absorbent skin that covers its structure. In the area of

human-motion synthesis, our objective has been to analyze human motion to

unveil its underlying characteristics through the elaboration of its

physiological basis, and to formulate general strategies for interactive

whole-body robot control. Our exploration has employed models of human

musculoskeletal dynamics and used extensive experimental studies of human

subjects with motion capture techniques. This investigation has revealed the

dominant role physiological characteristics play in shaping human motion. Using

these characteristics we develop generic motion behaviors that efficiently and

effectively encode some basic human motion behaviors. To implement these

behaviors on robots with complex human-like structures, we developed a unified

whole-body task-oriented control structure that addresses dynamics in the

context of multiple tasks, multi-point contacts, and multiple constraints. The

performance and effectiveness of this approach are demonstrated through

extensive robot dynamic simulations and implementations on physical robots for

experimental validation.

Biographical sketch

Dr. Khatib is Professor of

Computer Science at

Title: Biomechanical Simulation of

the Human Face-Head-Neck Complex

Speaker:

Visiting Scientist, I2R

Phone: +1 (310) 206-6946

Fax: +1 (310) 794-5057

http://cs.ucla.edu/~dt/

Venue: Turing @ 13S, I2R

Time: 3:30-5:30,

Thu, Dec 18, 2008

Chaired by: Dr Ong Ee Ping

Abstract

Facial animation has a lengthy history in computer

graphics. To date, the industry has

concentrated either on labor-intensive keyframe or

motion-capture facial animation schemes. As an alternative, I will advocate the

highly automated animation of faces using physics-based and behavioral

simulation methods. To this end, we have developed a biomechanical model of the

face, including synthetic facial soft tissues with dozens of embedded muscle

actuators, plus a motor control layer that automatically coordinates the muscle

contractions to produce natural facial expressions. Despite its sophistication,

our model can nonetheless be simulated in real time on a PC. Unlike the human

face, the neck has been largely overlooked in the computer graphics literature,

this despite its intricate anatomical structure and the important role that it

plays in generating the controlled head movements that are essential to so many

aspects of human behavior. I will present a biomechanical model of the human

head-neck system.

Emulating the relevant anatomy, our model is characterized by

appropriate kinematic redundancy (7 cervical vertebrae coupled by 3-DOF joints)

and muscle actuator redundancy (72 neck muscles arranged in 3 muscle layers).

This anatomically consistent biomechanical model confronts us with challenging

dynamic motor control problems, even for the relatively simple task of

balancing the mass of the head in gravity atop the cervical spine. We develop a

neuromuscular control model for human head animation that emulates the relevant

biological motor control mechanisms. Employing machine learning techniques, the

neural networks in our neuromuscular controller are trained offline to

efficiently generate online pose and stiffness control signals for the

autonomous behavioral animation of the human head and face. We furthermore

augment our synthetic face-head-neck system with a perception model that

affords it a visual awareness of its environment, and we provide a sensorimotor response mechanism that links percepts to

meaningful behaviors.

Time permitting, I will preview our current

efforts to develop a comprehensive biomechanical simulator of the entire human

body, incorporating the aforesaid face-head-neck system. In this work we

confront the twofold challenge of modeling and controlling more or less all of

the relevant articular bones and muscles, as well as

simulating the physics-based deformations of the soft tissues.

Biographical sketch

Demetri Terzopoulos (PhD '84 MIT) is the Chancellor's

Professor of Computer Science at the

Title: Human-Centered Robotics

Speaker: Professor Oussama Khatib

Artificial Intelligence Laboratory

Department of

Visiting Scientist, I2R

Phone: +1 (650)723-9753

Fax: +1 (650) 725-1449

http://robotics.stanford.edu/~ok/

Venue: Big-One, I2R

Time: 10-12, Tue,

Nov 13, 2007

Chaired by: Dr Huang Zhiyong

Abstract

Robotics is rapidly expanding into human environments

and vigorously engaged in its new emerging challenges. Interacting, exploring, and working with humans, the new generation of

robots will increasingly touch people and their lives. The successful introduction

of robots in human environments will rely on the development of competent and

practical systems that are dependable, safe, and easy to use. This presentation

focuses on our ongoing effort to develop human-friendly robotic systems that

combine the essential characteristics of safety, human-compatibility, and

performance. In the area of human-friendly robot design, our effort has focused

on new design concepts for the development of intrinsically safe robotic

systems that possess the requisite capabilities and performance to interact and

work with humans. Robot design has traditionally relied on the use of rigid

structures and powerful motor/gear systems in order to achieve fast motions and

produce the needed contact forces. While suited for a multitude of tasks in

industrial robot applications, the resulting systems are certainly unsafe for

human interaction, as they can lead to hazardous impact forces should the robot

unexpectedly collide with its environment.

Our work on human-friendly robot design has led to a novel actuation approach

that is based on the so-called Distributed Macro Mini (DM2) Actuation concept.

DM2 combines the use of small motors at the joints with pneumatic, muscle-like

actuators remotely connected by cables. With this hybrid actuation, the

impedance of the resulting robot is decreased by an order of magnitude, making

it substantially safer without sacrificing performance. To further increase the

robot safety during its interactions with humans, we have developed an impact

absorbent skin that covers its structure. In the area of human-motion

synthesis, our objective has been to analyze human motion to unveil its

underlying characteristics through the elaboration of its physiological basis,

and to formulate general strategies for interactive whole-body robot control.

Our exploration has employed models of human musculoskeletal dynamics and used

extensive experimental studies of human subjects with motion capture

techniques. This investigation has revealed the dominant role physiological

characteristics play in shaping human motion. Using these characteristics we

develop generic motion behaviors that efficiently and effectively encode some

basic human motion behaviors. To implement these behaviors on robots with

complex human-like structures, we developed a unified whole-body task-oriented

control structure that addresses dynamics in the context of multiple tasks,

multi-point contacts, and multiple constraints. The performance and

effectiveness of this approach are demonstrated through extensive robot dynamic

simulations and implementations on physical robots for experimental validation.

Biographical sketch

Dr. Khatib is Professor of

Computer Science at

Title: Vision-Realistic Rendering

Speaker: Professor Brian A. Barsky

Professor of Computer Science

Affiliate Professor of Optometry and Vision Science

Visiting Scientist, I2R

Phone: +1 (510) 642-9838

http://www.eecs.berkeley.edu/Faculty/Homepages/barsky.html

Venue: Big-One, I2R

Time: Tue 3-3:50,

Aug 21, 2007

Chaired by: Dr Susanto Rahardja

Abstract

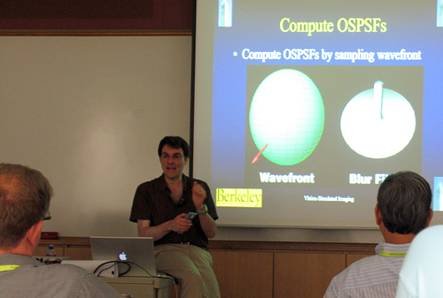

Vision-simulated imaging (VSI) is the computer generation of synthetic images

to simulate a subject's vision, by incorporating the characteristics of a

particular individual’s entire optical system. Using measured aberration data

from a Shack-Hartmann wavefront aberrometry

device, VSI modifies input images to simulate the appearance of the scene for

the individual patient. Each input image can be a photograph, synthetic image

created by computer, frame from a video, or standard Snellen

acuity eye chart -- as long as there is accompanying depth information. An eye

chart is very revealing, since it shows what the patient would see during an

eye examination, and provides an accurate picture of his or her vision. Using wavefront aberration measurements, we determine a discrete

blur function by sampling at a set of focusing distances, specified as a set of

depth planes that discretize the three-dimensional

space. For each depth plane, we construct an object-space blur filter. The VSI methodology comprises several steps: (1) creation of a set

of depth images, (2) computation of blur filters, (3) stratification of the

image, (4) blurring of each depth image, and (5) composition of the blurred

depth images to form a single vision-simulated image.
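
The five steps above can be illustrated with a small sketch. This is not the VSI implementation: a plain box filter stands in for the blur filters that the abstract derives from wavefront aberration data, pixels are assigned to their nearest depth plane, and the layers are composited by normalising with a blurred coverage mask. The names `box_blur`, `simulate_vision`, `plane_depths`, and `plane_radii` are hypothetical and chosen only for illustration.

```python
# Hypothetical sketch of a depth-stratified blur pipeline. A box filter stands
# in for the per-plane blur filters, which the abstract derives from wavefront data.

def box_blur(grid, radius):
    """Average each cell over a (2*radius+1)-wide square, using only in-bounds neighbours."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += grid[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def simulate_vision(image, depth, plane_depths, plane_radii):
    """Blur `image` plane by plane according to `depth`, then composite the layers.

    `plane_depths` and `plane_radii` must have the same length: one assumed
    blur radius per depth plane.
    """
    h, w = len(image), len(image[0])

    def nearest_plane(d):
        return min(range(len(plane_depths)), key=lambda i: abs(plane_depths[i] - d))

    # Stratification: label every pixel with its nearest depth plane.
    labels = [[nearest_plane(depth[y][x]) for x in range(w)] for y in range(h)]

    colour_sum = [[0.0] * w for _ in range(h)]
    weight_sum = [[0.0] * w for _ in range(h)]
    for i, radius in enumerate(plane_radii):
        # Depth image for plane i: its own pixels, zero elsewhere, plus a coverage mask.
        mask = [[1.0 if labels[y][x] == i else 0.0 for x in range(w)] for y in range(h)]
        layer = [[image[y][x] * mask[y][x] for x in range(w)] for y in range(h)]
        # Blur the layer and its mask with the same filter.
        blurred_layer = box_blur(layer, radius)
        blurred_mask = box_blur(mask, radius)
        for y in range(h):
            for x in range(w):
                colour_sum[y][x] += blurred_layer[y][x]
                weight_sum[y][x] += blurred_mask[y][x]

    # Composition: normalise by accumulated coverage so a uniform image stays uniform.
    return [[colour_sum[y][x] / weight_sum[y][x] for x in range(w)] for y in range(h)]
```

Calling `simulate_vision(image, depth, [1.0, 5.0], [0, 2])` would, under these assumptions, leave pixels near depth 1.0 sharp and blur those near depth 5.0. A faithful implementation would replace `box_blur` with filters computed from the measured aberration data.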

VSI provides images and videos of simulated vision to enable a patient's eye

doctor to see the specific visual anomalies of the patient. In addition to

blur, VSI could reveal to the doctor the multiple images or distortions present

in the patient's vision that would not otherwise be apparent from standard

visual acuity measurements. VSI could educate medical students as well as

patients about the particular visual effects of certain vision disorders (such

as keratoconus and monocular diplopia)

by enabling them to view images and videos that are generated using the optics

of various eye conditions. By measuring PRK/LASIK patients pre- and post-op,

VSI could provide doctors with extensive, objective information about a

patient's vision before and after surgery. Potential candidates

contemplating surgery could see simulations of their predicted vision and of

various possible visual anomalies that could arise from the surgery, such as

glare at night. The current protocol, where patients sign a consent form that

can be difficult for a layperson to understand fully, could be supplemented by

the viewing of a computer-generated video of simulated vision showing the

possible visual problems that could be engendered by the surgery.

Biography

Brian A. Barsky is Professor of Computer Science and

Affiliate Professor of Optometry and Vision Science at the

He was a Directeur

de Recherches at the Laboratoire d'Informatique Fondamentale de Lille (LIFL) of

l'Université des Sciences et Technologies de Lille (USTL). He has been a Visiting Professor of

Computer Science at The Hong Kong University of Science and Technology in Hong

Kong, at the University of Otago in Dunedin, New

Zealand, in the Modélisation Géométrique

et Infographie Interactive group at l'Institut de Recherche en Informatique de Nantes and l'Ecole

Centrale de Nantes, in Nantes, and at the University

of Toronto in Toronto. Prof. Barsky was a

Distinguished Visitor at the School of Computing at the National University of

Singapore in Singapore, an Attaché de Recherche Invité at the Laboratoire Image

of l'Ecole Nationale Supérieure des Télécommunications

in Paris, and a visiting researcher with the Computer Aided Design and

Manufacturing Group at the Sentralinstitutt for Industriell Forskning (Central

Institute for Industrial Research) in Oslo.

He attended

He is a co-author of the book An Introduction to Splines

for Use in Computer Graphics and Geometric Modeling, co-editor of the book

Making Them Move: Mechanics, Control, and Animation of Articulated Figures, and

author of the book Computer Graphics and Geometric Modeling Using Beta-splines. He has published 120 technical articles in this

field and has been a speaker at many international meetings.

Dr. Barsky was a recipient of an IBM

Faculty Development Award and a National Science Foundation Presidential Young

Investigator Award. He is an area editor for the journal Graphical Models. He

is the Computer Graphics Editor of the Synthesis digital library of engineering

and computer science, published by Morgan & Claypool Publishers, and the

Series Editor for Computer Science for Course Technology, part of Thomson

Learning. He was the editor of the Computer Graphics and Geometric Modeling

series of Morgan Kaufmann Publishers, Inc. from December 1988 to September

2004. He was the Technical Program Committee Chair for the Association for

Computing Machinery / SIGGRAPH '85 conference.

His research interests include computer aided geometric design and modeling,

interactive three-dimensional computer graphics, visualization in scientific

computing, computer aided cornea modeling and visualization, medical imaging,

and virtual environments for surgical simulation.

He has been working in spline curve/surface

representation and their applications in computer graphics and geometric

modeling for many years. He is applying his knowledge of curve/surface

representations as well as his computer graphics experience to improving videokeratography and corneal topographic mapping, forming

a mathematical model of the cornea, and providing computer visualization of

patients' corneas to clinicians. This has applications in the design and

fabrication of contact lenses, and in laser vision correction surgery. His

current research, called Vision-Realistic Rendering, is developing new

three-dimensional rendering techniques for the computer generation of synthetic

images that will simulate the vision of specific individuals based on their

actual patient data using measurements from a

Shack-Hartmann wavefront aberrometry device. This research forms the OPTICAL (OPtics and Topography Involving Cornea and Lens) project.