Teaching Portfolio

KAN Min-Yen

Department of Computer Science

National University of Singapore

9 September 2008

Foreword

This document may be longer than the usual teaching portfolio, but I hope you will be able to navigate it with the help of the index. Where it is important to distinguish between the original material and my annotations, I have included my annotations in a box such as this one. Please read these comments, as they explain my reasoning for including each item in the teaching portfolio. The exception is when I have included presentation slides, in which case my annotations appear as normal text.

Kan Min-Yen

9 September 2008

Table of Contents

Philosophy........................................................................................................................ 3

Treat as a peer............................................................................................................. 3

Engage their thinking and creativity......................................................................... 3

Ask them to help you................................................................................................... 4

Sample Materials from Module Folders....................................................................... 6

Samples from CS 3243 – Foundations of Artificial Intelligence.......................... 7

Samples from CS 6210 / 5244 – Digital Libraries............................................... 24

Selected Student Feedback........................................................................................ 33

Appendix A – Emails on slide development............................................................. 36

Appendix B – IOI / ACM Training Slides.................................................................... 38

Appendix C – Teaching Practicum report excerpt................................................... 39

Appendix D – Beginning research presentation excerpt....................................... 42

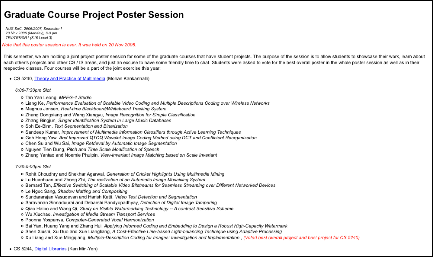

Appendix E – Graduate Research Course Joint Poster Seminar........................ 43

Appendix F – Examples of Discussion Questions................................................... 44

The corresponding complete student feedback for all of the modules I have taught can be found in the section following this one (Student Feedback). The module folders (Mod. Folder CS5244, Mod. Folder CS5246, Mod. Folder CS3243) found in the subsequent tabs give a complete inventory of:

- Web pages (which include syllabi and course objectives)

- Presentation slides

- Sample final exams

Philosophy

This is an expanded version of my teaching philosophy statement found in the main dossier. It contains additional evidence and claims to support my teaching beliefs.

By any rights, I am fairly new to formal teaching, but I have been tutoring friends and family throughout my life. Since tutoring is, by definition, individual instruction, it may seem that best practices in tutoring would not extend well to teaching. To some extent this is true: many aspects of teaching many students cannot be handled the way one tutors a single student. However, the principles that I used to tutor effectively can be adapted to produce effective teaching.

My teaching philosophy is to view teaching as tutoring many students simultaneously.

What does this mean? When I first developed this idea, it was a mental kludge to keep myself from being nervous about being watched and assessed by so many students. I found it easier to think of the class as a single student, whose opinion, understanding and interest level were a product of all of the students in the class. This let me interact more naturally with the students and freed me from the grip of nervousness.

While I don’t have as many problems with nervousness as I did previously, the concept of tutoring remains a powerful one, with many strong analogies that I believe hold true of great teaching as well. Three components of effective tutoring sum up my approach rather nicely.

Treat as a peer

A tutor treats their student as a peer, not as an individual of lower standing. This means that as tutors and teachers, we extend to our students the rights and respect that we would demand of and give to our peers. Examples of this start with basic courtesy: remembering their names when possible and encouraging them to address me by first name.

“… and he even knows every student by name (17 students)”

[ from my last year’s annual review ]

Treating students as peers also

means that they should know right from wrong. I make it very clear in my upper-class modules that I trust

their judgment. I pointedly

encourage students to openly discuss any homework problem and projects, but ask

that they do not take away any form of notes (electronic or otherwise). In my classes, this has come in the

form of the Gilligan’s Island Rule (as can be seen in my CS 3243 module folder,

page 11), which I discuss and append to the course website every semester. This level of trust is reciprocated

back, which I feel helps to bond my students to me. Another example is in the way I deal with student

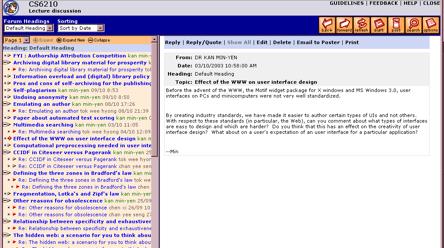

emails. When a student email

touches a subject that I think may be of interest his fellow students, I will

anonymize the email and post the email and my reply to the IVLE forum. I tell the students about this policy

in the beginning of the semester, and I take special care to avoid posting any

personal information. I feel that

this encourages students to ask more questions, as they see that some of their

concerns have been voiced by others.

As a student put it nicely:

“I like the way he 'broadcasts' emails sent to him regarding the module in IVLE. Other lecturers don't do that. I feel that his style will benefit most people.”

[ CS 3243 feedback ]

One may believe that remembering students’ names and interests lies far from the tenets of good teaching. I have found that knowing the students better often yields better learning outcomes, as students tune in to lectures and expect to be engaged as peers, rather than lectured at.

Engage their thinking and creativity

When an instructor steps off the podium and enters the class as a peer, what is required of the instructor changes as well. When tutoring students one on one, I seek to engage my peer and try to get them to think of issues in the larger context, as well as of relevant examples. After all, when tutoring, we don’t just want to help the student solve a particular problem, but rather to instill in them how to solve problems independently of us. To do this, we must continually search for relevant materials to share with our students. An example of this is the introduction of a new lecture on new media in my Digital Libraries class. Blogging, instant messaging and SMSing have become new outlets for communication and knowledge exchange. These technologies are used by virtually every student, but reflecting upon them in the course setting allows students to think about the technology from the fresh perspective of the evolution of knowledge exchange, and not just from a user’s perspective.

Another example is my offering a choice of second homework assignment in my Artificial Intelligence class. Students have different interests, and A.I. covers a wide swath of material. Instead of constructing a single homework assignment, I constructed three different ones. Students assigned themselves to an assignment involving either vision, robotics or language processing. This extra effort paid off in the way students perceived and reacted to the assignments:

He is one of the nicest lecturers I've met so far in my 2 years university learning period. I nomite him for his professionalism and patience to us students. Another remarkable point is that he really THINKS how this module can improve, we can see that through the way he designs projects and so on. Thanks!

[ CS 3243 feedback, emphasis due to the student ]

Is creating choice valuable to students? The answer is yes. My teaching practicum project focused on this question, and as I think it is relevant to the teaching portfolio, I have included it as Appendix C.

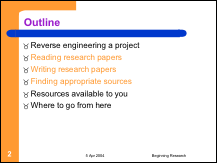

In tutoring, we are taught to guide the student rather than solve the problems for them. This is true of the most valued teaching as well. To this end, I like to guide my HYP and UROP students through the beginning steps of their research, as shown in Appendix D, in which I deconstruct the qualities of a good undergraduate project. I also use the outline that I give to my new project students to guide the students in my postgraduate course.

Ask them to help you

After all, your students are your peers, so they can help you improve your teaching. This has taken two forms for me thus far: the mid-term instructor evaluation and the final evaluation with my project students.

As prescribed by CDTL, the mid-term evaluation is a great device for getting constructive criticism on teaching skills. In past semesters, I implemented this on my own (see the module folder for CS 6210), but in the past semester, CITA initiated its own midterm feedback. I opted at that time to use their midterm feedback form rather than my own. In hindsight, this was a mistake, as the CITA form encouraged quantitative evaluation rather than constructive qualitative feedback.

Feedback from a previous semester showed me that students who came to class late (sometimes due to circumstances beyond their control) wanted a webcast so they could view the key points they had missed. As such, I have mandated that all of my classes be webcast, so that students have control over their learning process. This has been effective with graduate students as well, as many taking my current module are part-time, off-campus students. While many do take the pains to come to class, I understand the cost of doing so, and would like to make attending class feasible for any student who wishes to learn.

With my research project students, I am lucky to interact with them over a longer period of time and one-on-one. I conduct a final exit interview after the UROP and HYP deadlines are over to get their frank feedback about their work and my method of advisement. Building a good rapport and sense of openness with my students is crucial for any supervisor. My undergraduate project students have told me informally that they feel that they get a lot of attention from me, as I meet with each of them for an hour every two weeks. While this consumes a large portion of my time (18 undergraduate students = 9 hours per week), my expectations of them are high and they have risen to my challenge.

Min-Yen Kan

9 September 2008

Sample Materials from Module Folders

Rather than present all of the course material, I have extracted a few selections from the components of each module and compiled some notes to guide your assessment.

You can find all content of the module in IVLE and on the class home pages. I do not remove past years’ homepages; rather, I use a different set of web pages each year to reflect the differences between offerings of the class.

If you do not have the time to review the entire module folder, I suggest that you read the highlights given in the summaries.

Samples from CS 3243 – Foundations of Artificial Intelligence

Aims and Objectives

Syllabus

Teaching-Learning Materials

Continual and Final Assessment

Homework #1 - Pipe dream

Implementation

Getting a feel for the assignment

Compiling and submitting the assignment

HW 1 - Excerpt from the high score board

Scores per agent

Comments from Students on HW 1

Homework #2 - Implementing a Robotic Arm using LEGO Mindstorms

Background

Task Specification

Software Design and Implementation

Hardware Design and Implementation

Testing the Robotic Arm

(Bonus part): Online Planning

Resources

Pictures from their work

Final Exam Questions

Summary

Samples from CS 6210 / 5244 – Digital Libraries

Course Description

Aims and Objectives

Modes of Learning

Textbook and Required Readings

Syllabus

Teaching-Learning Materials

Continual and Final Assessment

Homework 2 - Authorship Detection

Details

What to turn in

Grading scheme

Summary

Samples from CS 3243 – Foundations of Artificial Intelligence

In A.I., I strove to add value to an existing course. As mentioned, the curriculum was revamped to center on two interesting homework assignments. The second assignment, in particular, offered a choice of three different assignments in different areas. We (the TA and I) had to offer some pre-recorded lectures (see the Syllabus, page 12) to let students pursue their own interests.

Aims and Objectives

I use IVLE for temporally sensitive things, but I place the majority of the coursework material directly on the web, allowing students from other universities to access this information. This has paid off tremendously, as I have received comments from instructors and students alike asking for materials.

We will be using the Integrated Virtual Learning Environment (IVLE) for forum discussions, announcements, homework submissions, and other temporally-sensitive materials. Basic course administration, lecture and tutorial notes will be available on this publicly-accessible webpage.

Description (from the course bulletin): The module introduces the basic concepts in search and knowledge representation as well as a number of sub-areas of artificial intelligence. It focuses on covering the essential concepts in AI. The module covers the Turing test, blind search, iterative deepening, production systems, heuristic search, the A* algorithm, minimax and alpha-beta procedures, predicate and first-order logic, resolution refutation, non-monotonic reasoning, assumption-based truth maintenance systems, inheritance hierarchies, the frame problem, certainty factors, Bayes' rule, frames and semantic nets, planning, learning, natural language, vision, expert systems, and LISP.

Prerequisites: CS1102 Data Structures and Algorithms, and either CS1231 or CS1231S Discrete Structures

Modular credits: 4

Workload: 2-1-0-3-3

Our textbook will be the same as last semester's. You can buy it from your seniors or from the NUS Co-op. Make sure you get the second edition.

- Stuart Russell and Peter Norvig (2003) Artificial Intelligence: A Modern Approach, Prentice Hall, 2nd Edition.

Copies are also on reserve (RBR) at both the Science Library and the Central Library.

Note to NUS-external visitors: Welcome! If you're a fellow AIMA instructor looking for MS PowerPoint versions of the AIMA slides, you can see the syllabus menu item on the left for a preview; please contact me for the original .zip file.

Here, I want to highlight my trust in my students. In this section, you can read my discussion of the academic honesty policy and my policy of using the Gilligan’s Island Rule to encourage collaboration while letting students police themselves about the appropriate level of collaboration.

Academic Honesty Policy

(Updated Thu Jul 24 14:15:05 GMT-8 2003)

Collaboration is a very good thing. Students are encouraged to work together and to teach each other. The extra-credit programming homework will require a team effort in which everyone is expected to contribute.

On the other hand, cheating is considered a very serious offense. Please don't do it! Concern about cheating creates an unpleasant environment for everyone.

So how do you draw the line between collaboration and cheating? Here's a reasonable set of ground rules. Failure to understand and follow these rules will constitute cheating, and will be dealt with as per University guidelines. We will be policing the policy vigorously.

You should already be familiar with the University's academic code. If you haven't yet, read it now.

- The Gilligan's Island Rule: This rule says that you are free to meet with fellow student(s) and discuss assignments with them. Writing on a board or shared piece of paper is acceptable during the meeting; however, you may not take any written (electronic or otherwise) record away from the meeting. This applies when the assignment is supposed to be an individual effort. After the meeting, engage in a half hour of mind-numbing activity (like watching an episode of Gilligan's Island -- an old American T.V. show, just in case you haven't heard of it) before starting to work on the assignment. This will ensure that you are able to reconstruct what you learned from the meeting, by yourself, using your own brain.

- The Freedom of Information Rule: To assure that all collaboration is on the level, you must always write the name(s) of your collaborators on your assignment. Failure to adequately acknowledge your contributors is at best a lapse of professional etiquette, and at worst it is plagiarism. Plagiarism is a form of cheating.

- The No-Sponge Rule: In intra-team collaboration where the group, as a whole, produces a single "product", each member of the team must actively contribute. Members of the group have the responsibility (1) to not tolerate anyone who is putting forth no effort (being a sponge) and (2) to not let anyone who is making a good faith effort "fall through a crack" (to help weaker team members come up to speed so they can contribute). We want to know about dysfunctional group situations as early as possible. To encourage everyone to participate fully, we make sure that every student is given an opportunity to explain and justify their group's approach.

This section on academic honesty is adapted from Surendar Chandra's course at the University of Georgia; he in turn acknowledges Prof. Carla Ellis and Prof. Amin Vahdat at Duke University for the policy formulation. The origin of the Gilligan's Island Rule is uncertain, but it can be traced back at least to Prof. Dymond's use of it at York University in 1984.

Syllabus

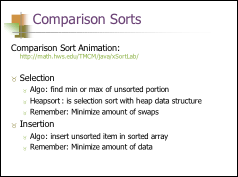

Here you can see the added, pre-recorded lectures that we had to prepare to accommodate the choice of homework assignments we offered. They are highlighted in red.

(Last updated on: Tue Mar 23 19:37:10 GMT-8 2004) The exam begins at 2:30 pm on the 23rd of April, not at 2:00 pm as previously announced. Thanks!

Your teaching staff will try their best to provide you with detailed assessment marks within three weeks of the submission due date. You are welcome to debate/argue for any extra points that you feel you deserve within one week after the initial grades have been released.

Any questions about this information should be directed to the general forum on IVLE. The lecture notes have been adapted from earlier notes by Prof. Ng Hwee Tou and Prof. Tan Chew Lim, which in turn were adapted from the original slides by Stuart Russell and Peter Norvig. Our teaching assistant, Mr. Huang Weihua, contributed the slides for the optional lectures on vision and robotics. There's also a good set of complementary notes from the MIT OpenCourseWare project. You might want to consult and/or print them out. Thanks to those of you who took the time during the midterm feedback to point this out!

Exam Date: 23 April 2004 (Afternoon)

| Date | Description | Deadlines |
|---|---|---|
| S1: 7 Jan | Introduction to AI and Agents: Orientation (v 1.0) [ .pdf ] [ .htm ] | IVLE survey |
| S2: 14 Jan | Blind Search: Solving problems by searching (v 1.0, Chapter 3) [ .pdf ] [ .htm ] | |
| S3: 21 Jan | Informed Search: Informed Search and Exploration (v 1.0, Chapter 4) [ .pdf ] [ .htm ] | Tutorials begin |
| S4: 28 Jan | Game Playing | |
| S5: 4 Feb | Constraint Satisfaction: Constraint Satisfaction Problems (v 1.1, Chapter 5) [ .pdf ] [ .htm ] | Homework 1 due |
| S6: 11 Feb | Midterm: Introduction to Advanced Topics (see misc for videos) [ .pdf ] [ .htm ]; Computer Vision (v 1.0, Chapter 24) [ .pdf ] [ .htm ] | Midterm test: Closed Book |
| S7: 18 Feb | Logical Agents and Propositional Logic | Team Homework 2 released. |
| S8: 25 Feb | Logical Agents and Propositional Logic (cont'd, Addendum to previous week's notes) [ .pdf ] [ .htm ] | |
| S9: 3 Mar | First Order Logic: First-Order Logic (v 1.2, corrected slide 16, Chapter 8) [ .pdf ] [ .htm ] | |
| S10: 10 Mar | Uncertainty: Uncertainty (v 1.1, Chapter 13) [ .pdf ] [ .htm ] | |
| S11: 17 Mar | Learning: Learning from Observations (v 1.1, Chapter 18) [ .pdf ] [ .htm ] | Team Homework 2 due (extended by one week as of Mon Feb 16 17:50:22 GMT-8 2004) |
| S12: 24 Mar | Exam Revision (Check out misc for past exams) | Last Tutorial |
| 23 Apr | Final Exam (Afternoon / Closed book; one A4 double-sided sized sheet allowed with notes) | |

Min-Yen Kan <kanmy@comp.nus.edu.sg> Created on: Mon Dec 1 19:36:22 2003 | Version: 1.0 | Last modified: Sat Mar 27 16:17:08 2004

Teaching-Learning Materials

Most of the lecture material in the course came from previous semesters' work by Ng Hwee Tou and Tan Chew Lim. Therefore, I have not shown instances of the slides used for the course. My contribution here was to translate the course slides from SliTeX to PowerPoint. This may not sound like much work, but it was a sorely needed addition to the teaching materials for the community. I refer you to Appendix A for my contribution.

Continual and Final Assessment

I’ve included the first assignment on game playing, and one of the three choices for the second assignment (Robotics: construct a robotic arm). I’ve also included portions of my final exam to illustrate the types of questions that I set. My former advisor also asked for the homework assignments to see whether she could use them in her class (see email on page 27).

Homework #1 - Pipe dream

With HW 1, the students had to implement a game player. Some students told me that they spent many hours on it because they were motivated to do well. I’ve included part of the high score board to showcase their efforts.

(Adapted from the original Japanese arcade game in 1990)

For this

homework, you will implement a game playing agent to play the solitaire game,

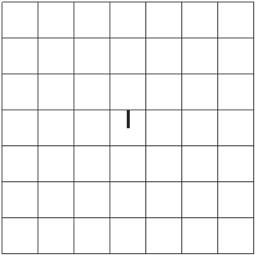

Pipe Dream. In Pipe Dream, the goal is to lay pipe to divert ooze from a

gushing sewer main break into a system of pipes to keep it from leaking out as

long as possible. Your score is the final determiner of whether your agent does

well or not.

The board is a 7x7 grid in which a pipe tile can be placed in each square. The pipe tiles can be placed anywhere on the grid and come in seven different configurations: a vertical pipe, a horizontal pipe, a cross (a vertical and horizontal pipe stacked together) and four elbow pipes. Pipe tiles are provided one at a time, in some order. In addition to knowing the current piece to be placed, the agent has access to the queue of the next 4 tiles to be placed. The ooze originates from the center tile and flows towards the top.

The initial layout at time 0.

From t=0 through t=6, no ooze emerges from the center source tile. Subsequently, the ooze moves through one pipe tile every 3 units of time. Ooze can be directed through a cross pipe in both directions. The grid's edge is the boundary; any pipe ends facing the edge cannot be connected to additional pipe.

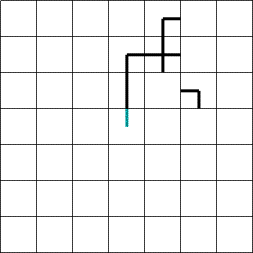

The ooze is starting to flow at time 6. If there is no suitable tile above the source tile by t=9, the game is over.
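The timing rules above can be turned into a simple deadline calculation. Reading them as "the ooze reaches the tile above the source at t=9 and then advances one tile every 3 time units", the k-th tile along the ooze's path is reached at t = 6 + 3k. The sketch below is a hypothetical helper (the class and method names are illustrative, not part of the pipedream package) that an agent could use to see how much time remains before a given tile must be in place.

```java
// Hypothetical helper, assuming the ooze leaves the source at t=6
// and then advances one tile every 3 time units.
class OozeTiming {
    // Time unit at which the ooze starts to flow out of the source tile.
    static final int START = 6;
    // Time units the ooze needs to advance by one tile.
    static final int STEP = 3;

    // Time at which the ooze reaches the k-th tile of its path
    // (k = 1 is the tile directly above the source).
    static int arrivalTime(int k) {
        if (k < 1) {
            throw new IllegalArgumentException("k must be at least 1");
        }
        return START + STEP * k;
    }

    // Time units remaining at time 'now' before the k-th tile is reached.
    // A negative value means that deadline has already passed.
    static int slack(int k, int now) {
        return arrivalTime(k) - now;
    }
}
```

For example, arrivalTime(1) is 9, matching the t=9 deadline above, and arrivalTime(3) is 15. At t=10, slack(2, 10) is 2, so two time units remain before the second tile of the path must be in place. The constants are kept separate so the sketch is easy to adjust if the rules are read differently.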

At each time unit, the agent can do one of the following actions, all of which take a unit of time to perform:

· Place the current tile at an empty x,y coordinate

· Destroy an occupied tile at a filled x,y coordinate (only possible if ooze has not started to flow through the tile yet)

· Do nothing

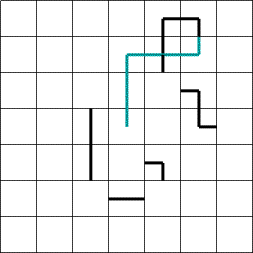

At time 18, this agent has laid some pipe and waited for some time units.

Note: You cannot directly discard a pipe tile; you must place it on an available spot on the grid and then destroy it. If your agent specifies an invalid action, a unit of time will still elapse.

The game ends when the ooze spills out of the pipe (because your agent didn't lay an appropriate tile at the outflow coordinate, or because the flow ran off the edge of the grid), or when the entire grid is filled with pipe. The agent's goal is to maximize its final score (the score is the agent's utility).

Your agent's score is calculated as follows:

· Each tile the ooze flows through: +2

· Bonus for each cross tile the ooze flows through both ways: +5 (a total of (2x2)+5 = 9)

· Each destroyed tile: -3

Note that tiles that are laid but never oozed through do not factor into the agent's score at all.
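The scoring rules can be written as a single formula. The "(2x2)+5 = 9" total for a cross suggests that a cross tile oozed both ways earns the +2 tile credit once per direction, plus the +5 bonus. The sketch below therefore counts traversals rather than tiles. The class PipeScore and its parameters are hypothetical illustrations, not the grading harness's code.

```java
// Hypothetical scoring helper, assuming a cross tile oozed both ways
// counts as two traversals (2 x 2) plus the cross bonus.
class PipeScore {
    static final int PER_TRAVERSAL = 2; // each pass of the ooze through a tile
    static final int CROSS_BONUS = 5;   // extra for a cross tile oozed both ways
    static final int PER_DESTROY = -3;  // penalty for each destroyed tile

    // passes:    number of tile traversals by the ooze (a cross tile
    //            traversed in both directions counts as two)
    // crossBoth: number of cross tiles traversed in both directions
    // destroyed: number of tiles the agent destroyed
    static int score(int passes, int crossBoth, int destroyed) {
        return PER_TRAVERSAL * passes + CROSS_BONUS * crossBoth + PER_DESTROY * destroyed;
    }
}
```

Take a path through four straight tiles and one cross traversed in both directions, with one tile destroyed along the way. It gives score(6, 1, 1) = 2x6 + 5 - 3 = 14. A lone cross oozed both ways gives score(2, 1, 0) = 9, which matches the total stated above.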

Implementation

Your agent will play this game by way of a single Java class that extends the abstract class Player. Most significantly, the Player class has the public method play(). play() takes a single argument, Driver d, an instance of the Driver class, which enables you to get the state of the game components: the queue, the board and information about the next ooze.

play() will return an Action object that encodes the agent's action and, when applicable, its coordinates. The class Action has the following methods:

· setAction (char action): sets the action of the agent for this time unit t to one of the three actions: 'p' place current tile, 'd' destroy tile, 'w' wait. The default value of action is 'w' wait.

· setX (int x): sets the x coordinate for the action's location. Valid values will be between 0 and 6.

· setY (int y): sets the y coordinate for the action's location. Valid values will be between 0 and 6.

· constructor Action (char action, int x, int y): the obvious constructor. It takes the values provided and installs them in the appropriate fields.

Note that setting the X and Y coordinates does not check whether the action would actually be valid (for example, placing a tile on a square that is already occupied). It is your responsibility to generate an agent that constructs a valid Action object.

Queue pieces are represented by tiles, which take on one of the following seven character values: '+' <cross piece>, '|' <vertical pipe>, '-' <horizontal pipe>, 'q' <tile with left and up outlets>, 'e' <pipe with up and right outlets>, 'c' <right and down outlets>, or 'z' <down and left outlets> (it may be helpful to visualize this on a QWERTY keyboard with 's' in the center).

The board has two additional types of pieces that can appear: ' ' <empty space> and '\'' <starting tile, with ooze coming out northwards>.
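An agent can turn the tile encoding into a lookup from each character to its open sides. It also needs a function that says where the ooze leaves a tile, given the side it entered through. The sketch below is one such hypothetical helper (PipeTiles, Side and exitSide are illustrative names, not part of the pipedream package). It assumes the ooze passes straight through a cross tile, as the rule about crossing in both directions suggests.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical helper mapping the tile characters above to their outlets.
class PipeTiles {
    // The four sides of a grid square.
    enum Side {
        N, E, S, W;

        // N <-> S and E <-> W.
        Side opposite() {
            return values()[(ordinal() + 2) % 4];
        }
    }

    // Open sides of each tile character, as described in the assignment.
    static Set<Side> outlets(char tile) {
        switch (tile) {
            case '+':  return EnumSet.allOf(Side.class);     // cross
            case '|':  return EnumSet.of(Side.N, Side.S);    // vertical
            case '-':  return EnumSet.of(Side.E, Side.W);    // horizontal
            case 'q':  return EnumSet.of(Side.W, Side.N);    // left and up
            case 'e':  return EnumSet.of(Side.N, Side.E);    // up and right
            case 'c':  return EnumSet.of(Side.E, Side.S);    // right and down
            case 'z':  return EnumSet.of(Side.S, Side.W);    // down and left
            case '\'': return EnumSet.of(Side.N);            // starting tile
            default:   return EnumSet.noneOf(Side.class);    // ' ' empty or unknown
        }
    }

    // Side through which ooze leaves a tile it entered via 'entry',
    // or null if the tile cannot accept ooze from that side.
    static Side exitSide(char tile, Side entry) {
        Set<Side> open = outlets(tile);
        if (!open.contains(entry)) {
            return null;
        }
        if (tile == '+') {
            return entry.opposite(); // assumed: ooze goes straight through a cross
        }
        for (Side s : open) {
            if (s != entry) {
                return s; // an elbow or straight pipe has exactly one other outlet
            }
        }
        return null;
    }
}
```

For instance, ooze flowing north out of the source enters the square above through that square's S side. exitSide('c', Side.S) returns E, so a 'c' tile placed there would turn the flow to the right. exitSide('|', Side.E) returns null, because a vertical pipe has no east outlet.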

For convenience, in implementing the agent class, you may also call the following accessor methods through the Driver class instance to get the state of the game:

· int getTimeToNextOoze (): returns the number of turns left before the ooze moves forward another square.

· int getTime (): returns the number of turns elapsed since the start of the game.

· int getScore (): returns the agent's score so far.

· int getNextOozeSpotX (): returns the X coordinate of the next spot where the ooze will move to.

· int getNextOozeSpotY (): returns the Y coordinate of the next spot where the ooze will move to.

· char[] getQueue (): returns a 5-element array containing the pieces in the queue to be placed. The piece at index 0 is the current piece to be placed.

· char[][] getMap (): returns a 7 by 7 element array containing the pieces on the map.
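The Action class does not check whether an action makes sense on the current board, so an agent may want its own sanity check before returning from play(). The sketch below is a hypothetical helper (ActionCheck and isValid are illustrative names) that works on the 7 by 7 char array returned by getMap(). It makes two assumptions that the description above does not settle: the array is indexed as map[x][y], and the starting tile cannot be destroyed. It also cannot tell from the map alone whether ooze has already entered a tile, so a destroy action on an oozed tile would still pass.

```java
// Hypothetical sanity check for an action, assuming map[x][y] indexing,
// ' ' for an empty square and '\'' for the starting tile.
class ActionCheck {
    static final int SIZE = 7; // the board is a 7x7 grid

    static boolean isValid(char action, int x, int y, char[][] map) {
        if (action == 'w') {
            return true; // waiting needs no location
        }
        if (x < 0 || x >= SIZE || y < 0 || y >= SIZE) {
            return false; // coordinates must lie between 0 and 6
        }
        char cell = map[x][y];
        if (action == 'p') {
            return cell == ' '; // place only on an empty square
        }
        if (action == 'd') {
            // Destroy only an occupied, non-source square. Whether ooze has
            // already entered the tile is not visible here and is not checked.
            return cell != ' ' && cell != '\'';
        }
        return false; // unknown action code
    }
}
```

An agent could call isValid(action, x, y, d.getMap()) on its chosen move and fall back to a wait action ('w') if the check fails.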

Additional accessor and print methods are available to you in this assignment. The full documentation for the Java package is available; examine its contents carefully. Also available to you is a sample class, MyPlayer.java, which shows how to compile your agent into an actual game execution.

Getting a feel for the assignment

By all means, play the game a bit to get an idea of what types of heuristics and search might be useful. You can try my implementation of the Strategic Pipe Dreams Framework. It'll probably be one of the few times you can tell your parents that you must play games in order to do your homework!

Compiling and submitting the assignment

When the pipedream package is released, you'll have to do the following to compile and run it.

To compile, you'll need both pipedream.jar (Version 1.1, updated Mon Jan 26 18:38:21 GMT-8 2004) and the directory containing your implementation of the MyPlayer class in your classpath. Assuming MyPlayer.java is in the current directory:

javac -classpath .:pipedream.jar MyPlayer.java

To execute the game, you do something similar:

java -cp .:pipedream.jar MyPlayer

You should submit your working version of the agent by midnight on the due date, 4 Feb. The IVLE workbin will accept late submissions. Late submissions are subject to the late submission policy (read it carefully). Your class should be named MyPlayer and should be in a file named MyPlayer.java. Your work should be done independently; see our class's section on the Academic Honesty Policy if you have questions about what that entails.

HW 1 - Excerpt from the high score board

I allowed students to identify their submissions with a tagline and then posted the test results publicly. Here are some of them; several of the taglines are quite humorous.

N.B. - This is the default score as retrieved by the testing harness. The game players are run over 200 random test cases. The scores here do not account for time and computation limits, so your actual mileage (and grade) may vary.

Read in 158 agent(s) scores and 156 identities.


Scores per agent

| Average Score | Agent Tagline |
|---|---|
| 59.68 | Game playing is fun! But making agents to play for me is not fun! :P |
| 57.38 | My agent is smart... |
| 54.68 | Game Programming is FUN! |
| 53.38 | When testing, please initialized a new Player to play each game. you are really appreciated |
| 47.50 | ph34r M01 l33t 5k1\|\|z!!! |
| 47.31 | If at first you don't succeed, you are running about average. |
| 44.75 | This assignment is fun yoo! |
| 43.79 | PipeDream agent using searching and heuristics |
| 42.00 | This is the PipeDream Programme written by NAME, MATRIC, SoC Year 1. |
| 40.07 | Game playing is fun! Making agents to play for me is even more fun! |
| 39.34 | Game playing is painful! Making agents to play is even more miserable! |
| 38.23 | Game playing is fun! Making agents to play for me is even more fun! |
| 38.16 | Making agents to play for me is \*\_\*? >_ |

Comments from Students on HW 1

From: GAN HIONG YONG

Date: 06/02/2004 10:54:00 PM

Topic: OMG the scoreboard

60+??? 50+??? (maybe the lecturer's and tutor's agents join in the fun? lol)

From: NGUYEN THUY TRAM

Date: 05/02/2004 12:02:00 AM

Topic: quite interesting... ^^

Yo...haha.. homework 1 is over.. i have something to submit ..i thought i couldnt implement it, but i could...not bad Arghh..but have to say good luck to me haha.. And good luck to ya guys toooooo..

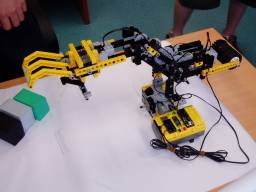

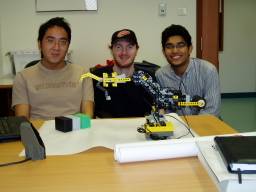

Homework #2 - Implementing a Robotic Arm using LEGO Mindstorms

In

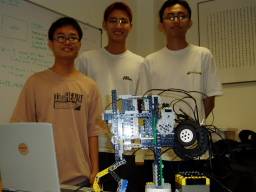

Homework #2 (robotics variant), they had to implement a robotic arm. I’ve included some pictures of the

resulting work by the students.

- Homework #2R is over. See the pictures of the LEGO Mindstorms robots to view what the students have created.

You should submit your working version of the assignment by midnight on the due date. The IVLE workbin will accept late submissions; late submissions are subject to the late submission policy (read it carefully). Your submission should contain a plain .txt file called students.txt, which should have the same format as given in the hyperlink (in fact, why don't you save the linked file now and adapt it before you submit it?). Make sure your files are in plain ASCII text format -- MS Word files or other word processing files will NOT be accepted. Your team's work should be done independently of other groups; see our class's section on the Academic Honesty Policy if you have questions about what that entails.

This homework specification was written by Huang Weihua. Please address your questions, concerns and comments about it to him.

Background

The LEGO Mindstorms product is a robot-kit-in-a-box. It consists of a computer module called the RCX (Robotic Command eXplorer) and an inventory of many LEGO TECHNIC parts. It also provides the sensors and electric motors that are essential to a physical agent.

The following sensors are provided:

- One light sensor, which measures light intensity.
- Two touch sensors, which detect events such as clicked, pressed and released.

Using the LEGO Mindstorms kit, various robots can easily be created, including mobile robots (with either legs or wheels), robotic arms, etc. In this assignment, you will gain hands-on experience by actually building and programming a robotic arm using LEGO Mindstorms. Some examples of such robotic arms are shown below:

Examples of LEGO Robotic Arms

Task Specification

The basic function of a robotic arm is to extend itself to a certain location in the environment and then perform an action such as grabbing or releasing an object. If a robotic arm works in a blocks world (see textbook pg. 446), then its task is to move the blocks in a planned sequence to reach the goal state.

To simplify our task, we may assume that there

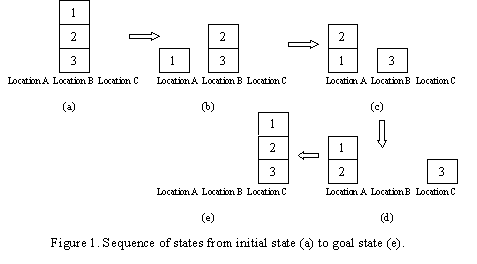

are only three locations A, B and C that can be used to put blocks. The blocks

in the initial state are put in one of the locations, as shown in Figure 1(a).

In the goal state, the blocks are all shifted to another location, and the

order among the blocks may change. The first task is to formulate the state

representation by determining how you are going to reflect the locations of the

blocks and the order among the blocks. Using the state representation, how do

you represent the state in Figure 1(a)? How do you represent the state in

Figure 1(e)?
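As an illustration only, and not the representation the assignment requires, one option is to map each location to a stack of numbered blocks stored bottom to top. The class name and the example state below are hypothetical. The example puts three blocks at B with block 1 on top, and choosing B is arbitrary.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** A blocks-world state: each location holds a stack, stored bottom to top. */
class BlocksState {
    final Map<Character, List<Integer>> stacks = new LinkedHashMap<>();

    BlocksState() {
        for (char loc : new char[] {'A', 'B', 'C'}) stacks.put(loc, new ArrayList<>());
    }

    /** Places a block on top of whatever is already at the location. */
    BlocksState put(char loc, int block) {
        stacks.get(loc).add(block);
        return this;
    }

    /** The block currently on top of a location, or -1 if the location is empty. */
    int top(char loc) {
        List<Integer> s = stacks.get(loc);
        return s.isEmpty() ? -1 : s.get(s.size() - 1);
    }

    /** Compact text form such as "A:[] B:[3, 2, 1] C:[]" (each list is bottom to top). */
    @Override
    public String toString() {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<Character, List<Integer>> e : stacks.entrySet()) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(e.getKey()).append(':').append(e.getValue());
        }
        return sb.toString();
    }
}
```

For example, `new BlocksState().put('B', 3).put('B', 2).put('B', 1)` prints as `A:[] B:[3, 2, 1] C:[]`. Storing stacks bottom to top makes "only the top block can move" a simple operation on the end of each list.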

Software Design and Implementation

The actions of a robotic arm are controlled by a program. The program generates plans based on the state information and determines the type of each action as well as the parameters required to perform it. If continuous planning is adopted, then planning is based on the current state and one action is returned at a time. A simpler approach is to create a complete plan consisting of a series of actions to be carried out to reach the goal state. Using Figure 1 as an example, based on the initial state in 1(a) and the goal state in 1(e), the series of actions is:

Move(block1, locationA), Move(block2, locationA), Move(block3, locationC), Move(block2, locationC), Move(block1, locationC)

Continuous planning requires object recognition that is not achievable using the sensors provided by the LEGO Mindstorms kit. Thus you are only required to perform one-time planning at the beginning to determine the series of actions to be carried out by your robotic arm. Now you should come up with an algorithm to generate the action sequence based on the state representation you devised previously.
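One possible planning approach, and an assumption rather than the planner the assignment expects, is breadth-first search over states. It returns a shortest complete plan in the `Move(block, location)` notation used above. In this sketch a state is a list of three stacks for locations A, B and C, each stored bottom to top, and only a top block may be moved. `BlocksPlanner` is a hypothetical name.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Breadth-first planner over blocks-world states (a sketch, not a reference solution). */
class BlocksPlanner {
    static final char[] LOCS = {'A', 'B', 'C'};

    /** Deep copy of a state: one list per location, each stored bottom to top. */
    static List<List<Integer>> copy(List<List<Integer>> s) {
        List<List<Integer>> c = new ArrayList<>();
        for (List<Integer> stack : s) c.add(new ArrayList<>(stack));
        return c;
    }

    /** Returns a shortest list of Move actions from start to goal, or null if none exists. */
    static List<String> plan(List<List<Integer>> start, List<List<Integer>> goal) {
        Map<List<List<Integer>>, List<String>> pathTo = new HashMap<>();
        Deque<List<List<Integer>>> frontier = new ArrayDeque<>();
        List<List<Integer>> root = copy(start);
        pathTo.put(root, new ArrayList<>());
        frontier.add(root);
        while (!frontier.isEmpty()) {
            List<List<Integer>> s = frontier.poll();
            List<String> path = pathTo.get(s);
            if (s.equals(goal)) return path;
            for (int from = 0; from < LOCS.length; from++) {
                if (s.get(from).isEmpty()) continue;           // nothing to pick up here
                for (int to = 0; to < LOCS.length; to++) {
                    if (to == from) continue;
                    List<List<Integer>> next = copy(s);
                    List<Integer> src = next.get(from);
                    int block = src.remove(src.size() - 1);   // only the top block can move
                    next.get(to).add(block);
                    if (pathTo.containsKey(next)) continue;   // already reached in no more moves
                    List<String> p = new ArrayList<>(path);
                    p.add("Move(block" + block + ", location" + LOCS[to] + ")");
                    pathTo.put(next, p);
                    frontier.add(next);
                }
            }
        }
        return null;   // goal not reachable (e.g., it contains different blocks)
    }
}
```

Breadth-first search is exhaustive. That is fine for the handful of blocks in this homework, but the number of states grows quickly with more blocks. A heuristic search would be the natural next step.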

Although the LEGO Mindstorms kit already includes programming software with a graphical interface, it is not convenient for writing complicated code that achieves tasks like planning. Fortunately, there is a platform called LEJOS, which allows us to write Java programs and then convert them into instructions that the RCX understands. You can find the technical details of LEJOS in the resources listed in the last section. Now write a program to give your robotic arm its software support. Your program should perform two tasks:

1. Come up with a planner that generates a series of actions to be performed.
2. Translate the actions into executable instructions and feed them into the RCX.

Note that the initial state and the goal state will be given on the spot, so hard-coding your robot to handle just one specific situation is a bad choice.

Hardware Design and Implementation

A simple robotic arm has 3 effective degrees of freedom (DOF): one for turning the arm left and right, one for raising and lowering the arm, and one for grabbing and releasing. If each of these 3 DOFs is handled by an electric motor, then we need a total of 3 motors. The actions that can be performed are then:

Grab(Object o); Release(Object o); RotateLeft(time t); RotateRight(time t); RaiseArm(time t); LowerArm(time t);

Note that the major movement of a robotic arm in our application is rotation. If the power of the electric motor is fixed during the process, then the rotation angle is proportional to the execution time t. By specifying the time t, the movement of the components of the arm can be controlled.
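Under the fixed-power assumption above, the angle turned is θ = ω·t for a calibrated angular speed ω, so the time needed for a desired rotation is t = θ/ω. The sketch below uses this to translate one planned `Move` into the timed primitives listed earlier. The location angles, the speed constant and the class name `ArmTiming` are hypothetical values you would replace with measurements from your own arm. The code only produces action strings and does not call the LEJOS API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Turns arm motions into timed primitive actions (all constants are hypothetical calibration values). */
class ArmTiming {
    /** Measured angular speed of the base motor at fixed power, in degrees per second (assumed). */
    static final double DEGREES_PER_SECOND = 90.0;

    /** Angle of each location relative to location B, in degrees (assumed layout). */
    static final Map<Character, Double> LOCATION_ANGLE = Map.of('A', -60.0, 'B', 0.0, 'C', 60.0);

    /** t = |angle| / angular speed, converted to milliseconds. */
    static long millisFor(double degrees) {
        return Math.round(Math.abs(degrees) / DEGREES_PER_SECOND * 1000.0);
    }

    /** Timed rotation between two different locations; a positive angle is treated as a right turn. */
    static String rotate(char from, char to) {
        double delta = LOCATION_ANGLE.get(to) - LOCATION_ANGLE.get(from);
        return (delta > 0 ? "RotateRight(" : "RotateLeft(") + millisFor(delta) + " ms)";
    }

    /**
     * Expands one planned Move into primitives. Uses a single lowering time for simplicity;
     * a real arm would need to adjust for the height of the stack at each location.
     */
    static List<String> expand(char armAt, char from, char to, int block, long lowerMillis) {
        List<String> steps = new ArrayList<>();
        if (armAt != from) steps.add(rotate(armAt, from));
        steps.add("LowerArm(" + lowerMillis + " ms)");
        steps.add("Grab(block" + block + ")");
        steps.add("RaiseArm(" + lowerMillis + " ms)");
        if (from != to) steps.add(rotate(from, to));
        steps.add("LowerArm(" + lowerMillis + " ms)");
        steps.add("Release(block" + block + ")");
        steps.add("RaiseArm(" + lowerMillis + " ms)");
        return steps;
    }
}
```

With these assumed constants, swinging from A to C (120 degrees at 90 degrees per second) yields `RotateRight(1333 ms)`.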

You should carefully design your robotic arm. A suggested approach is to construct the two major parts separately.

- The first part is the arm itself, which should have 2 motors attached to it. The first motor controls the raising and lowering action, and the second motor controls the fingers for grabbing and releasing.
- The second part is the base station. The base station carries the RCX, and it should have a rotational unit that can rotate the arm under the control of the third motor.

When you are constructing the two parts, you should consider the following issues:

- The base station should stand firmly on the ground (or the table) and provide enough support to the robotic arm so that it won't fall over during movement.
- The joints of the robotic arm should be designed wisely, so that the arm can reach an appropriate distance and angle.
- The two parts should be attached properly, without colliding with each other.

There is an example of a robotic arm on the CD-ROM included with each Mindstorms kit. You may want to use it as the starting point for your robotic arm, but you should make improvements so that the arm can handle the current problem efficiently.

Testing the Robotic Arm

After you have implemented the robotic arm, you may first let it perform the basic actions, such as rotating through different angles. Once you are sure that your robot is ready, you may define some initial states and goal states, run your program to determine the action series and then feed the actions into the robot to carry them out.

For testing purposes, you need to construct a physical environment to simulate the "blocks world". If your robotic arm has difficulty grabbing a block, you may consider alternatives such as a ball or an object with an irregular shape that is easier to grab. Essentially, the ordering among the objects should be reflected.

With your final submission, you should include your environment settings so that we are able to test your robotic arm. We may also consider conducting demo sessions so that you can show how your robotic arm works yourself.

(Bonus part): Online Planning

Note that in the basic part, your task is simply to perform off-line planning based on the given initial state and known goal state. When planning is completed, a series of actions is generated and executed with no further changes. Off-line planning does not involve perception (in this case, the light sensors), and it expects all the actions to be performed successfully. However, this expectation is too good to be true in most situations, and a sensorless planner has no way to get out of an unexpected state resulting from a bad action.

In the real world, we have to deal with cases where an action fails to produce the expected result. Planning methods that handle indeterminacy are discussed in the textbook on page 431. The basic idea is to equip the agent with sensors so that it can detect any problem during action execution and then recover from it. "Execution monitoring and replanning" is the most appropriate method to use here.

To apply this approach to your robotic arm, you need to add two features:

- The robotic arm should have sensors at appropriate places on the arm so that the arm can tell which object it is facing. This serves two purposes. Firstly, by knowing the position of an object, the robotic arm has partial information about which state it is in. This may give the robotic arm the ability of localization, in which case it can find out the initial state by itself. Secondly, the arm can detect whether the previous action was successful by checking whether the correct block was placed at the target location.
- The robotic arm should have the ability to replan. Based on the readings from the sensors, if a wrong block is detected at the target location, then the arm needs to do something to recover. One choice is to find the correct block at the other possible locations. After this, the robot can carry on with the remaining part of the original plan. Alternatively, the robot can form a new initial state and come up with a completely new plan.
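The monitor-and-replan loop can be sketched as below. The sketch follows the second recovery option above, building a completely new plan from the sensed state. `Arm`, `topBlockAt`, `Planner` and `replan` are hypothetical names standing in for your motor code, your light-sensor reading and your planner. The sketch only shows the control flow of execution monitoring.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

/** Execution monitoring with replanning (control-flow sketch; all interfaces are hypothetical). */
class Monitor {
    /** One planned step: move `block` onto location `to`. */
    record Move(int block, char to) {}

    /** What the sketch needs from the robot: act, then sense the top block at a location. */
    interface Arm {
        void execute(Move m);
        int topBlockAt(char loc);   // e.g. decoded from the light sensor; -1 if empty/unknown
    }

    /** Produces a fresh plan from whatever state the robot can currently observe. */
    interface Planner {
        List<Move> replan(Arm arm);
    }

    /** Runs the plan, checking each step and replanning on failure; returns the number of replans. */
    static int run(List<Move> plan, Arm arm, Planner planner, int maxReplans) {
        Deque<Move> todo = new ArrayDeque<>(plan);
        int replans = 0;
        while (!todo.isEmpty()) {
            Move m = todo.poll();
            arm.execute(m);
            if (arm.topBlockAt(m.to()) != m.block()) {        // monitoring: did the block land?
                if (++replans > maxReplans) throw new IllegalStateException("giving up");
                todo = new ArrayDeque<>(planner.replan(arm)); // discard the old plan, replan
            }
        }
        return replans;
    }
}
```

The `maxReplans` bound is a design choice added here so that a robot that keeps dropping a block does not loop forever.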

To illustrate the idea of localization, let's consider the example given in Figure 1 in the description of the basic tasks. Instead of telling the robotic arm that the initial state is 1(a), we may let the arm determine the initial state by itself. When the arm is lowered to some level, the light sensor can tell the color of block 1, so the arm knows block 1 is at the top. When the arm moves block 1 to another location and comes back, it sees block 2. Now the initial state is known, since the only possible location for block 3 is under block 2.
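The inference in this example can be written down directly. The sketch assumes, as in Figure 1(a), that all blocks start in a single stack. Once every block but one has been seen while unstacking, the remaining block must be at the bottom. `Localizer` and `inferStack` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Infers the initial stack from blocks seen on top while unstacking (illustrative sketch). */
class Localizer {
    /**
     * @param seenTopDown blocks identified by the sensor, in the order they were uncovered
     * @param allBlocks   every block known to be in the stack
     * @return the full stack listed top to bottom, or null if it is still ambiguous
     */
    static List<Integer> inferStack(List<Integer> seenTopDown, Set<Integer> allBlocks) {
        Set<Integer> unseen = new LinkedHashSet<>(allBlocks);
        unseen.removeAll(seenTopDown);
        List<Integer> stack = new ArrayList<>(seenTopDown);
        if (unseen.size() == 1) {
            stack.add(unseen.iterator().next());   // the only block left must be at the bottom
        } else if (!unseen.isEmpty()) {
            return null;                           // more than one block still unaccounted for
        }
        return stack;
    }
}
```

After seeing blocks 1 and 2, the arm can stop exploring: `inferStack(List.of(1, 2), Set.of(1, 2, 3))` gives `[1, 2, 3]`.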

To show how replanning can be done, let's consider the move from 1(d) to 1(e). The original plan is to move block 1 to location B, then move block 2 to location C, and then move block 1 to location C. Imagine that while being moved, block 2 is dropped onto block 1 at location B. Through execution monitoring, the robotic arm will go to location C and check the color of the top block, which is block 3. Now the arm knows something is wrong, and it needs to visit the other locations to find block 2 and move it to location C (an extra action that is not included in the original plan). If nothing goes wrong this time, the arm can proceed to move block 1. If block 2 drops again, then the arm needs to determine another extra action.

Note that the light sensor provided in the LEGO Mindstorms kit can distinguish among three light intensities: white, black and green. So, to be recognized by the light sensor(s), the objects need to have one of these colors.
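Turning a raw light reading into one of the three colors comes down to two thresholds. The sketch assumes that higher readings mean brighter surfaces and that green reads between black and white. The sample readings in the usage check are hypothetical, so you should calibrate with your own blocks under your own lighting. `ColorClassifier` is an illustrative name.

```java
/** Maps a raw light-sensor reading to a block color using two calibrated thresholds. */
class ColorClassifier {
    enum Color { BLACK, GREEN, WHITE }

    final int blackGreenCut;   // readings below this are BLACK
    final int greenWhiteCut;   // readings at or above this are WHITE

    ColorClassifier(int blackGreenCut, int greenWhiteCut) {
        if (blackGreenCut >= greenWhiteCut) throw new IllegalArgumentException("cuts out of order");
        this.blackGreenCut = blackGreenCut;
        this.greenWhiteCut = greenWhiteCut;
    }

    /** Places each threshold halfway between the calibration readings on either side of it. */
    static ColorClassifier fromSamples(int black, int green, int white) {
        return new ColorClassifier((black + green) / 2, (green + white) / 2);
    }

    Color classify(int reading) {
        if (reading < blackGreenCut) return Color.BLACK;
        if (reading < greenWhiteCut) return Color.GREEN;
        return Color.WHITE;
    }
}
```

Putting the cuts at midpoints between calibration samples leaves the same margin for sensor noise on both sides of each boundary.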

If your robotic arm is able to perform online planning, your team will be awarded 20% bonus marks, which can make up for any marks deducted for other aspects of this homework. The maximum final mark you can get is still 100%.

Resources

1. LEJOS: http://lejos.sourceforge.net/
2. LEGO Mindstorms Homepage: http://www.legomindstorms.com/
3. LEGO Robotics resources: http://www.crynwr.com/lego-robotics/
4. Local Lejos and Mindstorms set-up instructions from Weihua

Min-Yen Kan <kanmy@comp.nus.edu.sg> Created on: Tue Feb 10 13:54:48 2004 | Version: 1.0 | Last modified: Mon Mar 22 09:46:08 2004

Pictures from their work

[Photos of the robots, and of the robots with their creators, for Groups 35, 18, 25 and 24]

Final Exam Questions

I’ve included two questions from the final exam. The first shows that students have to think about principles rather than just remember the facts taught to them. The second shows that even calculation questions can be made interesting.

I’ve also included comments from two students about the final that I think are particularly helpful.

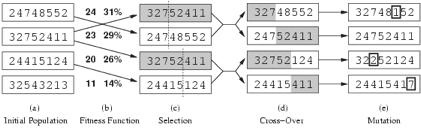

2. Genetic Algorithms (8 points)

The genetic algorithm has two steps in generating an individual from a population. The first step selects two individuals at random with probability proportional to their fitness. The two parents reproduce by contributing parts of their genomes to create a new individual. In the second step, the new individual’s genome is possibly mutated at some random location.

Briefly discuss the impact on the algorithm if the second (mutation) step is omitted.
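The two steps described in the question can be sketched for bit-string genomes. Fitness-proportional (roulette-wheel) selection picks each parent, one-point crossover combines them, and each bit then flips with probability `mutationRate`. Setting that rate to 0 omits the second step. The bit-string encoding, the crossover operator and the name `GeneticStep` are assumptions made for illustration.

```java
import java.util.Random;

/** One reproduction step of a simple genetic algorithm over bit-string genomes. */
class GeneticStep {
    /** Roulette-wheel selection; fitness values must be non-negative with a positive total. */
    static int select(double[] fitness, Random rng) {
        double total = 0;
        for (double f : fitness) total += f;
        double r = rng.nextDouble() * total;
        for (int i = 0; i < fitness.length; i++) {
            r -= fitness[i];
            if (r < 0) return i;
        }
        return fitness.length - 1;   // guards against floating-point round-off
    }

    /** Produces one child: select two parents, cross them over, then (optionally) mutate. */
    static boolean[] child(boolean[][] pop, double[] fitness, double mutationRate, Random rng) {
        boolean[] a = pop[select(fitness, rng)];
        boolean[] b = pop[select(fitness, rng)];
        int cut = rng.nextInt(a.length + 1);                         // one-point crossover position
        boolean[] kid = new boolean[a.length];
        for (int i = 0; i < kid.length; i++) kid[i] = i < cut ? a[i] : b[i];   // step 1: reproduction
        for (int i = 0; i < kid.length; i++) {
            if (rng.nextDouble() < mutationRate) kid[i] = !kid[i];   // step 2: mutation
        }
        return kid;
    }
}
```

In this sketch, a bit value that no member of the population carries can only be introduced by the mutation loop.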

8. Learning from Observations (20 points)

Part I. Ms Apple I. Podd is an SoC student who listens to music almost everywhere she goes. She often has homework due for her modules, and many of them involve programming. We’ve sampled her choice of music genre at several points in time.

Using information gain, compute the decision tree for the following observations of Apple. Show all work. The following mathematical formulas may be useful for your computation.

[N.B. Data rows omitted for space]
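For reference, here is a sketch of the standard computations behind this question. Entropy is H = -Σ p_i log2 p_i. The information gain of an attribute is H(parent) minus the size-weighted entropy of its branches. The sketch works on class counts so it can be checked against hand calculations. The counts in the usage check are made up for illustration and are not Apple's omitted data rows. `InfoGain` is an illustrative name.

```java
/** Entropy and information gain over class counts, as used when growing a decision tree. */
class InfoGain {
    /** H = -sum p_i log2 p_i over the non-empty classes. */
    static double entropy(int... counts) {
        int total = 0;
        for (int c : counts) total += c;
        double h = 0;
        for (int c : counts) {
            if (c == 0) continue;                  // 0 log 0 is taken as 0
            double p = (double) c / total;
            h -= p * Math.log(p) / Math.log(2);
        }
        return h;
    }

    /**
     * Gain = H(parent) - sum_k (|S_k| / |S|) H(S_k).
     * @param branches class counts for each value of the attribute, e.g. {{3, 1}, {0, 4}}
     */
    static double gain(int[][] branches) {
        int classes = branches[0].length;
        int[] parent = new int[classes];
        int total = 0;
        for (int[] b : branches) {
            for (int k = 0; k < classes; k++) { parent[k] += b[k]; total += b[k]; }
        }
        double remainder = 0;
        for (int[] b : branches) {
            int size = 0;
            for (int c : b) size += c;
            remainder += (double) size / total * entropy(b);
        }
        return entropy(parent) - remainder;
    }
}
```

At each node, the attribute with the largest `gain` becomes the split, and the procedure repeats on each branch.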

From: Ty (nickname)

Date: 12/02/2004 08:25:00 PM

Topic: Re: Exam format Query

Just making sure.. Anyway, judging from the midterm questions, I guess this module requires more thinking skills than memorising skills. So I guess its alright even with just an A4 sheet.

From: LEONG WAI KAY

Topic: Re: last post

Yah he prolly had an AI agent mark those papers.. lol Anyone wanna discuss some of the questions from the exam? Think the questions were rather intresting and thoughtfully set.

Summary

In this module, I completed my first semester of teaching a large undergraduate class by myself. I hope to improve the curriculum in the future by tying the (interesting) homework assignments I have created more closely to the course curriculum.

The first avenue for improvement is to redo the tutorials, as they have been used in the course many times. Students recognize old material when they see it, and the questions are not as relevant as they need to be.

Kathy McKeown, Aug 31:

Min -- can you send me information about your AI class? I'd love to be able to see:

1. your syllabus
2. your notes for class
3. your assignments
4. your tests

To start, I'm more interested in 1 and 3. Particularly 3, as I'm trying to decide what game playing program to give them and I remember that you had an interesting one.

Thanks!

Kathy

Samples from CS 6210 / 5244 – Digital Libraries

In Digital Libraries, I teach a course that is oriented towards the application of databases, HCI and text processing. It integrates all of these fields, yet it needs to be balanced so that it does not significantly overlap with the material of the courses on these topics taught by other professors.

The course is project-based and places a heavy emphasis on building core research skills. As such, a survey paper, a presentation and a final project are its core components. Students are encouraged to make their work publishable at a conference. At least one of the ten teams in the course did end up submitting their work for publication.

Course Description

This module is targeted at advanced undergraduates and beginning graduate students who wish to understand real-world issues in building, using and maintaining large volumes of information in digital libraries.

Fundamentals of modern information retrieval will be taught first, with a particular focus on how this fundamental technology is merged with the traditional information-finding skills of the librarian / cataloger / archivist.

Students will round out their knowledge with case studies of how different disciplines (e.g., music, arts, medicine and law) impose different search, usability and maintenance requirements on the digital library.

More detail on this course: Why have this course? Who should think about taking this course? What are the prerequisites? What are the aims and objectives of the course? How are students to be assessed?

Aims and Objectives

- Acquire working skills in research using electronic texts of many types: from on-line newspaper texts to fiction to hyperlinked collections of documents.
- Learn how text-based information systems work: principles, design, indexing, search and retrieval, markup, clustering. Get hands-on experience in designing and building such systems.
- Understand the perspectives and problems of information providers in non-CS/IS fields, and how to apply current and emerging technologies to these problems.
- Familiarize students with current standards in digital library environments and the specific requirements of digital libraries for different disciplines.

Modes of Learning

(Updated Thu Jul 24 14:15:05 GMT-8 2003)

Lectures and class participation will be used for the first half of the course. The first half will cover fundamentals of information retrieval and digital library standards, and will also introduce students to a wide variety of topics of current interest to information professionals in academia and industry.

Survey paper. By the third week of the course, students will have selected one topic (from a controlled list) on which to write a survey paper, using papers suggested by the lecturer as well as their own information gathering.

Mini lecture presentation. Based on the topic of your survey paper, you will be grouped into small teams to present the material from the papers in your survey. These lectures will take place in the last three sessions of our regular class schedule. Lectures will be 15 minutes long (about 5-8 slides), with five minutes for questions.

Project. After the survey, students will work on a particular project topic for further study, either individually or in groups of two or three. Students can either propose projects to me or choose from a list of topics. Our last class will feature a poster presentation (open to the public), which will allow SoC staff and students to see the results of your work. Information on choosing a topic for the final project is given here.

N. B. - I know students are sometimes at a loss as to how to assess their progress in a course because of delays in instructors or tutors returning assessments to them. I will try my best to grade work within two weeks of receiving the homework assignments.

Textbook and Required Readings

Required:

- Lesk, Michael (1997) Practical Digital Libraries: Books, Bytes and Bucks.

Recommended:

- Baeza-Yates and Ribeiro-Neto (1999) Modern Information Retrieval. We are going to use a chapter of it that is available online, by Marti Hearst on User Interfaces.
- Witten, Moffat and Bell (2003) Managing Gigabytes.
- Also, Arms (2003), online version of his earlier 2000 book, Digital Libraries.
- Chakrabarti (2003) Mining the Web.

(Updated Thu Jul 24 14:07:58 GMT-8 2003) I have put a number of books that will be relevant to your project work in the RBR. Click here to see the current books on reserve and their status.

We will also be reading selected papers from a number of relevant conferences to give you an idea of the current state of the art. You will be reading a number of additional papers as part of the survey paper.

Syllabus

(Updated on Tue Oct 7 14:50:20 GMT-8 2003)

Below is the syllabus for the course. Not detailed enough for you? Try the complete, detailed schedule. N.B. - The notes below are the public copies, which are purposely incomplete. Students in class should use the versions in IVLE, which are more complete.

- (S0. 6 Aug) M0: Defining a digital library [ Orientation ]
- (S1. 13 Aug and S2. 20 Aug) M1-M2: A crash course in traditional library science. [ Overview ] [ Reference Interviews ] [ Information Seeking ] [ Overview 2 ] [ Library Evaluation ]
- (S3. 27 Aug) M3: Practical aspects of information retrieval. [ Practical IR ] [ IR Evaluation ]
- (S4. 3 Sep) M4: Multimedia, multilingual, multiple access, multiple needs. [ Multimedia ] [ Textual Images ]
- (S5. 10 Sep) M5: Traditional and automated approaches and cataloguing/indexing services. [ Indexing and Cataloging ] [ Identifiers ] [ WordNet ]
- (S6. 17 Sep) M6: Metadata creation and management. [ Metadata ] [ Semantic Web ]
- (S7. 24 Sep) M7: Introduction to bibliometrics. [ Bibliometrics ] [ Pagerank and HITS ] [ Assessment Overview ]
- (S8. 1 Oct) M8: Usability of OPACs and retrieval engines with respect to searching, browsing and retrieval. [ Usability and HCI ] [ Presentation Guidelines ]
- (S9. 8 Oct) M9: Computational literary analysis and M10: Digital library policy [ Computational Literary Analysis ] [ DL Policy ]
- (S10. 15 Oct) M11: Mini lecture presentations by student teams. [ Web Information Seeking (Presented by Yang Hui and Guo Shuqiao) ] [ Peer to Peer Systems in the DL (Presented by Li Yingguang and Vorobiev Artem) ] [ Document Clustering for DLs (Presented by Lin Li and Wong Swee Song) ] [ Intelligent Agents in the DL (Presented by Tok Wee Hyong and Qiu Long) ]
- (S11. 22 Oct) M12: Mini lecture presentations by student teams. [ Spatial and Temporal (Maslennikov Mstislav and Chan Yee Seng) ] [ Music and Speech in DL (Wang Gang and Huang Wendong) ] [ Multimedia Mining and summarization (Edward Wiyaja and Hendra Setiawan) ] [ Metadata Extraction (Chen Xi and Wang Xiaohang) ]
- (S12. 29 Oct) No class - poster presentation in lieu (8 Nov)
- ([Thursday] 13 Nov, 1-5 pm) - Final project poster presentations.

Reading Period: 15. 5 Nov 2003

Teaching-Learning Materials

I’ve illustrated some of the techniques I use with just one lecture as an example (HCI in digital libraries).

Here I’ve used some strategically placed blanks to keep students’ attention and to make them remember what they’ve heard.

I give real-life examples and annotate them on the slides so that students can follow both in the lecture and in their notes (they get the .ppt or .pdf files of the slides) when studying or reflecting on the course.

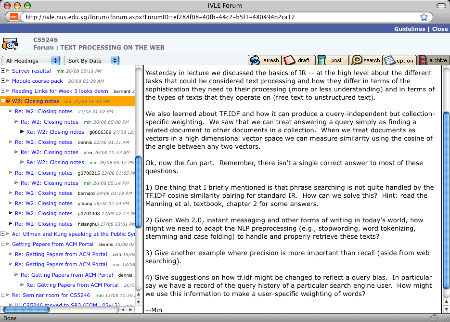

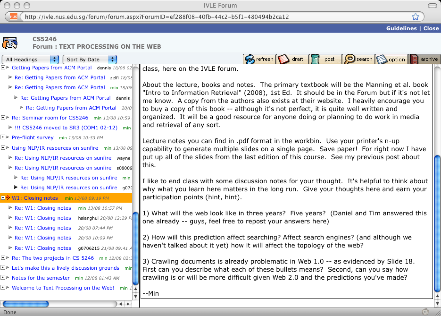

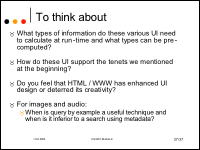

I frame my lectures with introductory and concluding material that summarizes the information I’ve given in lecture. I also post the questions I pose at the end of lecture to IVLE, to get students to bounce the problem back and forth among themselves. The third bullet on the right-hand slide is the basis for my IVLE post, which I would like students to reflect on.

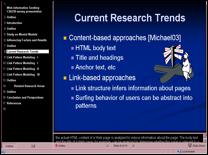

This module also included some presentations by students as part of their continual assessment. I’ve included some of their work here to illustrate the mode of peer-to-peer teaching. Each team was given 20 minutes of presentation time and a budget of about 10 slides.

Continual and Final Assessment

This module had neither a midterm nor a final. As suggested by the Head of Department, I have revised this module to have both a midterm and a final, eschewing the continual assessment. I have included one of the two assignments here.

Homework 2 - Authorship Detection


As per our lecture materials on authorship detection, you will be creating machine learning features to be used with a standard machine learner to try to guess the identity of the author. We will be using book reviews from Amazon.com to try to compute attribution.

Details

You are to come up with as many features as you can think of for computing the authorship of the files. "Classic" text categorization usually works to compute the subject category of a work. Here, because many of the book reviewers examine similar subjects and write reviews for a wide range of books, standard techniques will not fare as well. You should use features that you come up with, as well as any additional features you can think of. You can code any feature you like, but you will be limited to 30 real-valued features in total. You do not have to use all 30 features if you do not wish to.

In the workbin, you will find a training file containing reviews of books and other materials from 2 of Amazon's top customer reviewers (for their .com website; Amazon keeps different reviews in different countries, e.g., UK). It is organized as 1 review per line, so the lines are very long. Each line gives the review, followed by a tab (\t) and then the author ID (either +1 or -1). There are 100 examples per author in the training section.

In the test.txt file you will find a list of reviews, again one per line, but without the author ID.

We are going to use the SVMlight package by Thorsten Joachims as the machine learning framework. You should familiarize yourself with how to apply SVM to your dataset. SVMlight has a very simple format for the vectors used to train and test its hypothesis; I should have demonstrated this during class. Be aware that training an SVM can be quite time-intensive, even on a small training set.
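Here is a minimal sketch of the feature pipeline, assuming the training-file layout described above (review text, a tab, then +1 or -1). It computes three illustrative features. Review length in words is the feature mentioned in the grading section; average word length and exclamation marks per word are hypothetical choices. The output uses SVMlight's sparse line format: a target value followed by `feature:value` pairs, with feature numbers as increasing positive integers. `ReviewFeatures` is an illustrative name.

```java
import java.util.Locale;

/** Turns a "review<TAB>label" line into an SVMlight-style line (illustrative features only). */
class ReviewFeatures {
    static final int MAX_FEATURES = 30;   // the assignment's limit

    /** Feature values for one review; array index i becomes feature number i + 1. */
    static double[] features(String review) {
        int words = review.isBlank() ? 0 : review.trim().split("\\s+").length;
        int letters = 0, bangs = 0;
        for (char ch : review.toCharArray()) {
            if (Character.isLetter(ch)) letters++;
            if (ch == '!') bangs++;
        }
        return new double[] {
            words,                                      // 1: review length in words
            words == 0 ? 0 : (double) letters / words,  // 2: average letters per word
            words == 0 ? 0 : (double) bangs / words     // 3: exclamation marks per word
        };
    }

    /** Formats "label 1:v1 2:v2 ..." with feature numbers in increasing order. */
    static String toSvmLight(String label, double[] f) {
        if (f.length > MAX_FEATURES) throw new IllegalArgumentException("at most 30 features allowed");
        StringBuilder sb = new StringBuilder(label);
        for (int i = 0; i < f.length; i++) {
            sb.append(' ').append(i + 1).append(':').append(String.format(Locale.ROOT, "%.4f", f[i]));
        }
        return sb.toString();
    }

    /** Parses one training line: review text, a tab, then +1 or -1. */
    static String trainingLine(String line) {
        int tab = line.lastIndexOf('\t');
        return toSvmLight(line.substring(tab + 1).trim(), features(line.substring(0, tab)));
    }
}
```

For instance, the line `Great book! Loved it` followed by a tab and `+1` becomes `+1 1:4.0000 2:4.0000 3:0.2500`. Style features like these are meant to capture how a reviewer writes rather than what the book is about.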

What to turn in

You will upload an X.zip file (where X is your matric ID) by the due date, consisting of the following four files:

- A summary file in plain text, giving your matric number (as the only form of ID) and the percentage precision and recall for each class under leave-one-out validation. You should inventory the features used in your submission and briefly explain each one (1 sentence to 1 paragraph), if non-obvious. (filename: X.sum, where X is your matric ID). You can also include any notes about the development of your submission if you'd like.
- Your training file (X.train), consisting of the features and the author ID for each review. This should be in a format that can be passed directly to the machine learner for training. Remember, you may only use up to a maximum of 30 features.
- Your testing file (X.test), consisting of the same features as above, but without the class labels (as you don't know them).
- A model file (X.model) that was induced by running svm_train on your training file with defaults (i.e., no optional arguments).
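The per-class precision and recall asked for in the summary file reduce to three counts per class. The sketch assumes gold labels and predictions are given as +1/-1 integers. How you obtain the leave-one-out predictions is left to your own scripts. `PrecisionRecall` is an illustrative name.

```java
/** Per-class precision and recall from gold labels and predictions (both +1 or -1). */
class PrecisionRecall {
    /** Returns {precision, recall} as percentages for the given class. */
    static double[] forClass(int[] gold, int[] predicted, int cls) {
        int tp = 0, fp = 0, fn = 0;
        for (int i = 0; i < gold.length; i++) {
            boolean predictedCls = predicted[i] == cls;
            boolean isCls = gold[i] == cls;
            if (predictedCls && isCls) tp++;        // correctly labelled as cls
            else if (predictedCls) fp++;            // labelled cls, but it is the other author
            else if (isCls) fn++;                   // cls example the classifier missed
        }
        double precision = tp + fp == 0 ? 0 : 100.0 * tp / (tp + fp);
        double recall = tp + fn == 0 ? 0 : 100.0 * tp / (tp + fn);
        return new double[] {precision, recall};
    }
}
```

Calling it once with `cls = 1` and once with `cls = -1` gives the two rows the summary file needs.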

Updated on Wed Oct 15 15:25:12 GMT-8 2003. Please use a ZIP (not RAR or TAR) utility to construct your submission. Do not include a directory in the submission to extract to (e.g., unzipping X.zip should give X.sum, not X/X.sum or submission/X.sum). Please use all capital letters when writing your matric number (matric numbers should start with HT or HD for all students in this class). Your cooperation with the submission format will allow me to grade the assignment in a timely manner.

Grading scheme

Your grade will be 75% determined by performance as judged by accuracy, and 25% determined by the summary file you turn in and the smoothness of the evaluation of your submission. For example, if your files cause problems with the machine learner (incorrect format, etc.), this will result in penalties within that 25%.

Of the remaining 75%, 45% of the grade will be determined by your training set performance, and 30% by your test set performance. For both parts, the grade will be largely determined by how your system performs against your peers' systems. I will also have a reference baseline system to compare your systems against; this will constitute the remaining portion of the grade.

Please note that it is quite easy to get a working assignment that will earn you most of the grade. Even a single-feature classifier, using review length in words as its only feature, would be awarded at least 40 marks (the 25% plus 15% for worse-than-baseline performance). I recommend that you make an effort to complete a working baseline system within the first week of the assignment.

Summary

In this module, I completed my first semester of teaching a graduate class. This semester, I am re-running the course and am working on improving the curriculum by introducing more lectures on related topics (e.g., new media, blogging, instant messaging and short message service) to make the course more relevant to today's students.

Selected Student Feedback

Although you have a full inventory of the student feedback on each faculty member at your disposal, I have provided a distilled version that highlights both the weaknesses and the strengths of my teaching. I hope that it is valuable to you.

CS 1102 Data Structures (Semester II 2002/2003)

In my first semester of teaching, I co-taught CS 1102 with Sun-Teck, Wei-Tsang and David. Sun-Teck and I were paired as a team to teach one section, and I taught the first half of the course.

Please note that this was the first year we fielded the online judge for grading labs. As such, there were many snafus (e.g., downtimes and crashes of the judge). In my opinion, this is the cause of the low student feedback ratings.

Overall instructor score: 3.715 / 5

Number of students: 205

Nominations for teaching award: 15 (7% of class)

Comments (positive, then negative)

- Quite humorous at times. Can explain concepts quite clearly

- Lectures are painfully clear...=) Extremely helpful and always there for consultation

- I feel that he's approachable and forthcoming with extra elaboration and explanation on syllabus, as seen from his frequent postings on the forum. He tries to inject little humour into the lecture, making it less dry. =)

- I went to attend the first lecture, however I think the way he teaches is a little too difficult for me to understand. There is a certain gap between him and me, that's why I decided to do my own readings and try to understand through self learning.

- NEVER DO ANYTHING FUNNY...

- Since you are teaching in a Singapore university it might be beneficial to tone down your American accent in regard to the majority of Asian students in your lectures. And oh, perhaps add a bit more life and colour to your lecture notes.

CS 6210 Trial Run: Digital Libraries (Semester I 2003/2004)

This was my first run of my graduate course on digital libraries, coded as Special Topics in Computer Science. It was a small class and, as such, was easier to teach and also produced less feedback.

Overall instructor score: 3.715 / 5

Number of students: 15

Perceived Difficulty (new for 2003/2004 reports): 3.4 / 5

Nominations for teaching award: 5 (33% of class)

Comments (positive, then negative)

- Class discussions are actively stimulated during lecture times. He is very approachable for consultation. Once, I missed a lecture because I was held back during an extended discussion with another lecturer. When I approached Dr. Kan the next day to discuss some stuff, he readily gave a concise summary of the lecture which I've missed. He used online forums to hold further discussions + monitor the forums regularly too. Gave us opportunity to practice writing a critical survey paper.

- concentrate.

CS 3243 Artificial Intelligence (Semester II 2003/2004)

This was my first attempt at teaching AI. As mentioned earlier, we substantially revamped the curriculum. Weihua, my TA for the course, and I changed all of the homework assignments, as you can see in the module folder. Students pointed out that the homework was not well connected to the syllabus and that the tutorials were outdated. We plan to re-examine these weaknesses in the next run of the course.

Overall instructor score: 4 / 5

Number of students: 154 (not counting PG students taking for QE)

Perceived Difficulty (new for 2003/2004 reports): 3.8 / 5

Nominations for teaching award: 5 (33% of class)

Comments (holistic, positive, then negative)

- Strengths: good range of assignment topics for us to explore. Weaknesses: level of expectation of the students is perhaps a little too high. Some of us are from the 3-Year tech Focus Stream who may not have as strong foundations as our peers from the Hons stream. Hence, sometimes we do feel overwhelmed and lost. Perhaps a better balance could be established in the following semesters that offer this module. It was, nevertheless, a good experience for learning.

- Strength: good and difficult projects. Weakness: projects are not relevant to lectures

- One of the best balanced modules I have taken (in terms of workload, etc.). Interesting project concept and good lectures.

- He is well-versed in the area of AI, and hence was able to come up with innovative ideas / assignments relevant to the learning / exploration of the subject. He is also open to feedback, which is good since we students can then use it as a channel to communicate with him our feelings and sentiments over his management of the course.

- Organized, unarrogant, motivating and simply nice. The best lecturer I've had in Singapore.

- He is one of the nicest lecturers I've met so far in my 2 years university learning period. I nominate him for his professionalism and patience to us students. Another remarkable point is that he really THINKS how this module can improve, we can see that through the way he designs projects and so on. Thanks!

- Very interesting lecturer; can make even very boring subject matter like probability seem very interesting. Is also innovative in giving more thought-provoking assignments rather than things which require simple regurgitating or programming.

- projects are too time consuming

- The homework is unrelated to the course.

- Weakness of the module is using the old notes and follow ONE textbook as syllabus. AI != search and logic

- The syllabus has too many things... both the lecturers and students can't cope with such a workload

- I was thinking that he might have too high expectations of us in giving the first homework, but the situation was made better as evident in the setting of the homework 2 assignments. Perhaps he could do a review on what kind of students he has amongst the cohort before actually setting out to plan the assignments. This will help him to identify his target audience to better plan a module that not only caters to the needs but which also facilitates the learning of ALL the students rather than only the better ones.

- More active lecture, more variations in the tone. More difficult exercises in the tutorials

Appendix A – Emails on slide development

Before I converted the slides to PowerPoint format, many instructors on the AIMA mailing list were looking for this exact resource, so I decided to do something about it. As Stuart Russell (one of the textbook's authors) mentions in his email, no one had yet done this for the community.

# | Author | Date
203 | Min-Yen Kan | Mon 1/5/2004
191 | | Tue 10/7/2003
190 | | Mon 10/6/2003
189 | | Mon 10/6/2003
188 | | Mon 10/6/2003
187 | | Mon 10/6/2003
186 | | Mon 10/6/2003
185 | | Mon 10/6/2003
175 | | Sun 9/7/2003
41 | Roy M. Turner | Fri 11/9/2001
40 | simi | Thu 11/8/2001
39 | | Thu 11/8/2001
38 | Lisa Cingiser DiPippo | Thu 11/8/2001
37 | | Thu 11/8/2001
36 | Lisa Cingiser DiPippo | Thu 11/8/2001
26 | Austin Texas | Fri 8/31/2001

Hi all:

> It would be nice if the publisher (or a volunteer) would produce a
> powerpoint version of the slides...
> Stuart Russell

First, thanks to the authors of the book for creating a wonderful 2nd ed. of the AIMA text. The supplementary material is very helpful. For many of us, a PowerPoint version of the slides would be helpful*.

I've translated (edited) chapter slides into PowerPoint format (chapters 1, 2, 3, 4, 5, 6, 7, 8, 9 and 13, sections 1-2 of chapter 14, and sections 1-3 of chapter 18).

You're welcome to take them and use them in your courses. I've posted the web page versions and .pdf files on the web; the source PowerPoint files are available from me directly.

Please see http://www.comp.nus.edu.sg/~kanmy/courses/3243_2004/ for my syllabus and links to the PowerPoint slides.

Details on slide translation:

- main TeX translated into tabbed text using a perl script

- imported into PowerPoint and manually edited to add font changes, symbols and equations

- algorithms inserted as bitmaps from screen captures of the pdf file (I don't think many people will want to edit the algorithm details...)

- figures and graphs transformed to gif using un*x convert image