Human Subject Evaluation - 2

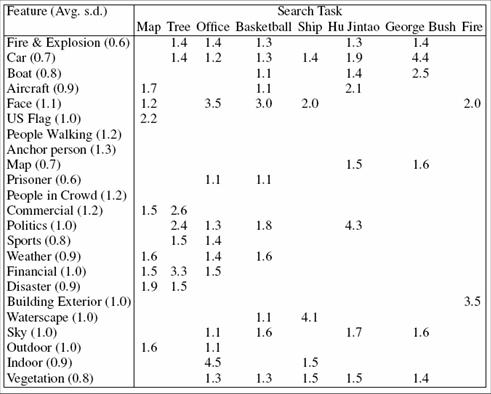

Importance ratings

- Inter-judge agreement, measured with Kappa, is low (0.2 to 0.4)
- Ratings for concrete nouns were the most stable, followed by backgrounds and video categories, with actions being the worst
- Negative correlations are prominent in our dataset
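As a rough illustration of how an agreement score in the 0.2 to 0.4 range can arise, here is a minimal sketch of Cohen's kappa for two judges. The slide does not say which Kappa variant was used, so the two-judge Cohen form is an assumption, and the function name `cohen_kappa` and the ratings below are hypothetical, not data from the study.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two judges rating the same items (assumed variant)."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(ratings_a)
    # Observed agreement: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement, from each judge's marginal label frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both judges used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical importance ratings (1 = low, 3 = high), for illustration only.
judge_1 = [3, 2, 3, 1, 2, 3, 1, 2, 3, 1]
judge_2 = [3, 3, 2, 1, 2, 1, 1, 3, 3, 2]
kappa = cohen_kappa(judge_1, judge_2)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the judges agree on half of the items, yet kappa comes out at about 0.24, because a third of that agreement would be expected by chance alone.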