|

Learning Search Strategies and Heuristics for Constraint Programming

Roland Yap

School of Computing, National University of Singapore

Abstract

Effective solving of constraint problems often requires designing specific or choosing good search heuristics. Thus, developing general-purpose search strategies is an important component in Constraint Programming.

We have introduced a new idea using correlation of conflicts between variables as the basis of search heuristics which can be recorded using a correlation matrix.

We propose two specific new variable heuristics using the correlaton matrix, crbs-sum and crbs-max. Preliminary experiments on a variety of different problem benchmarks

show that the correlation heuristics are competitive with existing well known and effective search heuristics.

Although there are many search heuristics which have been shown effective. It is unclear which search heuristic should be

applied on which problem. Furthermore, the performance between search

heuristics can vary significantly which makes the wrong choice, non-robust.

We propose to automatically select variable search heuristics using on-line reinforcement

learning. This leads to several algorithms based on various multi-armed

bandit techniques. We show that on-line learning leads to more robust

variable search heuristics.

Keywords: Search heuristics, reinforcment learning, multi-armed bandit, robust CSP solving

People

Roland Yap

Wei Xia

Ruiwei Wang

Publications

"Correlation Heuristics for Constraint Programming" [pdf]

by Ruiwei Wang, Wei Xia and Roland H. C. Yap

The 29th International Conference on Tools with Artificial Intelligence (ICTAI'17)

"Learning Robust Search Strategies Using a Bandit-Based Approach" [pdf]

by Wei Xia and Roland H. C. Yap

The 32th AAAI Conference on Artificial Intelligence (AAAI'18)

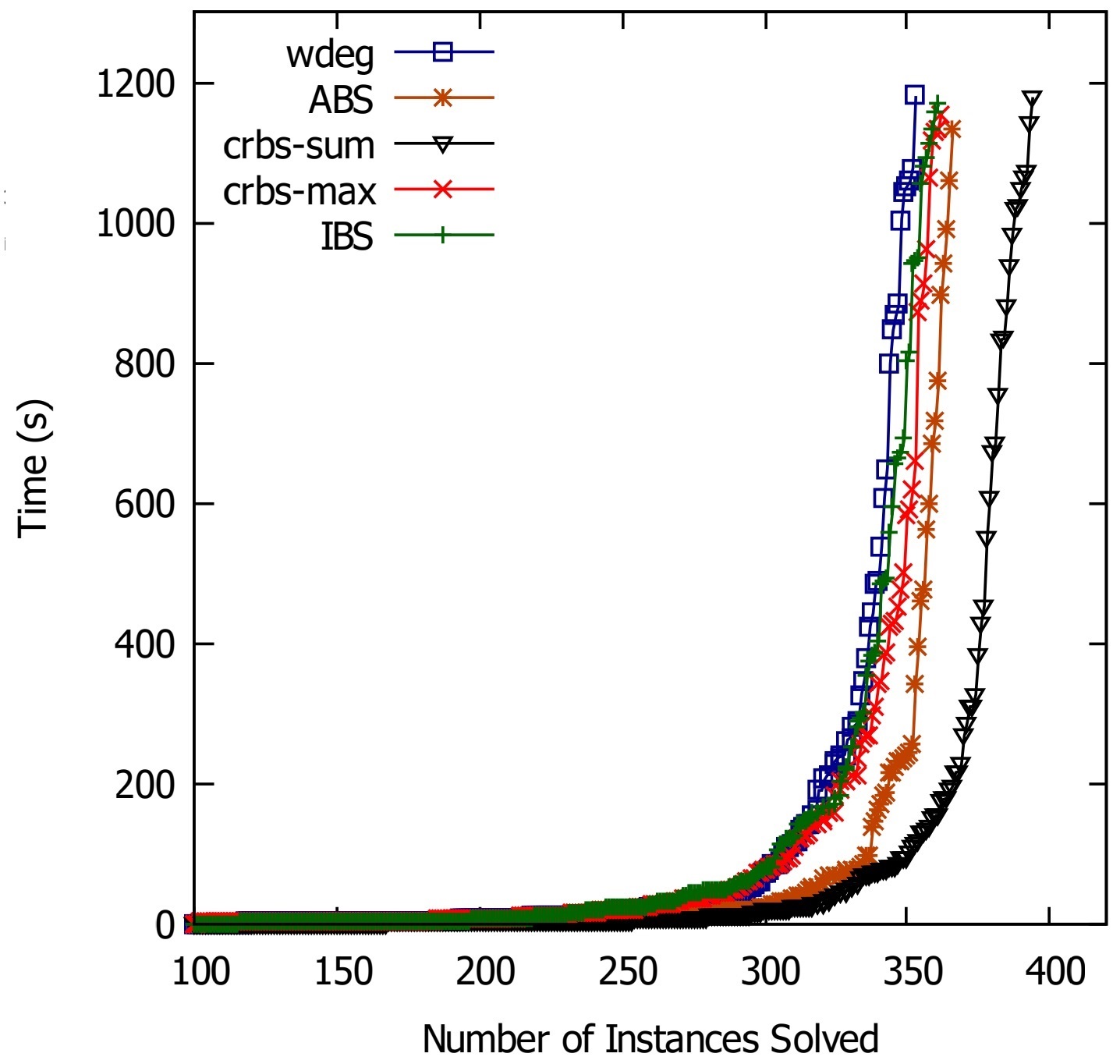

Experimental results for the correlation heuristics (ICTAIĄŻ17)

Figure 1 presents the runtime distribution of the benchmark

instances solved using the different heuristics. The variable heuristic crbs-sum and crbs-max are our correlation heuristics.

In this experiment, we see that crbs-sum solves the most instances within the time limit of 1200 seconds.

|

Figure 1: Runtime distribution for all benchmark instances.

|

Experimental results for the bandit-based heuristics (AAAI'18)

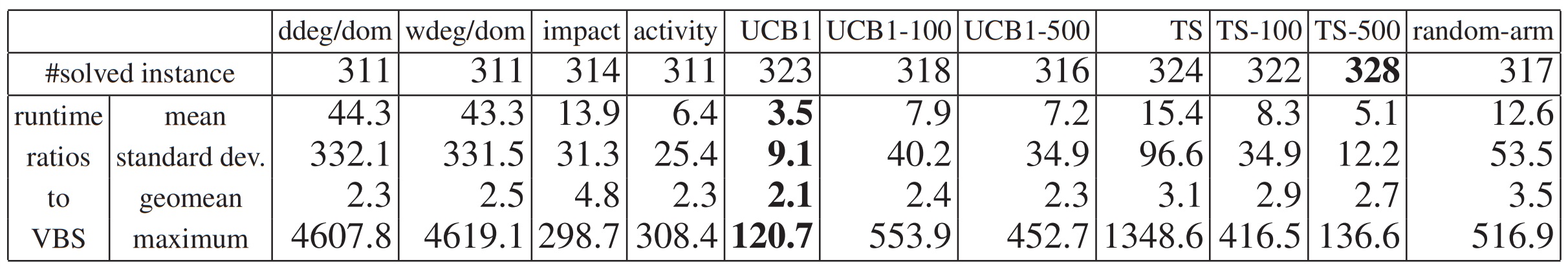

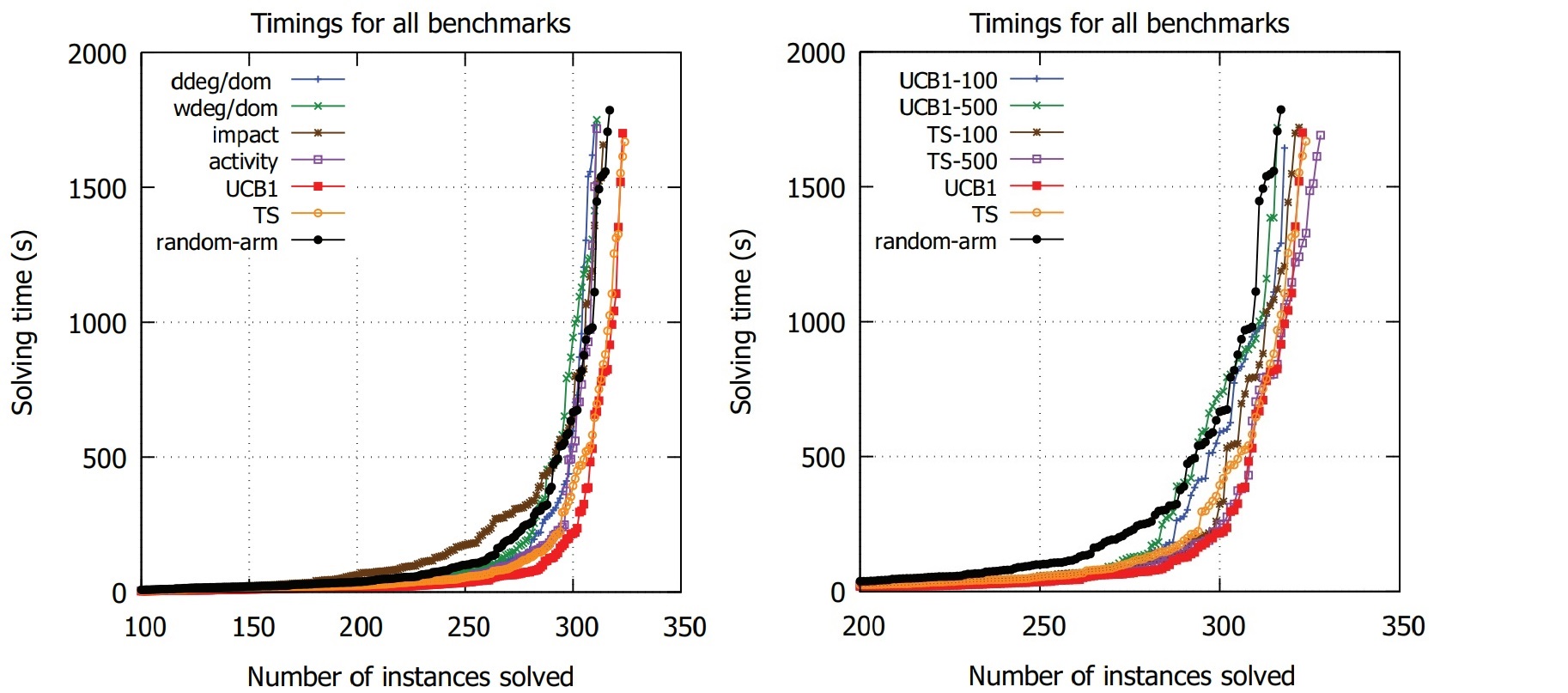

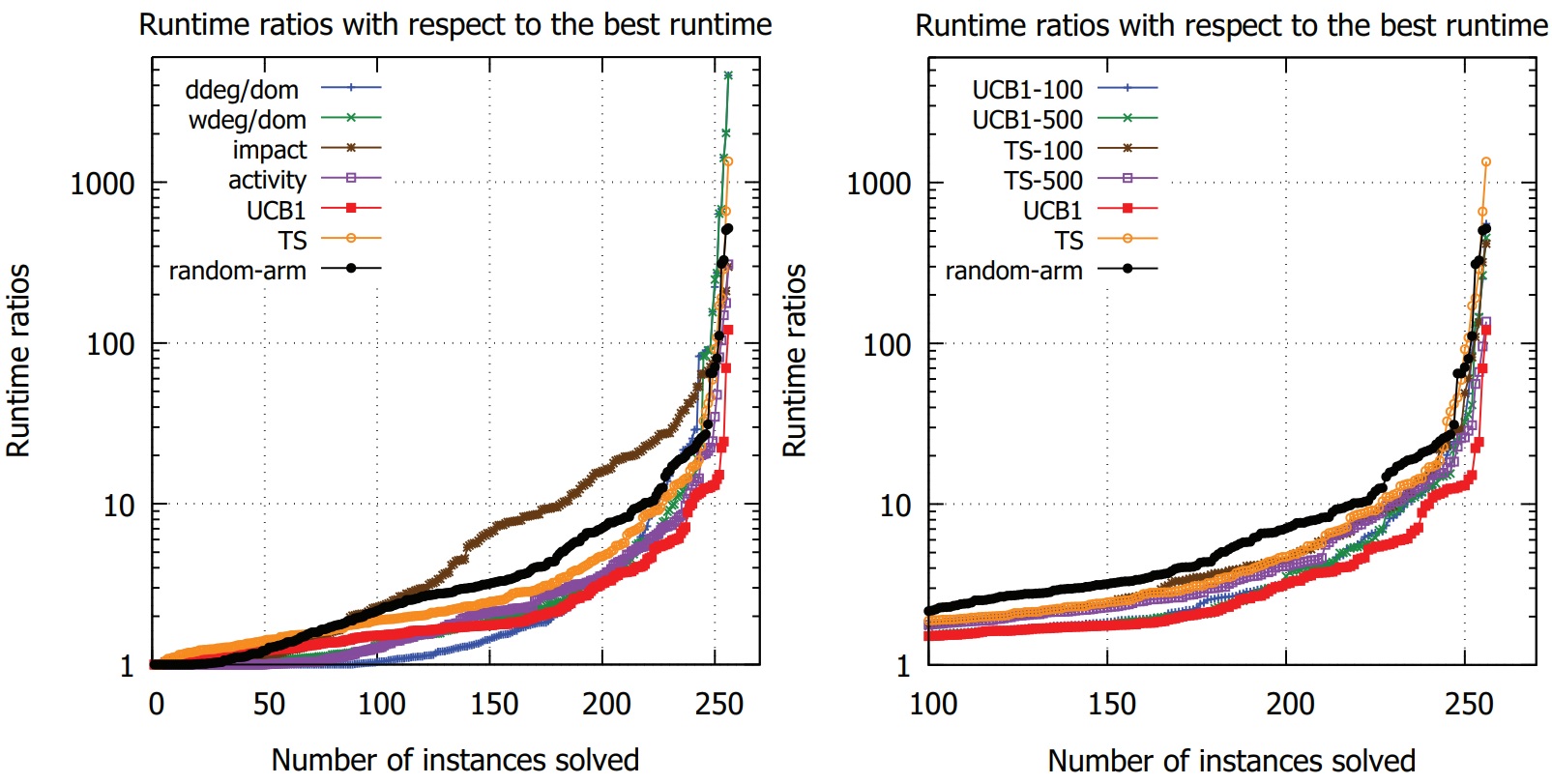

Table 1, Figure 2, and Figure 3 give the overall statistics, the runtime distribution and the runtime ratio distribution of all search heuristics. They show that UCB1 is the most robust with the smallest standard deviation and maximum ratio with respect to the VBS runtime.

|

Table 1: Overall results for all evaluated variable ordering heuristics. Our online learning heuristics are: TS, TS-n, UCB1, and UCB1-n.

Existing search heuristics compared are: ddeg/dom, wdeg/dom, impact, and activity.

A random meta heuristic, random-arm, is also compared.

|

|

Figure 2: Runtime distribution for all benchmark instances.

|

Figure 3: Runtime ratios to VBS for all benchmark instances.

|

|