CrowdOp: Query Optimization for Declarative Crowdsourcing Systems

CrowdOp is a declarative crowdsourcing system, which is designed to hide the complexities and relieve the user the burden of dealing with the crowd. The user is only required to submit an SQL-like query and the system takes the responsibility of compiling the query, generating the execution plan and evaluating in the crowdsourcing marketplace.

Introduction

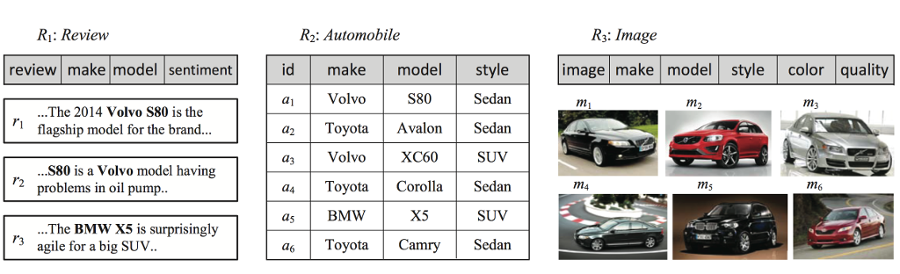

CrowdOp provides an SQL-like query language as a declarative interface to the crowd. An SQL-like declarative interface is designed to encapsulate the complexities of dealing with the crowd and provide the crowdsourcing system an interface that is familiar to most database users. To illustrate a declarative crowdsourcing interface, we consider the three example relations shown in the following figure: the REVIEW table contains car reviews from customers; the AUTOMOBILE table contains car specifications; the IMAGE table contains car pictures.

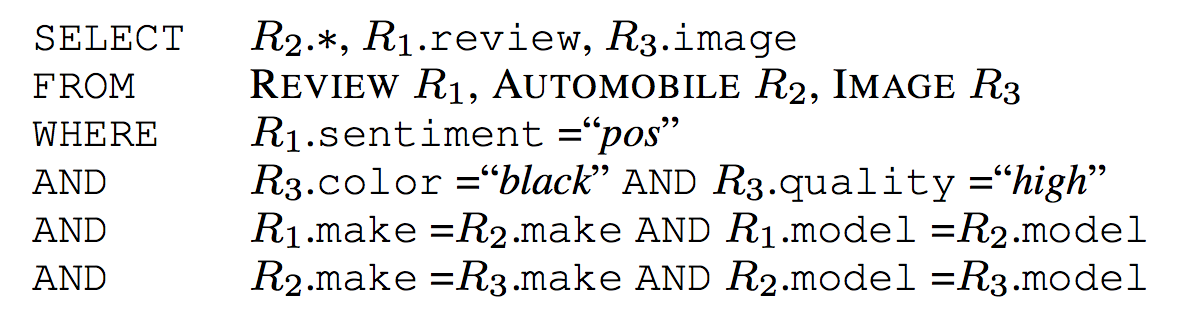

An example query for finding cars with black color, high-quality images and positive reviews is

For a given query, CrowdOp first compiles the query, generates an execution plan, posts human intelligence tasks (HITs) to the crowd according to the plan, collects the answers, handles errors and resolves the inconsistencies in the answers. While CrowdOp improves the usability of crowdsourcing, it is required to to have the capability to optimize and provide a "near optimal" query execution plan for each query. Compared to the query optimization techniques proposed in recent crowdsourcing systems and algorithms, CrowdOp has the following differences in its design principles:

- Supporting cost-based query optimization. CrowdOp adopts cost-based optimization that estimates costs of alternative query plans for evaluating a query and uses the one with the lowest estimated cost (with respect to pre-defined cost functions).

- Optimizing multiple crowdsourcing operators. CrowdOp supports cost-based optimization for all crowdsourcing operators, optimizes the overall cost of all operators involved in a query, and derives the “best” query evaluation plan.

- Tradeoff between monetary cost and latency. CrowdOp incorporates the cost-latency tradeoff into its optimization objectives. It is capable of finding the query plan with low latency given a user-defined budget constraint, which nicely balances the cost and time requirement of users.

System Overview

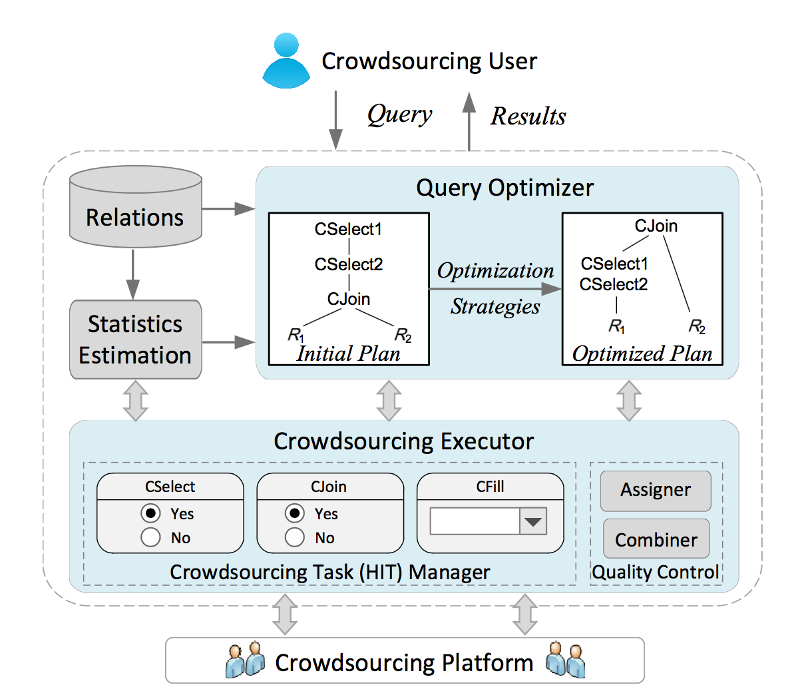

The architecture of CrowdOp is illustrated in the figure below. An SQL query is issued by a crowdsourcing user and is firstly processed by Query Optimizer, which parses the query and produces an optimized query plan. The query plan is then executed by Crowdsourcing Executor to generate human intelligence tasks (or HITs) and publish these HITs on crowdsourcing platforms, such as Amazon Mechanical Turk (AMT). Based on the HIT answers collected from the crowd, Crowdsourcing Executor evaluates the query and returns the obtained results to the user.

- Query optimizer. CrowdOp optimizes crowd-powered operators that abstract specific types of operations that can be processed by humans, e.g., Crowd-Select, Crowd-Join, and CrowdFill.

- Crowdsourcing executor. CrowdOp employs a Crowdsourcing Task (HIT) Manager to generate the HITs to be published on crowd- sourcing platforms. The HIT manager carefully designs interfaces and is also responsible for HIT-level optimization, e.g., batching.

Publication(s)

- CrowdOp: Query Optimization for Declarative Crowdsourcing Systems

J. Fan, M. Zhang, S. Kok, M. Lu, B.C. Ooi IEEE Transactions on Knowledge and Data Engineering, 2015.

Source Code

- You can download the source code from GitHub