- Approaches

- WP1:Binary Analysis

- WP2:Binary Hardening

- WP3:Formal Verification

- WP4:Sensitive Data Protection

Approaches

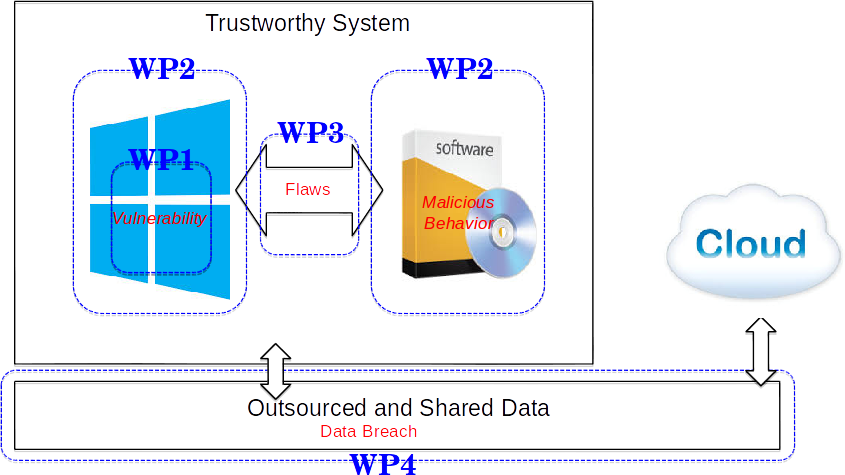

To build trustworthy systems out of COTS components, we consider three sub-problems: (a) ensuring the safe functioning of each sub-component, (b) safe inter-connection and interfacing of sub-components, and (c) a strong second line of defence for storing outsourced sensitive data and processing on it obliviously in COTS software binaries. We describe our proposed work in the following, via four work packages. Work packages 1 and 2 correspond to intra-component analysis and hardening, work package 3 corresponds to inter-component verification, and work package 4 refers to the protection of sensitive data which could be processed by un-trusted or partially trusted software components. The overall relationship and connection among the four work-packages are shown in the following.

The final deliverables of the project not only yields a trustworthy COTS-integrated platform, but also several point technologies which can help address the needs of government agencies. These point technologies are directly useful not only for trustworthy system construction, but also post-mortem activities such as incident response e.g. applying binary analysis on known malware.

To find out the planned research in each work-package, click the links for the individual work-packages on the top of this page.

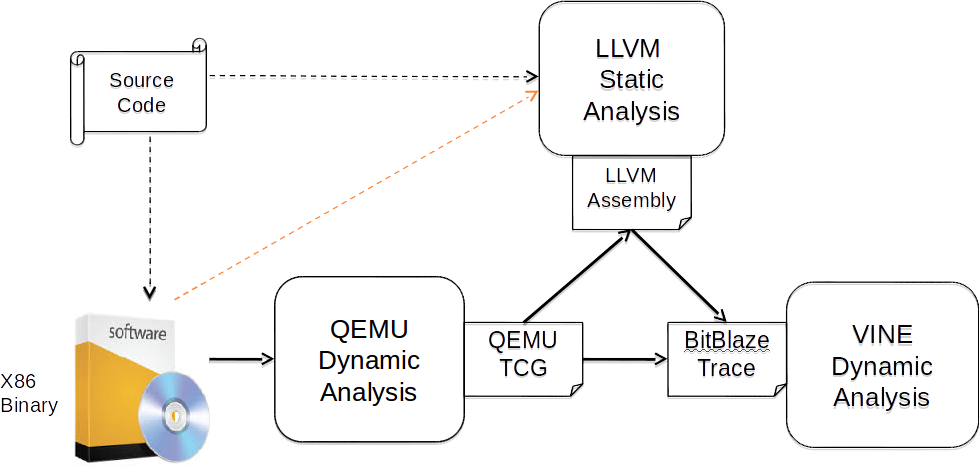

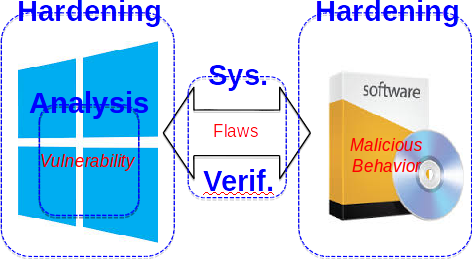

Work Package 1 (WP1): Binary Analysis

Recent advances in binary analysis and white-box fuzzing have ameliorated the path explosion problem for test generation in binaries. Given that finding crash test cases is now becoming easier, we focus our effort on the problem of clustering and determining the root-cause of a crash. Note that a crash does not necessarily imply a critical security problem (e.g. a malicious code execution, data theft) but still affects robustness --- in fact, only 1% of the crashes may be seriously exploitable. The main challenge is that security testers receive millions of crash reports an year, which is far more than what a large human development team can process and handle. This leads to security flaws being left un-diagnosed with longer than necessary time-to-patch. To address this, we aim to develop infrastructure to (a) to cluster crash-reproducing tests into semantically related classes, (b) separate out bug classes that are security relevant, (c) devise methods to distil out the “essence” of an error cause (called the root-cause) which can aid security analysts to zero in quickly on the code to be patched, and finally (d) generate the “simple” fixes automatically via semantic analysis. Our approach will focus on deep binary analysis which will combine techniques from software testing and analysis research in novel ways to address these challenges. Our proposed technologies can be effectively used for vulnerability detection, explanation and patching. In addition, our core expertise in static and dynamic analysis can also be leveraged to help address the needs of the government agencies in related activities, such as analysis-based reverse engineering methods to help understand binaries.

Deliverable Tool-chain

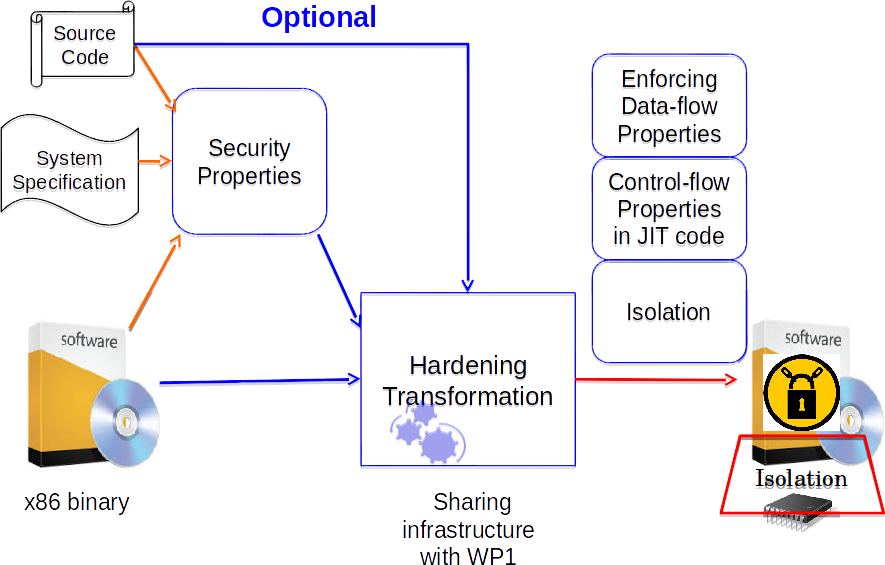

Work Package 2 (WP2): Binary Hardening

We aim to investigate techniques to address the limitations mentioned above. The core of this work package is a framework that can be used to enforce a wide range of security properties, such as control-flow property, data-flow property, and constraints on critical program data. The framework will weave users’ security requirements to generate hardened binaries with only minor human effort needed. To facilitate specification of security properties, we will also investigate solutions to extract security property from a wide range of sources, such as high-level specifications, source code, and binaries. We will develop new supporting mechanisms to enforce new security properties, where we focus on usability while having low overheads. The proposed solutions also benefit from our past expertise in binary analysis.

Work Package 3 (WP3): Formal Verification

In this work package, we will focus on the interfaces across components. We generally term the interaction between components as protocols, e.g., the communication among a browser, a web Single-Sign-On (SSO) server, and a website using the SSO service. As another example, in a trusted system implementation, the API calls made between the trusted system and the trusted platform module (TPM) is another type of protocol. Current solutions for checking the correctness of security protocols are focused on untimed protocols. Considering time in security protocols is necessary in multiple scenarios that need to guarantee the freshness of data / messages received over the network. We plan to apply formal methods to verify authentication properties and to find vulnerabilities in timed and un-timed security protocols. The PAT model checker will be extended to handle both timed and untimed security protocols. For reasoning about specific protocols suggested by agencies such as DSTA – we will augment PAT with limited theorem proving. This can be achieved by a master-slave proof architecture where the PAT model checker is invoked by a theorem prover. The framework developed in WP3 can also be used to reason about the correctness of inter-component communication after the hardening transformations in WP2, at a high level.

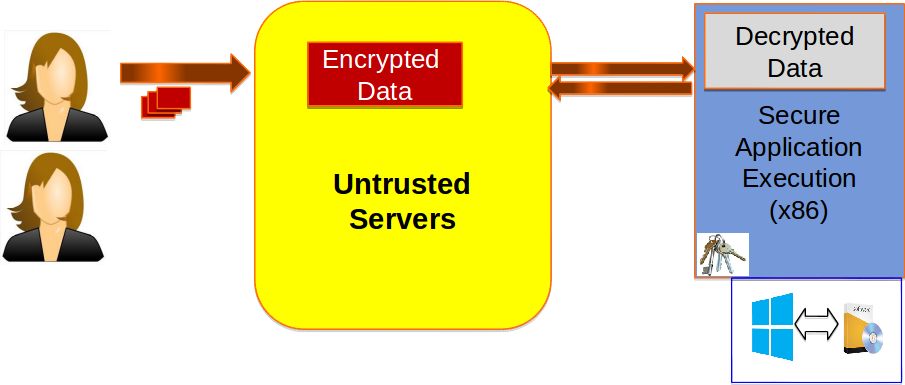

Work Package 4 (WP4): Sensitive Data Protection, at-rest and in-computation

In modern COTS-integrated backend infrastructure, data is routinely outsourced to a public or third-party cloud service (e.g. Salesforce, Dropbox). We focus on ensuring the privacy and confidentiality of such sensitive user-owned data (e.g. enterprise documents) in the final system that combines COTS components with external third- party services. Sensitive data-handling applications, such as enterprise email, allow users share large amounts of personal and security-sensitive data such as documents and presentations via text and email attachments. The privacy of such mission- or business-critical data is paramount when hosted on a third-party cloud service. Such data is often shared with thousands of users in large organizations or when cross-organizational collaboration is needed (e.g. via mail groups). At the same time, email service providers need the ability to compute on such data --- for example, to permit searching, sorting, archival, spam detection and virus scanning -- - on the server-side. For such usage, data protection should offer the following: (a) Encrypted sharing for large data across a large number of dynamic users (b) Ease-of-revocation: For Secure deletion or email recall, to dynamically resize shared recipient sets, or to revoke access to lost/stolen devices (c) Ability to allow remote computation on encrypted data (d) Cost-efficient network retrieval to/from client’s device. We proceed to achieve these goals in this work-package.