Algorithms & Theory

At the heart of all computer programs lie the mathematical foundations of computer science – theory and algorithms.

We study these theoretical concepts to understand the fundamental capabilities and limitations of the computational tools we work with, and we use these insights to develop better software and algorithms.

What We Do

Sub-Areas

- Algorithmic Game Theory

- Combinatorial Algorithms

- Complexity Theory

- Constraint Satisfaction

- Cryptography

- Distributed Algorithms

- Fault Tolerance & Robustness

- Graph Theory & Algorithms

- Information Theory & Coding

- Learning Theory

- Logic

- Networking

- Optimisation

- Privacy & Security

- Quantum Information & Algorithms

- Transportation & Logistics Algorithms

Our Research Projects

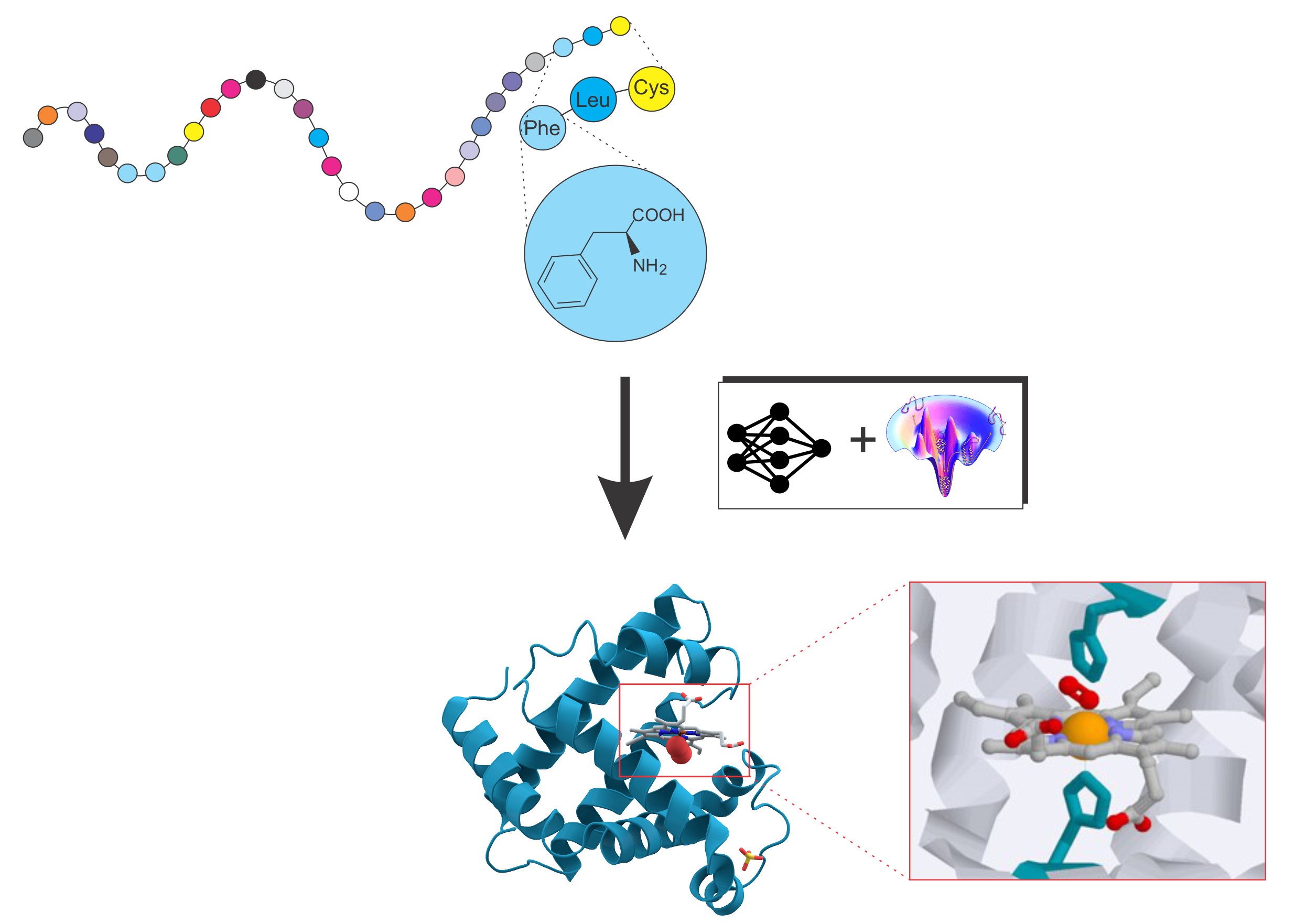

AI for Science

This research develops foundational artificial intelligence methods to advance scientific discovery. We study how modern machine learning approaches, such as deep learning, foundation models, and generative AI, can be tightly integrated with domain knowledge from physics, biology, and medicine to accurately model biomolecular structures, interactions, and functions. This research direction is supported by national Foundational Research Capabilities (FRC) initiatives in Singapore and aims to build long-term, AI-driven platforms for scientific discovery across disciplines.

Protein Folding and Protein Structure Prediction

Deciphering the structure and function of proteins is central to modern biology and medicine. We develop computational frameworks that integrate advanced AI methods with physics-based force fields to accurately model protein structures and interactions. A central goal is to elucidate the fundamental relationships linking protein sequence, structure, dynamics, and function.

Breaking the Box - On Security of Cryptographic Devices

This project seeks to strengthen the security of cryptographic systems against both active and passive adversaries, in particular against tampering and side-channel attacks. By addressing foundational problems such as constructing non-malleable extractors, codes, and secret-sharing schemes, the research aims to develop robust cryptographic protocols that remain secure under a wide range of physical and mathematical attacks.

Computational Hardness of Lattice Problems and Implications

This project explores the computational hardness of lattice problems. It aims to develop optimal algorithms, establish near-tight complexity bounds, and investigate the barriers to proving lower bounds. It also seeks to introduce new fine-grained hardness assumptions with significant implications for cryptography and computational complexity, and to extend the scope of fine-grained hardness results.

A Hybrid Approach to Automatic Programming

This project combines traditional program analysis, neural machine translation, and human guidance to improve the accuracy and generalization of automated programming, with the goal of making coding accessible to non-experts.

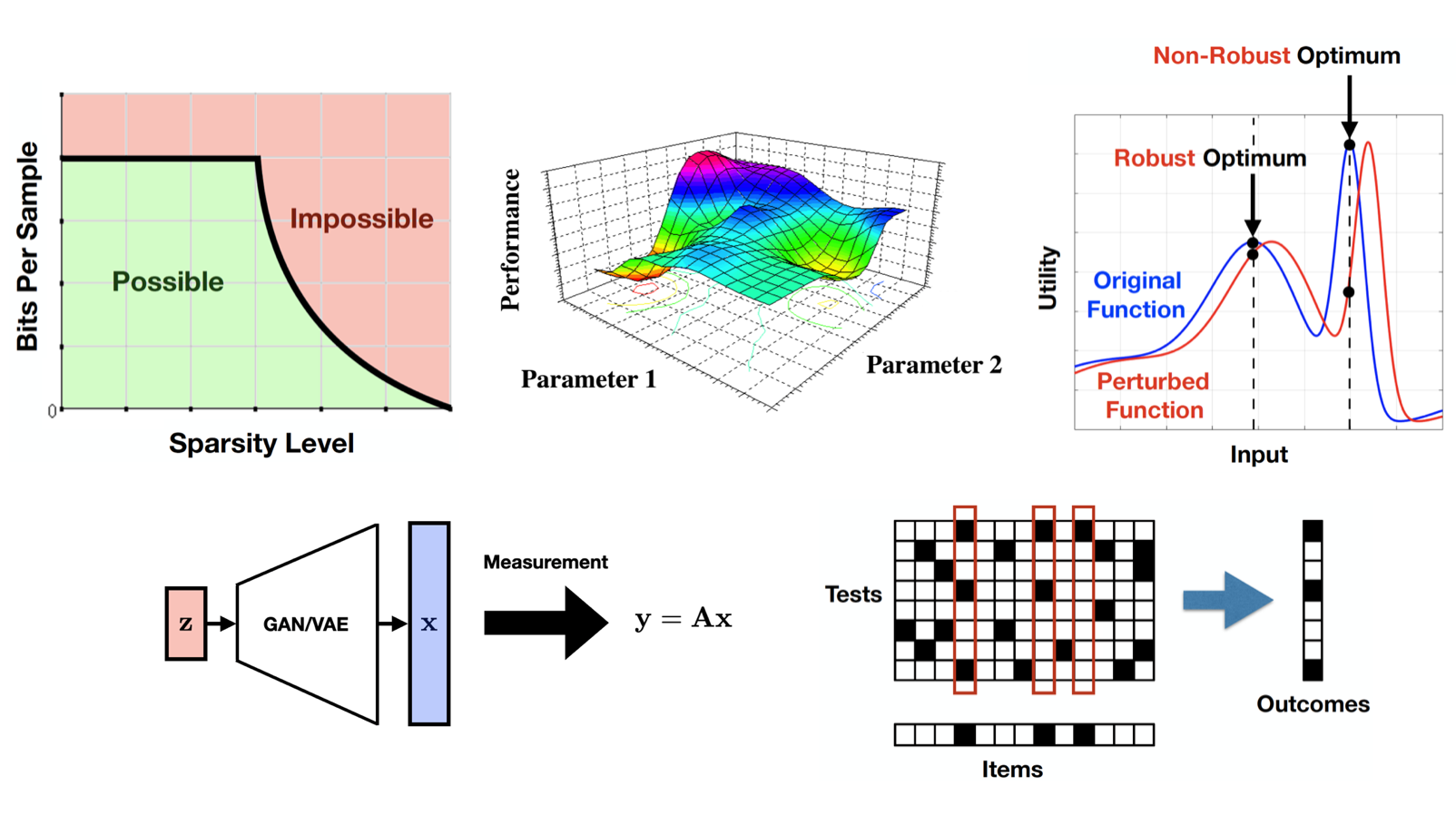

Safety and Reliability in Black-Box Optimization

This project seeks to improve safety, reliability, and robustness in black-box optimization by exploring new function structures and addressing the limitations of existing approaches. It also extends decision-making frameworks to grey-box settings and multi-agent learning, using a methodology that combines theoretical analysis with algorithm development.

- Optimisation

Fault-tolerant Graph Structures: Efficient Constructions and Optimality

This project studies how to solve graph problems reliably in the presence of network failures, by developing efficient fault-tolerant constructions and establishing the optimality of the resulting graph structures. The work contributes to the algorithmic understanding of these problems and to graph theory, and has real-world applications.

Computational Hardness Assumptions and the Foundations of Cryptography

This program seeks to broaden and diversify the foundations of cryptography by identifying new plausible computational hardness assumptions that can be used to construct cryptosystems. Our current approach is to study and construct "fine-grained" cryptographic primitives based on the conjectured hardness of various well-studied algorithmic problems.

Our Research Groups

Deep Learning Lab

Our lab aims to contribute to the development of deep learning methods and the understanding of intelligence.

Information Theory and Statistical Learning Group

Our group conducts research at the intersection of information theory, machine learning, and high-dimensional statistics. Ongoing areas of interest include the information-theoretic limits of learning, adaptive decision-making under uncertainty, scalable algorithms for large-scale inference and learning, and robustness in machine learning.

- Information Theory & Coding, Learning Theory, Optimisation