About Myself

I am currently an Associate Professor at the Department of Computer Science at the National University of Singapore (NUS), where I head the Computer Vision and Robotic Perception (CVRP) Laboratory. I am also affiliated with the NUS Graduate School for Integrative Sciences and Engineering (NGS-ISEP), and the NUS Institute of Data Science (NUS-IDS). Prior to NUS, I was a researcher at Mitsubishi Electric Research Laboratories (MERL), USA. I did my PhD in Computer Science at ETH Zurich under the supervision of Prof. Marc Pollefeys, and I received my B.Eng with first class honors and M.Eng degrees from the Department of Mechanical Engineering at NUS. Before my PhD, I worked at DSO National Laboratories in Singapore as a Member of Technical Staff. I am serving as an Associate Editor for TPAMI. I have served or will serve as an Area Chair for CVPR, ICCV, ECCV, WACV, BMVC, 3DV, ICLR, NeurIPS, AAAI and IJCAI, and was part of the organizing committee as one of the Program Chairs for 3DV 2022 and the Demo Chair for CVPR 2023. I have organized 3DV 2025 in Singapore as one of the General Chairs. I am also a recipient of the NRF Investigatorship, Class of 2024.

My research interests are in 3D Computer Vision, with the following focus areas. 1) Computer Vision: 3D digital modeling; Point Cloud Processing; 3D Scene Understanding; 3D Human/Animal pose and shape estimation; Multiview Geometry. 2) Robotics: Embodied AI, Mobile Robots, Manipulators. 3) Machine Learning: Data efficient learning; Robust and long-term learning; Multimodality learning.

Several PhD (Aug'26 and Jan'27) positions on 3D Computer Vision and Embodied AI/Robotics are available. Applicants from diverse nationalities are encouraged to apply.

I am reachable at:

Address: Computing 1, 13 Computing Drive, Singapore 117417

Email: gimhee.lee@nus(dot)edu(dot)sg, Office Tel: +65-6516-2214

Office Location: COM2-03-54

News and Updates

- 31.01.2026: One paper accepted at ICRA 2026!

- 26.01.2026: We have two papers accepted at ICLR 2026!

- 19.01.2026: One paper accepted at RA-L 2026!

- 22.12.2025: I'll be an area chair for ECCV 2026.

- 02.12.2025: I'll be an area chair for ICML 2026.

- 08.11.2025: We have 5 papers accepted at AAAI 2026!

- 05.11.2025: We have 2 papers accepted at 3DV 2026!

- 19.09.2025: We have 9 papers accepted at NeurIPS 2025!

- 16.08.2025: I'll be an area chair for ICLR 2026.

- 29.07.2025: I'll be a senior area chair for CVPR 2026.

- 26.07.2025: I'll be an area chair for AAAI 2026.

- 16.07.2025: One paper accepted at BMVC 2025!

- 27.06.2025: We have 7 papers accepted at ICCV 2025!

- 19.06.2025: I'll be an area chair for WACV 2025.

- 16.05.2025: One paper accepted at ACL 2025!

- 11.05.2025: I'll be serving as an associate editor for TPAMI.

- 01.05.2025: I'll be an area chair for BMVC 2025.

- 27.02.2025: We have 8 papers accepted at CVPR 2025 (7 posters, 1 highlight)!

- 18.02.2025: One paper accepted at IJCV 2025!

- 17.02.2025: I'll be an area chair for NeurIPS 2025.

- 27.01.2025: One paper accepted at ICRA 2025!

- 23.01.2025: We have 6 papers accepted at ICLR 2025!

- 03.12.2024: I'll be an area chair for ICCV 2025.

- 06.11.2024: We have two papers accepted at 3DV 2025!

- 29.10.2024: One paper accepted at WACV 2025!

- 26.09.2024: We have 6 papers accepted at NeurIPS 2024 (5 posters, 1 spotlight)!

- 14.09.2024: I'll be a lead area chair for CVPR 2025.

- 08.08.2024: I'll be an area chair for ICLR 2025.

- 20.07.2024: We have 2 papers accepted at BMVC 2024!

- 01.07.2024: We have 5 papers accepted at ECCV 2024!

- 02.05.2024: I'll be an area chair for BMVC 2024.

- 27.03.2024: I'll be an area chair for NeurIPS 2024.

- 22.03.2024: I'll be organizing 3DV 2025 as one of the General Chairs!

- 05.03.2024: One paper accepted at IJCV 2024!

- 27.02.2024: We have 5 papers accepted at CVPR 2024!

- 15.12.2023: I have been awarded the NRF Investigatorship 2024!

- 09.12.2023: One paper accepted at AAAI 2024!

- 03.12.2023: My student Yu Chen has been awarded the Google PhD Fellowship!

- 11.11.2023: I'll be an area chair for IJCAI 2024.

- 06.11.2023: I'll be a lead area chair for ECCV 2024.

- 24.10.2023: We have one paper accepted at WACV 2024!

- 16.10.2023: We have 2 papers accepted at 3DV 2024 (1 poster, 1 oral)!

- 22.09.2023: We have 2 papers accepted at NeurIPS 2023!

- 15.09.2023: We have one paper accepted at IJCV 2023!

- 25.08.2023: We have 2 papers accepted at BMVC 2023!

- 15.08.2023: I'll be an area chair for ICLR 2024.

- 13.08.2023: I'll be an area chair for CVPR 2024.

- 14.07.2023: We have 8 papers accepted at ICCV 2023!

- 22.06.2023: We have one paper accepted at IROS 2023!

- 24.05.2023: We organized the first Singapore Vision Day (SVD)!

- 21.04.2023: I received the Faculty Teaching Excellence Award for AY 2021/22!

- 08.03.2023: I'll be an area chair for NeurIPS 2023.

- 28.02.2023: We have 5 papers accepted at CVPR 2023!

- 17.01.2023: We have one paper accepted at ICRA 2023 and one paper accepted at RA-L 2023!

- 01.12.2022: I'll be an area chair for IJCAI 2023.

- 20.11.2022: One paper accepted at AAAI 2023!

- 02.11.2022: I'll be an area chair for ICCV 2023.

- 10.08.2022: I'll be an area chair for ICLR 2023.

- 04.07.2022: We have 5 papers accepted at ECCV 2022!

- 02.03.2022: I'll be the program chair for 3DV 2022.

- 02.03.2022: Two papers accepted at CVPR 2022!

- 16.02.2022: I'll be an area chair for ECCV 2022.

- 20.01.2022: I received tenure and have been promoted to Associate Professor!

- 01.12.2021: One paper accepted at AAAI 2022!

- 03.10.2021: One paper accepted at 3DV 2021!

- 29.09.2021: Two papers accepted at NeurIPS 2021!

- 03.08.2021: My student Chen Li received the Research Achievement Award from SoC!

- 23.07.2021: Two papers accepted at ICCV 2021!

- 01.07.2021: One paper accepted at IROS 2021!

- 29.06.2021: I'll be an area chair for WACV 2022.

- 17.05.2021: I'll be an area chair for BMVC 2021.

- 08.05.2021: One paper accepted at ICML 2021!

- 01.03.2021: We have 4 papers accepted at CVPR 2021 (1 oral, 3 posters)!

- 28.02.2021: We have 2 papers accepted at ICRA 2021!

- 16.12.2020: Check out this interview with me by AI Singapore!

- 17.11.2020: I'll be an area chair for ICCV 2021.

- 04.08.2020: My student Zi Jian Yew received the Research Achievement Award from SoC!

- 30.07.2020: One paper accepted at BMVC 2020!

- 27.07.2020: I'll be an area chair for 3DV 2020.

- 03.07.2020: We have 4 papers accepted at ECCV 2020 (1 spotlight, 3 posters)!

- 01.07.2020: One paper accepted at IROS 2020!

- 22.06.2020: One paper accepted at ICPR 2020!

- 17.03.2020: I'll be an area chair for BMVC 2020.

- 28.02.2020: I received the Faculty Teaching Excellence Award for AY 2018/19!

- 24.02.2020: We have 4 papers accepted at CVPR 2020 (1 oral, 3 posters)!

- 23.01.2020: I'll be an area chair for CVPR 2021.

- 22.01.2020: We have 2 papers accepted at ICRA 2020!

- 20.12.2019: One paper accepted at ICLR 2020!

- 19.12.2019: Check out this article featuring our work on cross-view localization!

- 09.09.2019: Check out this article featuring my ex-FYP student Yew Siang Tang's research work!

- 23.07.2019: We have 2 papers accepted at ICCV 2019!

- 01.07.2019: One paper accepted at BMVC 2019!

- 21.06.2019: I'll be the Demo Chair for CVPR 2023.

- 20.06.2019: One paper accepted at IROS 2019!

- 01.06.2019: One paper accepted at IJCV 2019!

- 25.02.2019: We have 2 papers accepted at CVPR 2019!

- 15.02.2019: I received the Faculty Teaching Excellence Award for AY 2017/18!

- 26.01.2019: We have 3 papers accepted at ICRA 2019!

- 03.07.2018: One paper accepted at ECCV 2018!

- 19.02.2018: We have 6 papers accepted at CVPR 2018 (1 spotlight, 5 posters)!

- 02.09.2017: One paper accepted at the International Conference on 3D Vision 2017!

- 02.08.2017: One journal paper has been accepted at the Journal of Image and Visual Computing 2017!

- 17.07.2017: One paper accepted at ICCV 2017!

- 15.06.2017: One paper accepted at IROS 2017!

- 21.12.2016: One post-doctoral research fellow position available immediately. See Openings for more details.

- 11.07.2016: One paper accepted at ECCV 2016!

- 01.03.2016: One paper accepted at CVPR 2016!

- 03.08.2015: I join the National University of Singapore as an Assistant Professor.

- 16.07.2015: I left my research position at MERL.

- 07.06.2015: I am attending CVPR 2015 in Boston MA, USA to present our paper.

- 22.06.2014: I am attending CVPR 2014 in Columbus Ohio, USA to present our paper.

- 19.06.2014: I left ETH Zurich to take up a new position as a researcher at MERL for a year.

- 31.05.2014: I am attending ICRA 2014 in Hong Kong, China to present our paper.

- 19.03.2014: I successfully defended my PhD thesis "Visual Mapping and Pose Estimation for Self-Driving Cars"!

Current Members / Visitors

Past Members -- Research Fellows and PhD Students

- 06.2017 -- 04.2018: Dr. Ho Man Rang Nguyen, Research Fellow, Computer Science. Current: VinAI

- 08.2014 -- 11.2018: Dr. Jiaxin Li, PhD Student, ECE | Research Fellow, Computer Science. Current: Light Robotics

- 08.2014 -- 11.2018: Dr. Bingbing Zhuang, PhD Student, ECE. Current: Apple

- 08.2014 -- 02.2019: Dr. Mengdan Feng, PhD Student, Mechanical Engineering. Current: Huawei

- 01.2015 -- 01.2019: Dr. Liang Pan, PhD Student, Mechanical Engineering. Current: Shanghai AI Lab

- 04.2019 -- 11.2019: Dr. Xun Xu, Research Fellow, Computer Science. Current: A*STAR

- 08.2015 -- 04.2020: Dr. Sixing Hu, PhD Student, Computer Science. Current: SmartMore

- 08.2018 -- 08.2020: Dr. Ziquan Lan, Research Fellow, Computer Science. Current: Startup

- 08.2016 -- 08.2020: Dr. Xiaogang Wang, PhD Student, Mechanical Engineering. Current: Amazon

- 08.2016 -- 08.2021: Dr. Meng Tian, PhD Student, Mechanical Engineering. Current: Yinwang Intelligent Technology Co, China

- 08.2017 -- 01.2022: Dr. Zi Jian Yew, PhD Student, Computer Science. Current: Aicadium, Singapore

- 08.2015 -- 12.2022: Dr. Jiahao Lin, PhD Student | Research Fellow, Computer Science. Current: Black Sesame Technologies

- 03.2021 -- 08.2023: Dr. Conghui Hu, Research Fellow, Computer Science. Current: NUS

- 03.2017 -- 05.2024: Dr. Chen Li, Research Assistant | PhD Student | Research Fellow, Computer Science. Current: A*STAR

- 01.2020 -- 08.2024: Dr. Can Zhang, PhD Student, Computer Science. Current: NUS

- 09.2023 -- 11.2024: Dr. Tao Hu, Research Fellow, Computer Science. Current: Bytedance

- 08.2020 -- 03.2025: Dr. Yating Xu, PhD Student, Computer Science. Current: Huawei

- 06.2023 -- 03.2025: Dr. Tianxin Huang, Research Fellow, Computer Science. Current: HKU

- 08.2021 -- 05.2025: Dr. Yuyang Zhao, PhD Student, Computer Science. Current: Nvidia

- 01.2021 -- 06.2025: Dr. Weng Fei Low, PhD Student, NUS IDS. Current: Waymo

- 01.2021 -- 09.2025: Dr. Jinnan Chen, PhD Student, Computer Science. Current: On the Job Market

- 01.2022 -- 10.2025: Dr. Yu Chen, PhD Student, Computer Science. Current: Huawei, UK

- 08.2021 -- Awaiting Defense: Mengqi Guo, PhD Student, Computer Science. Current: Huawei

- 08.2021 -- Awaiting Defense: Jie Long Lee, PhD Student, Computer Science. Current: On the Job Market

- 08.2021 -- Awaiting Defense: Zhiwen Yan, PhD Student, Computer Science. Current: Tencent

Past Visiting Students

- 04.2016 -- 10.2016: Dr. Zhengning Li, PhD student, Tongji University

- 07.2017 -- 09.2017: Dr. Dawei Sun, Undergrad, Tsinghua University

- 07.2017 -- 09.2017: Dr. Xiaojian Ma, Undergrad, Tsinghua University

- 07.2018 -- 09.2018: Yirou Guo, Undergrad, Beihang University

- 07.2018 -- 09.2018: Shanyi Zhang, Undergrad, Beihang University

- 07.2018 -- 03.2019: Haleh Mohammadian, Master Student, Sharif University of Technology

- 01.2019 -- 05.2019: Yaxuan Li, Undergrad, Shandong University

- 08.2018 -- 06.2020: Dr. Bee-ying Sae-Ang, PhD student, King Mongkut's University of Technology Thonburi

- 12.2019 -- 12.2020: Dr. Beibei Jin, PhD student, Chinese Academy of Sciences

- 01.2021 -- 03.2023: Dr. Na Dong, PhD student, Harbin Institute of Technology

- 01.2023 -- 07.2023: Dr. Wentao Jiang, PhD student, Beihang University

- 10.2023 -- 03.2024: Dr. Fangyin Wei, PhD student, Princeton University

- 09.2023 -- 09.2024: Dr. Buzhen Huang, PhD student, Southeast University

- 09.2023 -- 11.2024: Bo Xu, PhD student, Wuhan University

- 04.2024 -- 03.2025: Dr. Zhexiong Wan, PhD student, Northwestern Poly Uni

- 10.2024 -- 03.2025: Ruida Zhang, PhD student, Tsinghua University

- 02.2025 -- 07.2025: Xiyue Guo, PhD student, Zhejiang University

- 09.2023 -- 08.2025: Qing Mao, PhD student, Northwestern Poly Uni

Academic and Teaching Activities

Reviewer/Area Chair/Organizing Committee

- ICCV: 2017, 2019, 2021 (Area Chair), 2023 (Area Chair), 2025 (Area Chair)

- ECCV: 2018, 2020, 2022 (Area Chair), 2024 (Lead Area Chair), 2026 (Area Chair)

- CVPR: 2017, 2018, 2019, 2020, 2021 (Area Chair), 2022, 2023 (Demo Chair), 2024 (Area Chair), 2025 (Lead Area Chair), 2026 (Senior Area Chair)

- BMVC: 2019, 2020 (Area Chair), 2021, 2022 (Area Chair), 2023 (Area Chair), 2024 (Area Chair), 2025 (Area Chair)

- WACV: 2014, 2015, 2017, 2018, 2019, 2020, 2021, 2022 (Area Chair), 2023, 2025 (Area Chair)

- ACCV: 2018, 2020, 2022 (Award Committee)

- 3DV: 2017, 2018, 2019, 2020 (Area Chair), 2021, 2022 (Program Chair), 2024, 2025 (General Chair)

- ICML: 2026 (Area Chair)

- SIGGRAPH: 2024

- ICLR: 2023 (Area Chair), 2024 (Area Chair), 2025 (Area Chair), 2026 (Area Chair)

- NeurIPS: 2020, 2021, 2022, 2023 (Area Chair), 2024 (Area Chair), 2025 (Area Chair)

- AAAI: 2024 (Area Chair), 2026 (Area Chair)

- IJCAI: 2023 (Area Chair), 2024 (Area Chair)

- IROS: 2012, 2013, 2016, 2017, 2018, 2019, 2020

- ICRA: 2011, 2013, 2014, 2015, 2016, 2017, 2019, 2020, 2021

- CORL: 2017, 2019

- TPAMI: 2017, 2018, 2019

- IET Computer Vision: 2016

- Elsevier Journal of Robotics and Autonomous Systems (RAS): 2015

- IEEE Robotics & Automation Magazine (RAM): 2013

- Autonomous Robots (AURO): 2011

- Elsevier Journal of Computer Vision and Image Understanding (CVIU): 2013, 2016

CVPR 2014 Doctoral Consortium

I was selected for the CVPR 2014 Doctoral Consortium. My mentor was Prof. Kostas Daniilidis from UPenn. Here's the poster I presented to him.

Invited/Visiting Talks

- 01.2026: Invited talk at Neuromorphic Intelligence: From Algorithm to System at AAAI 2026, Singapore

- 12.2025: Westlake University, Hangzhou China

- 10.2025: Invited talk at Advancements for Intelligent Robotics in 4D Scenes at IROS 2025

- 10.2025: Invited talk at 2nd Workshop on Scalable 3D Scene Generation and Geometric Scene Understanding at ICCV 2025, USA

- 06.2025: Korea Advanced Institute of Science & Technology (KAIST), Daejeon, South Korea

- 05.2025: Seoul National University, Seoul, South Korea

- 05.2025: Yonsei University, Seoul, South Korea

- 04.2025: China3DV 2025, Beijing China

- 12.2024: Nanjing University of Science and Technology, Nanjing China

- 11.2024: Changan Automobile, Chongqing China

- 05.2024: 1st Singapore Media Technology Workshop, Huawei, Singapore

- 12.2023: Shanghai Tech, Shanghai China

- 12.2023: Zhejiang University, Hangzhou China

- 12.2023: Huawei Noah's Ark Lab, Shenzhen China

- 12.2023: Hong Kong University of Science and Technology, Guangzhou China

- 09.2023: Robotics Perception Workshop, SUTD, Singapore

- 06.2022: Invited talk at Workshop on ScanNet Indoor Scene Understanding Challenge at CVPR 2022, USA

- 10.2021: Invited talk at Workshop on Interactive Labeling and Data Augmentation for Vision at ICCV 2021, Virtual

- 01.2021: Invited talk at 2nd Workshop on VASBSD at WACV 2020, Virtual

- 10.2020: 3DGV virtual seminar series on Geometry Processing and 3D Computer Vision

- 10.2019: Huawei Research Center, Tokyo Japan

- 09.2019: DSO AI Innovation Hub, Singapore

- 09.2019: Microsoft Research Lab Cambridge, UK

- 09.2019: Imperial College London, UK

- 07.2019: AI Summer School, Singapore

- 06.2019: Massachusetts Institute of Technology (MIT), USA

- 01.2019: Korea Advanced Institute of Science & Technology (KAIST), Daejeon, South Korea

- 09.2018: University of Zurich, Switzerland

- 10.2016: TU Graz, Institute of Computer Graphics and Vision, Austria

- 09.2014: NEC Laboratories, Cupertino USA

- 12.2013: DSO National Laboratories, Singapore

Teaching Assistant / Lab Instructor

- Spring semesters 2012 and 2013 (ETH): TA for "Informatik I D-MAVT" (Introductory C++ for Mechanical Engineers)

- Fall semesters 2011 and 2012 (ETH): TA for "Computer Vision"

- Spring semester 2011 (ETH): TA for "3D Photography"

- Spring and Fall semesters 2010 (ETH): TA for "Visual Computing"

- Jan-May 2007 (NUS): Lab instructor for "Frequency Response" (Lab for Control Systems)

- Jan-May 2007 (NUS): TA for "Special Advanced Topics in Mechatronics II" (Probabilistic Robotics)

- Jan-May 2006 (NUS): Lab instructor for Speed/Position Control (Lab for Control Systems)

Lecturer

- AY2017/18 -- AY2023/24 Semester 1 (NUS): CS5340 - Uncertainty Modeling in AI

- AY2019/20 -- AY2022/23 Semester 2 (NUS): CS5477/CS4277 - 3D Computer Vision

- AY2018/19 Semester 2 (NUS): CS6208 - Topics in AI: Computer Vision for Self-Driving Cars

- AY2017/18 Semester 2 (NUS): CS6212 - Topics in Media: 3D Computer Vision

- AY2016/17 Semester 1 (NUS): ME5405 - Machine Vision Part II

- AY2015/16, AY2016/17 Semester 2 (NUS): ME3241/E - Microprocessor Part I

- AY2015/16, AY2016/17 Semester 2 (NUS): ME6402 / ME6701 - Topics in Mechatronics II (Deep Learning for Computer Vision)

- Spring Semester 2013 (ETH): Lecture on SfM / SLAM in the "3D Photography" class

- Spring Semester 2014 (ETH): Lecture on "Bundle Adjustment and SLAM" in the "3D Photography" class

Publications

Peer-Reviewed Conference / Journal Papers

2026

- Zilin Fang, Anxing Xiao, David Hsu, Gim Hee Lee, From Obstacles to Etiquette: Robot Social Navigation with VLM-Informed Path Selection, In IEEE Robotics and Automation Letters (RA-L), 2026.

- Yingzhao Li, Yanyan Li, Shixiong Tian, Yanjie Liu, Lijun Zhao, Gim Hee Lee, MipSLAM: Alias-free Gaussian Splatting SLAM, In IEEE International Conference on Robotics and Automation (ICRA), 2026.

- Seungjun Lee, Gim Hee Lee, Segment Any Events with Language, In International Conference on Learning Representations (ICLR), 2026.

- Hanyu Zhou, Gim Hee Lee, LLaVA-4D: Embedding SpatioTemporal Prompt into LMMs for 4D Scene Understanding, In International Conference on Learning Representations (ICLR), 2026.

- Yanyan Li, Yingzhao Li, Gim Hee Lee, Active3D: Active High-Fidelity 3D Reconstruction via Multi-Level Uncertainty Quantification, In AAAI Conference on Artificial Intelligence, 2026. (Oral paper)

- Yanyan Li, Ze Yang, Keisuke Tateno, Federico Tombari, Liang Zhao, Gim Hee Lee, RiemanLine: Riemannian Manifold Representation of 3D Lines for Factor Graph Optimization, In AAAI Conference on Artificial Intelligence, 2026. (Oral paper)

- Shuo Feng, Zihan Wang, Yuchen Li, Rui Kong, Hengyi Cai, Shuaiqiang Wang, Gim Hee Lee, Piji Li, Shuqiang Jiang, VPN: Visual Prompt Navigation, In AAAI Conference on Artificial Intelligence, 2026.

- Wencan Cheng, Gim Hee Lee, HandMCM: Multi-modal Point Cloud-based Correspondence State Space Model for 3D Hand Pose Estimation, In AAAI Conference on Artificial Intelligence, 2026.

- Kuluhan Binici, Shivam Aggarwal, Cihan Acar, Nam Trung Pham, Karianto Leman, Gim Hee Lee, Tulika Mitra, Condensed Data Expansion Using Model Inversion for Knowledge Distillation, In AAAI Conference on Artificial Intelligence, 2026.

- Tianxin Huang, Gim Hee Lee, Unified Geometry and Color Compression Framework for Point Clouds via Generative Diffusion Priors, In International Conference on 3D Vision (3DV), 2026.

- Zhiwen Yan, Weng Fei Low, Tianxin Huang, Gim Hee Lee, 1000FPS+ Novel View Synthesis from End-to-End Opaque Triangle Optimization, In International Conference on 3D Vision (3DV), 2026.

2025

- Mengqi Guo, Bo Xu, Yanyan Li, Gim Hee Lee, 4D3R: Motion-Aware Neural Reconstruction and Rendering of Dynamic Scenes from Monocular Videos, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Yu Chen, Rolandos Alexandros Potamias, Evangelos Ververas, Jifei Song, Jiankang Deng, Gim Hee Lee, Deep Gaussian from Motion: Exploring 3D Geometric Foundation Models for Gaussian Splatting, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Qing Mao, Tianxin Huang, Yu Zhu, Jinqiu Sun, Yanning Zhang, Gim Hee Lee, PoseCrafter: Extreme Pose Estimation with Hybrid Video Synthesis, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Jie Long Lee, Gim Hee Lee, Distil-E2D: Distilling Image-to-Depth Priors for Event-Based Monocular Depth Estimation, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Haoran Zhou, Gim Hee Lee, Motion4D: Learning 3D-Consistent Motion and Semantics for 4D Scene Understanding, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Zihan Wang, Seungjun Lee, Gim Hee Lee, Dynam3D: Dynamic Layered 3D Tokens Empower VLM for Vision-and-Language Navigation, In Conference on Neural Information Processing Systems (NeurIPS), 2025. (Oral paper)

- Yu Yang, Alan Liang, Jianbiao Mei, Yukai Ma, Yong Liu, Gim Hee Lee, X-Scene: Large-Scale Driving Scene Generation with High Fidelity and Flexible Controllability, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Zhiqiang Yan, Jianhao Jiao, Zhengxue Wang, Gim Hee Lee, Event-Driven Dynamic Scene Depth Completion, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Dongyue Lu, Lingdong Kong, Gim Hee Lee, Camille Simon Chane, Wei Tsang Ooi, FlexEvent: Towards Flexible Event-Frame Object Detection at Varying Operational Frequencies, In Conference on Neural Information Processing Systems (NeurIPS), 2025.

- Chor Boon Tan, Conghui Hu, Gim Hee Lee, CLAIR: CLIP-Aided Weakly Supervised Zero-Shot Cross-Domain Image Retrieval, In British Machine Vision Conference (BMVC), 2025.

- Haoye Dong, Gim Hee Lee, PS-Mamba: Spatial-Temporal Graph Mamba for Pose Sequence Refinement, In International Conference on Computer Vision (ICCV), 2025.

- Zhexiong Wan, Jianqin Luo, Yuchao Dai, Gim Hee Lee, Event-aided Dense and Continuous Point Tracking: Everywhere and Anytime, In International Conference on Computer Vision (ICCV), 2025.

- Ruida Zhang, Chengxi Li, Chenyangguang Zhang, Xingyu Liu, Haili Yuan, Yanyan Li, Xiangyang Ji, Gim Hee Lee, Street Gaussians without 3D Object Tracker, In International Conference on Computer Vision (ICCV), 2025.

- Hanyu Zhou, Haonan Wang, Haoyue Liu, Yuxing Duan, Luxin Yan, Gim Hee Lee, STD-GS: Exploring Frame-Event Interaction for SpatioTemporal-Disentangled Gaussian Splatting to Reconstruct High-Dynamic Scene, In International Conference on Computer Vision (ICCV), 2025.

- Hanyu Zhou, Gim Hee Lee, LLaFEA: Frame-Event Complementary Fusion for Fine-Grained Spatiotemporal Understanding in LMMs, In International Conference on Computer Vision (ICCV), 2025.

- Zhiqiang Yan, Zhengxue Wang, Haoye Dong, Jun Li, Jian Yang, Gim Hee Lee, DuCos: Duality Constrained Depth Super-Resolution via Foundation Model, In International Conference on Computer Vision (ICCV), 2025.

- Qi Xun Yeo, Yanyan Li, Gim Hee Lee, Statistical Confidence Rescoring for Robust 3D Scene Graph Generation from Multi-View Images, In International Conference on Computer Vision (ICCV), 2025.

- Can Zhang, Gim Hee Lee, IAAO: Interactive Affordance Learning for Articulated Objects in 3D Environments, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Seungjun Lee, Gim Hee Lee, DiET-GS: Diffusion Prior and Event Stream-Assisted Motion Deblurring 3D Gaussian Splatting, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Zihan Wang, Gim Hee Lee, g3D-LF: Generalizable 3D-Language Feature Fields for Embodied Tasks, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Bingbing Hu, Yanyan Li, Rui Xie, Bo Xu, Haoye Dong, Junfeng Yao, Gim Hee Lee, Learnable Infinite Taylor Gaussian for Dynamic View Rendering, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Jinnan Chen, Lingting Zhu, Zeyu Hu, Shengju Qian, Yugang Chen, Xin Wang, Gim Hee Lee, MAR-3D: Progressive Masked Auto-regressor for High-Resolution 3D Generation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025. (Highlight paper)

- Dongyue Lu, Lingdong Kong, Tianxin Huang, Gim Hee Lee, GEAL: Generalizable 3D Affordance Learning with Cross-Modal Consistency, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Buzhen Huang, Chen Li, Chongyang Xu, Dongyue Lu, Jinnan Chen, Yangang Wang, Gim Hee Lee, Reconstructing Close Human Interaction with Appearance and Proxemics Reasoning, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Stefan Lionar, Jiabin Liang, Gim Hee Lee, TreeMeshGPT: Artistic Mesh Generation with Autoregressive Tree Sequencing, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Zihan Wang, Yaohui Zhu, Gim Hee Lee, Yachun Fan, NavRAG: Generating User Demand Instructions for Embodied Navigation through Retrieval-Augmented LLM, In Association for Computational Linguistics (ACL), 2025.

- Yixin Fang, Yanyan Li, Kun Qian, Federico Tombari, Yue Wang, Gim Hee Lee, LiLoc: Lifelong Localization using Adaptive Submap Joining and Egocentric Factor Graph, In IEEE International Conference on Robotics and Automation (ICRA), 2025.

- Zhexiong Wan, Bin Fan, Le Hui, Yuchao Dai, Gim Hee Lee, Instance-Level Moving Object Segmentation from a Single Image with Events, In International Journal of Computer Vision (IJCV), 2025.

- Can Zhang, Gim Hee Lee, econSG: Efficient and Multi-view Consistent Open-Vocabulary 3D Semantic Gaussians, In International Conference on Learning Representations (ICLR), 2025.

- Seungjun Lee, Yuyang Zhao, Gim Hee Lee, Segment Any 3D Object with Language, In International Conference on Learning Representations (ICLR), 2025.

- Zilin Fang, David Hsu, Gim Hee Lee, Neuralized Markov Random Field for Interaction-Aware Stochastic Human Trajectory Prediction, In International Conference on Learning Representations (ICLR), 2025.

- Yuyang Zhao, Chung-Ching Lin, Kevin Lin, Zhiwen Yan, Linjie Li, Zhengyuan Yang, Jianfeng Wang, Gim Hee Lee, Lijuan Wang, GenXD: Generating Any 3D and 4D Scenes, In International Conference on Learning Representations (ICLR), 2025.

- Tianxin Huang, Zhiwen Yan, Yuyang Zhao, Gim Hee Lee, ComPC: Completing a 3D Point Cloud with 2D Diffusion Priors, In International Conference on Learning Representations (ICLR), 2025.

- Jinnan Chen, Chen Li, Jianfeng Zhang, Lingting Zhu, Buzhen Huang, Hanlin Chen, Gim Hee Lee, Generalizable Human Gaussians from Single-View Image, In International Conference on Learning Representations (ICLR), 2025.

- Zhiwen Yan, Chen Li, Gim Hee Lee, OD-NeRF: Efficient Training of On-the-Fly Dynamic Neural Radiance Fields, In International Conference on 3D Vision (3DV), 2025.

- Tao Hu, Zhiwen Yan, Xiaogang Yu, Gim Hee Lee, Particle Rendering: Implicitly Aggregating Incident and Outgoing Light Fields for Novel View Synthesis, In International Conference on 3D Vision (3DV), 2025.

- Jinnan Chen, Chen Li, Gim Hee Lee, DiHuR: Diffusion-Guided Generalizable Human Reconstruction, In Winter Conference on Applications of Computer Vision (WACV), 2025.

2024

- Yunsong Wang, Tianxin Huang, Hanlin Chen, Gim Hee Lee, FreeSplat: Generalizable 3D Gaussian Splatting Towards Free View Synthesis of Indoor Scenes, In Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Hanlin Chen, Fangyin Wei, Chen Li, Tianxin Huang, Yunsong Wang, Gim Hee Lee, VCR-GauS: View Consistent Depth-Normal Regularizer for Gaussian Surface Reconstruction, In Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Yating Xu, Chen Li, Gim Hee Lee, MVSDet: Multi-View Indoor 3D Object Detection via Efficient Plane Sweeps, In Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Yu Chen, Gim Hee Lee, DoGaussian: Distributed-Oriented Gaussian Splatting for Large-Scale 3D Reconstruction Via Gaussian Consensus, In Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Tianxin Huang, Zhenyu Zhang, Ying Tai, Gim Hee Lee, Learning to Decouple the Lights for 3D Face Texture Modeling, In Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Tao Hu, Wenhang Ge, Yuyang Zhao, Gim Hee Lee, X-Ray: A Sequential 3D Representation For Generation, In Conference on Neural Information Processing Systems (NeurIPS), 2024. (Spotlight paper)

- Yuyang Zhao, Na Zhao, Gim Hee Lee, Synthetic-to-Real Domain Generalized Semantic Segmentation for 3D Indoor Point Clouds, In British Machine Vision Conference (BMVC), 2024.

- Yunsong Wang, Na Zhao, Gim Hee Lee, Syn-to-Real Unsupervised Domain Adaptation for Indoor 3D Object Detection, In British Machine Vision Conference (BMVC), 2024.

- Weng Fei Low, Gim Hee Lee, Deblur e-NeRF: NeRF from Motion-Blurred Events under High-speed or Low-light Conditions, In European Conference on Computer Vision (ECCV), 2024.

- Yanyan Li, Chenyu Lyu, Yan Di, Guangyao Zhai, Gim Hee Lee, Federico Tombari, GeoGaussian: Geometry-aware Gaussian Splatting for Scene Rendering, In European Conference on Computer Vision (ECCV), 2024.

- Bo Xu, Liu Ziao, Mengqi Guo, Jiancheng Li, Gim Hee Lee, URS-NeRF: Unordered Rolling Shutter Bundle Adjustment for Neural Radiance Fields, In European Conference on Computer Vision (ECCV), 2024.

- Mengqi Guo, Chen Li, Hanlin Chen, Gim Hee Lee, UNIKD: UNcertainty-Filtered Incremental Knowledge Distillation for Neural Implicit Representation, In European Conference on Computer Vision (ECCV), 2024.

- Mengqi Guo, Chen Li, Yuyang Zhao, Gim Hee Lee, TreeSBA: Tree-Transformer for Self-Supervised Sequential Brick Assembly, In European Conference on Computer Vision (ECCV), 2024.

- Na Dong, Yongqiang Zhang, Mingli Ding, Gim Hee Lee, Towards Non Co-occurrence Incremental Object Detection with Unlabeled In-the-wild Data, In International Journal of Computer Vision (IJCV), 2024.

- Buzhen Huang, Chen Li, Chongyang Xu, Liang Pan, Yangang Wang, Gim Hee Lee, Closely Interactive Human Reconstruction with Proxemics and Physics-Guided Adaption, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

- Yunsong Wang, Hanlin Chen, Gim Hee Lee, GOV-NeSF: Generalizable Open-Vocabulary Neural Semantic Fields, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

- Jie Long Lee, Chen Li, Gim Hee Lee, DiSR-NeRF: Diffusion-Guided View-Consistent Super-Resolution NeRF, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

- Zhiwen Yan, Weng Fei Low, Yu Chen, Gim Hee Lee, Multi-Scale 3D Gaussian Splatting for Anti-Aliased Rendering, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

- Khoi Duc Nguyen, Chen Li, Gim Hee Lee, ESCAPE: Encoding Super-keypoints for Category-Agnostic Pose Estimation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

- Yunsong Wang, Na Zhao, Gim Hee Lee, Enhancing Generalizability of Representation Learning for Data-Efficient 3D Scene Understanding, In International Conference on 3D Vision (3DV), 2024. (Oral paper)

- Chen Li, Jiahao Lin, Gim Hee Lee, GHuNeRF: Generalizable Human NeRF from a Monocular Video, In International Conference on 3D Vision (3DV), 2024.

- Na Zhao, Gim Hee Lee, Robust Visual Recognition with Class-Imbalanced Open-World Noisy Data, In AAAI Conference on Artificial Intelligence, 2024.

- Yating Xu, Conghui Hu, Gim Hee Lee, Rethink Cross-modal Fusion in Weakly-Supervised Audio-Visual Video Parsing, In Winter Conference on Applications of Computer Vision (WACV), 2024.

2023

- Hanlin Chen, Chen Li, Mengqi Guo, Zhiwen Yan, Gim Hee Lee, GNeSF: Generalizable Neural Semantic Fields, In Conference on Neural Information Processing Systems (NeurIPS), 2023.

- Stefan Lionar, Xiangyu Xu, Min Lin, Gim Hee Lee, NU-MCC: Multiview Compressive Coding with Neighborhood Decoder and Repulsive UDF, In Conference on Neural Information Processing Systems (NeurIPS), 2023.

- Yuyang Zhao, Zhun Zhong, Na Zhao, Nicu Sebe, Gim Hee Lee, Style-Hallucinated Dual Consistency Learning: A Unified Framework for Visual Domain Generalization, In International Journal of Computer Vision (IJCV), 2023.

- Yating Xu, Conghui Hu, Gim Hee Lee, Motion and Context-Aware Audio-Visual Conditioned Video Prediction, In British Machine Vision Conference (BMVC), 2023.

- Yating Xu, Na Zhao, Gim Hee Lee, Towards Robust Few-shot Point Cloud Semantic Segmentation, In British Machine Vision Conference (BMVC), 2023.

- Weng Fei Low, Gim Hee Lee, Robust e-NeRF: NeRF from Sparse & Noisy Events under Non-Uniform Motion, In International Conference on Computer Vision (ICCV), 2023.

- Yu Chen, Gim Hee Lee, DReg-NeRF: Deep Registration for Neural Radiance Fields, In International Conference on Computer Vision (ICCV), 2023.

- Yating Xu, Conghui Hu, Na Zhao, Gim Hee Lee, Generalized Few-Shot Point Cloud Segmentation Via Geometric Words, In International Conference on Computer Vision (ICCV), 2023.

- Conghui Hu, Can Zhang, Gim Hee Lee, Unsupervised Feature Representation Learning for Domain-generalized Cross-domain Image Retrieval, In International Conference on Computer Vision (ICCV), 2023.

- Wentao Jiang, Hao Xiang, Xinyu Cai, Runsheng Xu, Jiaqi Ma, Yikang Li, Gim Hee Lee, Si Liu, Optimizing the Placement of Roadside LiDARs for Autonomous Driving, In International Conference on Computer Vision (ICCV), 2023.

- Jinnan Chen, Chen Li, Gim Hee Lee, Weakly-supervised 3D Pose Transfer with Keypoints, In International Conference on Computer Vision (ICCV), 2023.

- Na Dong, Yongqiang Zhang, Mingli Ding, Gim Hee Lee, Boosting Long-tailed Object Detection via Step-wise Learning on Smooth-tail Data, In International Conference on Computer Vision (ICCV), 2023.

- Can Zhang, Gim Hee Lee, GeT: Generative Target Structure Debiasing for Domain Adaptation, In International Conference on Computer Vision (ICCV), 2023.

- Zhiwen Yan, Chen Li, Gim Hee Lee, NeRF-DS: Neural Radiance Fields for Dynamic Specular Objects, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

- Yu Chen, Gim Hee Lee, DBARF: Deep Bundle-Adjusting Generalizable Neural Radiance Field, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

- Chen Li, Gim Hee Lee, ScarceNet: Animal Pose Estimation with Scarce Annotation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

- Hao Yang, Lanqing Hong, Aoxue Li, Tianyang Hu, Zhenguo Li, Gim Hee Lee, Liwei Wang, ContraNeRF: Generalizable Neural Radiance Fields for Synthetic-to-Real Novel View Synthesis via Contrastive Learning, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

- Ziwei Yu, Chen Li, Linlin Yang, Xiaoxu Zheng, Michael Bi Mi, Gim Hee Lee, Angela Yao, Overcoming the Tradeoff in Accuracy and Plausibility for 3D Hand Shape Reconstruction, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

- Yu Chen, Zihao Yu, Shu Song, Jianming Li, Tianning Yu, Gim Hee Lee, AdaSfM: From Coarse Global to Fine Incremental Adaptive Structure from Motion, In IEEE International Conference on Robotics and Automation (ICRA), 2023.

- Yunlong Ran, Jing Zeng, Shibo He, Jiming Chen, Lincheng Li, Yingfeng Chen, Gim Hee Lee, Qi Ye, NeurAR: Neural Uncertainty for Autonomous 3D Reconstruction with Implicit Neural Representations, In IEEE Robotics and Automation Letters (RA-L), 2023.

- Hualian Sheng, Sijia Cai, Na Zhao, Bing Deng, Min-Jian Zhao, Gim Hee Lee, PDR: Progressive Depth Regularization for Monocular 3D Object Detection, IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2023.

- Na Dong, Yongqiang Zhang, Mingli Ding, Gim Hee Lee, Incremental-DETR: Incremental Few-Shot Object Detection via Self-Supervised Learning, In AAAI Conference on Artificial Intelligence, 2023.

2022

- Zhun Zhong, Yuyang Zhao, Gim Hee Lee, Nicu Sebe, Adversarial Style Augmentation for Domain Generalized Urban-Scene Segmentation, In Conference on Neural Information Processing Systems (NeurIPS), 2022.

- Weng Fei Low, Gim Hee Lee, Minimal Neural Atlas: Parameterizing Complex Surfaces with Minimal Charts and Distortion, In European Conference on Computer Vision (ECCV), 2022.

- Yuyang Zhao, Zhun Zhong, Na Zhao, Nicu Sebe, Gim Hee Lee, Style-Hallucinated Dual Consistency Learning for Domain Generalized Semantic Segmentation, In European Conference on Computer Vision (ECCV), 2022.

- Conghui Hu, Gim Hee Lee, Feature Representation Learning for Unsupervised Cross-domain Image Retrieval, In European Conference on Computer Vision (ECCV), 2022.

- Tohar Lukov, Na Zhao, Gim Hee Lee, Ser-Nam Lim, Teaching with Soft Label Smoothing for Mitigating Noisy Labels in Facial Expressions, In European Conference on Computer Vision (ECCV), 2022.

- Hualian Sheng, Sijia Cai, Na Zhao, Bing Deng, Jianqiang Huang, Xian-Sheng Hua, Minjian Zhao, Gim Hee Lee, Rethinking IoU-based Optimization for Single-stage 3D Object Detection, In European Conference on Computer Vision (ECCV), 2022.

- Yuyang Zhao, Zhun Zhong, Zhiming Luo, Gim Hee Lee, Nicu Sebe, Source-Free Open Compound Domain Adaptation in Semantic Segmentation, In IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2022.

- Zi Jian Yew, Gim Hee Lee, REGTR: End-to-end Point Cloud Correspondences with Transformers, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Yuyang Zhao, Zhun Zhong, Nicu Sebe, Gim Hee Lee, Novel Class Discovery in Semantic Segmentation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Na Zhao, Gim Hee Lee, Static-Dynamic Co-Teaching for Class Incremental 3D Object Detection, In AAAI Conference on Artificial Intelligence, 2022. (Oral paper)

- Meng Tian, Gim Hee Lee, Weakly Supervised Learning of Keypoints for 6D Object Pose Estimation, In arXiv, 2022.

2021

- Zi Jian Yew, Gim Hee Lee, Learning Iterative Robust Transformation Synchronization, In International Conference on 3D Vision (3DV), 2021.

- Chen Li, Gim Hee Lee, Coarse-to-fine Animal Pose and Shape Estimation, In Conference on Neural Information Processing Systems (NeurIPS), 2021.

- Na Dong, Yongqiang Zhang, Mingli Ding, Gim Hee Lee, Bridging Non Co-occurrence with Unlabeled In-the-wild Data for Incremental Object Detection, In Conference on Neural Information Processing Systems (NeurIPS), 2021.

- Jiaxin Li, Zijian Feng, Qi She, Henghui Ding, Changhu Wang, Gim Hee Lee, MINE: Towards Continuous Depth MPI with NeRF for Novel View Synthesis, In International Conference on Computer Vision (ICCV), 2021.

- Xiaogang Wang, Marcelo Ang, Gim Hee Lee, Voxel-based Network for Shape Completion by Leveraging Edge Generation, In International Conference on Computer Vision (ICCV), 2021. (Oral paper)

- Min Zhao, Xin Guo, Le Song, Baoxing Qin, Xuesong Shi, Gim Hee Lee, A General Framework for Lifelong Localization and Mapping in Changing Environment, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.

- Xiaogang Wang, Marcelo Ang, Gim Hee Lee, A Self-supervised Cascaded Refinement Network for Point Cloud Completion, In IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2020.

- Bo Li, Qili Wang, Gim Hee Lee, FILTRA: Rethinking Steerable CNN by Filter Transform, In International Conference on Machine Learning (ICML), 2021.

- Jiaxin Li, Gim Hee Lee, DeepI2P: Image-to-Point Cloud Registration via Deep Classification, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Jiahao Lin, Gim Hee Lee, Multi-view Multi-Person 3D Pose Estimation with Plane Sweep Stereo, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Chen Li, Gim Hee Lee, From Synthetic to Real: Unsupervised Domain Adaptation for Animal Pose Estimation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021. (Oral paper)

- Na Zhao, Tat-Seng Chua, Gim Hee Lee, Few-shot 3D Point Cloud Semantic Segmentation, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Zi Jian Yew, Gim Hee Lee, City-scale Scene Change Detection using Point Clouds, In IEEE International Conference on Robotics and Automation (ICRA), 2021.

- Jiahao Lin, Gim Hee Lee, Learning Spatial Context with Graph Neural Network for Multi-Person Pose Grouping, In IEEE International Conference on Robotics and Automation (ICRA), 2021.

2020

- Chen Li, Gim Hee Lee, Weakly supervised generative network for multiple human pose hypotheses, In British Machine Vision Conference (BMVC), 2020.

- Bo Li, Evgeniy Maryushev, Gim Hee Lee, Relative Pose Estimation of Calibrated Cameras with Known SE(3) Invariants, In European Conference on Computer Vision (ECCV), 2020.

- Jiahao Lin, Gim Hee Lee, HDNet: Human Depth Estimation for Multi-person Camera-space Localization, In European Conference on Computer Vision (ECCV), 2020.

- He Chen, Pengfei Guo, Pengfei Li, Gim Hee Lee, Gregory Chirikjian, Multi-person 3D Pose Estimation in Crowded Scenes based on Multi-view Geometry, In European Conference on Computer Vision (ECCV), 2020. (Spotlight paper)

- Meng Tian, Marcelo Ang, Gim Hee Lee, Shape prior deformation for categorical 6D object pose and size estimation, In European Conference on Computer Vision (ECCV), 2020.

- Na Zhao, Tat-Seng Chua, Gim Hee Lee, SESS: Self-Ensembling Semi-Supervised 3D Object Detection, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020. (Oral paper)

- Xun Xu, Gim Hee Lee, Weakly Supervised Semantic Point Cloud Segmentation: Towards 10x Fewer Labels, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Zi Jian Yew, Gim Hee Lee, RPM-Net: Robust Point Matching using Learned Features, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Xiaogang Wang, Marcelo Ang, Gim Hee Lee, Cascaded Refinement Network for Point Cloud Completion, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Shen Li, Bryan Hooi, Gim Hee Lee, Identifying Through Flows for Recovering Latent Representations, In International Conference on Learning Representations (ICLR), 2020.

- Xiaogang Wang, Marcelo Ang, Gim Hee Lee, Point Cloud Completion by Learning Shape Priors, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

- Liang Pan, Chee-Meng Chew, Gim Hee Lee, PointAtrousGraph: Deep Hierarchical Encoder-Decoder with Atrous Convolution for Point Clouds, In IEEE International Conference on Robotics and Automation (ICRA), 2020.

- Meng Tian, Liang Pan, Marcelo H Ang Jr, Gim Hee Lee, Robust 6D Object Pose Estimation by Learning RGB-D Features, In IEEE International Conference on Robotics and Automation (ICRA), 2020.

- Sixing Hu, Gim Hee Lee, Image-Based Geo-Localization Using Satellite Imagery, In International Journal of Computer Vision (IJCV), 2020.

- Na Zhao, Tat-Seng Chua, Gim Hee Lee, PS^2-Net: A Locally and Globally Aware Network for Point-Based Semantic Segmentation, In International Conference on Pattern Recognition (ICPR), 2020.

2019

- Yew Siang Tang, Gim Hee Lee, Transferable Semi-supervised 3D Object Detection from RGB-D Data, In IEEE International Conference on Computer Vision (ICCV), 2019.

- Jiaxin Li, Gim Hee Lee, USIP: Unsupervised Stable Interest Point Detection from 3D Point Clouds, In IEEE International Conference on Computer Vision (ICCV), 2019.

- Jiahao Lin, Gim Hee Lee, Trajectory Space Factorization for Deep Video-Based 3D Human Pose Estimation, In British Machine Vision Conference (BMVC), 2019.

- Bingbing Zhuang, Quoc-Huy Tran, Gim Hee Lee, Loong-Fah Cheong, Manmohan Chandraker, Degeneracy in Self-Calibration Revisited and a Deep Learning Solution for Uncalibrated SLAM, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019.

- Chen Li, Gim Hee Lee, Generating Multiple Hypotheses for 3D Human Pose Estimation with Mixture Density Network, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- Ziquan Lan, Zi Jian Yew, Gim Hee Lee, Robust Point Cloud Based Reconstruction of Large-Scale Outdoor Scenes, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- Mengdan Feng, Sixing Hu, Marcelo H. Ang, Gim Hee Lee, 2D3D-MatchNet: Learning to Match Keypoints Across 2D Image and 3D Point Cloud, In IEEE International Conference on Robotics and Automation (ICRA), 2019.

- Jiaxin Li, Yingcai Bi, Gim Hee Lee, Discrete Rotation Equivariance for Point Cloud Recognition, In IEEE International Conference on Robotics and Automation (ICRA), 2019.

- Lionel Heng, Benjamin Choi, Zhaopeng Cui, Marcel Geppert, Sixing Hu, Benson Kuan, Peidong Liu, Rang Nguyen, Ye Chuan Yeo, Andreas Geiger, Gim Hee Lee, Marc Pollefeys, Torsten Sattler, Project AutoVision: Localization and 3D Scene Perception for an Autonomous Vehicle with a Multi-Camera System, In IEEE International Conference on Robotics and Automation (ICRA), 2019.

- Mengdan Feng, Marcelo Ang, Gim Hee Lee, Learning Low-Rank Images for Robust All-Day Feature Matching, In IEEE Intelligent Vehicle Symposium (IV), 2019.

2018

- Zi Jian Yew, Gim Hee Lee, 3DFeat-Net: Weakly Supervised Local 3D Features for Point Cloud Registration, In European Conference on Computer Vision (ECCV), 2018.

- Chen Li, Zhen Zhang, Wee Sun Lee, Gim Hee Lee, Convolutional Sequence to Sequence Model for Human Dynamics, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Sixing Hu, Mengdan Feng, Rang M. H. Nguyen, Gim Hee Lee, CVM-Net: Cross-View Matching Network for Image-Based Ground-to-Aerial Geo-Localization, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. (Spotlight paper)

- Mikaela Angelina Uy, Gim Hee Lee, PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Bingbing Zhuang, Loong-Fah Cheong, Gim Hee Lee, Baseline Desensitizing In Translation Averaging, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Ziquan Lan, David Hsu, Gim Hee Lee, Solving the Perspective-2-Point Problem for Flying-Camera Photo Composition, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Jiaxin Li, Ben M Chen, Gim Hee Lee, SO-Net: Self-Organizing Network for Point Cloud Analysis, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Mengdan Feng, Sixing Hu, Gim Hee Lee, Marcelo H. Ang, Towards Precise Vehicle-Free Point Cloud Mapping: An On-vehicle System with Deep Vehicle Detection and Tracking, IEEE International Conference on Systems, Man, and Cybernetics (SMC), 2018.

2017

- Christian Haene, Lionel Heng, Gim Hee Lee, Friedrich Fraundorfer, Paul Furgale, Torsten Sattler, Marc Pollefeys, 3D visual perception for self-driving cars using a multi-camera system: Calibration, mapping, localization, and obstacle detection, In Image and Vision Computing (IVC), 2017.

- Gim Hee Lee, Line Association and Vanishing Point Estimation with Binary Quadratic Programming, In International Conference on 3D Vision (3DV), 2017.

- Bingbing Zhuang, Loong-Fah Cheong, Gim Hee Lee, Rolling-Shutter Aware Differential SfM and Image Rectification, In IEEE International Conference on Computer Vision (ICCV), 2017.

- Jiaxin Li, Huangying Zhan, Ben M Chen, Ian Reid, Gim Hee Lee, Deep learning for 2D scan matching and loop closure, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017.

2016

- Gim Hee Lee, A Minimal Solution for Non-Perspective Pose Estimation from Line Correspondences, In European Conference on Computer Vision (ECCV), 2016.

- Olivier Saurer, Marc Pollefeys, Gim Hee Lee, Sparse to Dense 3D Reconstruction from Rolling Shutter Images, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- Ulrich Schwesinger, Mathias Buerki, Julian Timpner, Stephan Rottmann, Lars Wolf, Lina Maria Paz, Hugo Grimmett, Ingmar Posner, Paul Newman, Christian Haene, Lionel Heng, Gim Hee Lee, Torsten Sattler, Marc Pollefeys, Marco Allodi, Francesco Valenti, Keiji Mimura, Bernd Goebelsmann, and Roland Siegwart, Automated Valet Parking and Charging for e-Mobility: Results of the V-Charge Project, In IEEE Intelligent Vehicles Symposium (IV), 2016.

- Syeda Mariam Ahmed, Yan Zhi Tan, Gim Hee Lee, Chee-Meng Chew, Chee Khiang Pang, Object detection and motion planning for automated welding of tubular joints, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2016.

2015

- Gim Hee Lee, Bo Li, Marc Pollefeys, Friedrich Fraundorfer, Minimal Solutions for the Multi-Camera Pose Estimation Problem, In International Journal of Robotics Research (IJRR), 2015.

- Olivier Saurer, Marc Pollefeys, and Gim Hee Lee, A Minimal Solution to the Rolling Shutter Pose Estimation Problem, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2015.

- Srikumar Ramalingam, Michel Antunes, Dan Snow, Gim Hee Lee, Sudeep Pillai, Line-Sweep: Cross-Ratio For Wide-Baseline Matching and 3D Reconstruction, In IEEE Computer Vision and Pattern Recognition (CVPR), 2015.

- Lionel Heng, Gim Hee Lee, and Marc Pollefeys, Self-Calibration and Visual SLAM with a Multi-Camera System on a Micro Aerial Vehicle, In Autonomous Robots (AURO), 2015.

2014

- Christian Haene, Lionel Heng, Gim Hee Lee, Alexi Sizov, and Marc Pollefeys, Real-Time Direct Dense Matching on Fisheye Images Using Plane-Sweeping Stereo, International Conference on 3D Vision (3DV), 2014.

- Lionel Heng, Gim Hee Lee, and Marc Pollefeys, Self-Calibration and Visual SLAM with a Multi-Camera System on a Micro-Aerial Vehicle, In Robotics: Science and Systems (RSS), 2014.

- Lionel Heng, Dominik Honegger, Gim Hee Lee, Lorenz Meier, Petri Tanskanen, Friedrich Fraundorfer, and Marc Pollefeys, Autonomous Visual Mapping and Exploration With a Micro Aerial Vehicle, Journal of Field Robotics (JFR), 2014.

- Gim Hee Lee, Marc Pollefeys, and Friedrich Fraundorfer, Relative Pose Estimation for a Multi-Camera System with Known Vertical Direction, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2014.

- Gim Hee Lee, and Marc Pollefeys, Unsupervised Learning of Threshold for Geometric Verification in Visual-Based Loop-Closure, In IEEE International Conference on Robotics and Automation (ICRA), 2014.

- Lionel Heng, Mathias Buerki, Gim Hee Lee, Paul Furgale, Roland Siegwart, and Marc Pollefeys, Infrastructure-Based Calibration of a Multi-Camera Rig, In IEEE International Conference on Robotics and Automation (ICRA), 2014.

- Davide Scaramuzza, Michael C Achtelik, Lefteris Doitsidis, Friedrich Fraundorfer, Elias Kosmatopoulos, Agostino Martinelli, Markus W Achtelik, Margarita Chli, Savvas Chatzichristofis, Laurent Kneip, Daniel Gurdan, Lionel Heng, Gim Hee Lee, Simon Lynen, Marc Pollefeys, Alessandro Renzaglia, Roland Siegwart, Jan Carsten Stumpf, Petri Tanskanen, Chiara Troiani, Stephan Weiss, Lorenz Meier, Vision-Controlled Micro Flying Robots: from System Design to Autonomous Navigation and Mapping in GPS-denied Environments, IEEE Robotics & Automation Magazine, 2014.

2013

- Gim Hee Lee, Bo Li, Marc Pollefeys, and Friedrich Fraundorfer, Minimal Solutions for Pose Estimation of a Multi-Camera System, In International Symposium on Robotics Research (ISRR), 2013.

- Bo Li, Lionel Heng, Gim Hee Lee, and Marc Pollefeys, A 4-point Algorithm for Relative Pose Estimation of a Calibrated Camera with a Known Relative Rotation Angle, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013.

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, Structureless Pose-Graph Loop-Closure with a Multi-Camera System on a Self-Driving Car, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013.

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, Robust Pose-Graph Loop-Closures with Expectation-Maximization, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013.

- Paul Furgale, Ulrich Schwesinger, Martin Rufli, Wojciech Derendarz, Hugo Grimmett, Peter Muehlfellner, Stefan Wonneberger, Julian Timpner, Stephan Rottmann, Bo Li, Bastian Schmidt, Thien Nghia Nguyen, Elena Cardarelli, Stefano Cattani, Stefan Bruening, Sven Horstmann, Martin Stellmacher, Holger Mielenz, Kevin Koeser, Markus Beermann, Christian Haene, Lionel Heng, Gim Hee Lee, Friedrich Fraundorfer, René Iser, Rudolph Triebel, Ingmar Posner, Paul Newman, Lars Wolf, Marc Pollefeys, Stefan Brosig, Jan Effertz, Cédric Pradalier, and Roland Siegwart, Toward Automated Driving in Cities using Close-to-Market Sensors, an Overview of the V-Charge Project, In IEEE Intelligent Vehicle Symposium (IV), 2013.

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, Motion Estimation for a Self-Driving Car with a Generalized Camera, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2013.

2012

- Friedrich Fraundorfer, Lionel Heng, Dominik Honegger, Gim Hee Lee, Lorenz Meier, Petri Tanskanen, and Marc Pollefeys, (Authors in alphabetical order, except Marc Pollefeys who was the principal investigator) Vision-Based Autonomous Mapping and Exploration Using a Quadrotor MAV, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012. (Finalist for the IROS 2012 Best Paper Award)

- Markus Achtelik, Michael Achtelik, Yorick Brunet, Margarita Chli, Savvas A. Chatzichristofis, Jean-Dominique Decotignie, Klaus-Michael Doth, Friedrich Fraundorfer, Laurent Kneip, Daniel Gurdan, Lionel Heng, Elias B. Kosmatopoulos, Lefteris Doitsidis, Gim Hee Lee, Simon Lynen, Agostino Martinelli, Lorenz Meier, Marc Pollefeys, Damien Piguet, Alessandro Renzaglia, Davide Scaramuzza, Roland Siegwart, Jan Stumpf, Petri Tanskanen, Chiara Troiani, and Stephan Weiss, (Authors in alphabetical order) sFly: Swarm of Micro Flying Robots, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012. (Finalist for the IROS 2012 Best Video Award)

- Lorenz Meier, Petri Tanskanen, Lionel Heng, Gim Hee Lee, Friedrich Fraundorfer, Marc Pollefeys, PIXHAWK: A micro aerial vehicle design for autonomous flight using onboard computer vision, Autonomous Robots, 2012.

2011

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, RS-SLAM: RANSAC sampling for visual FastSLAM, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2011.

- Lionel Heng, Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, Real-time photo-realistic 3D mapping for micro aerial vehicles, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2011.

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, MAV visual SLAM with plane constraint, In IEEE International Conference on Robotics and Automation (ICRA), 2011.

2010

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, A benchmarking tool for MAV visual pose estimation, In IEEE International Conference on Control, Automation, Robotics and Vision (ICARCV), 2010.

2009

- Gim Hee Lee, Marcelo H. Ang Jr., Mobile Robots Navigation, Mapping, and Localization Part I, In Encyclopedia of Artificial Intelligence, 2009.

- Gim Hee Lee, Marcelo H. Ang Jr., Mobile Robots Navigation, Mapping, and Localization Part II, In Encyclopedia of Artificial Intelligence, 2009.

2008

- Gim Hee Lee, Marcelo H. Ang Jr., An Integrated Algorithm for Autonomous Navigation of a Mobile Robot in an Unknown Environment, In Journal of Advanced Computational Intelligence and Intelligent Informatics (JACIII) 12(4), 2008.

Theses

- Gim Hee Lee, Visual Mapping and Pose Estimation for Self-Driving Cars, PhD Thesis, ETH Zurich, 2014.

- Gim Hee Lee, Sensor-Based Localization of a Mobile Robot, Masters Thesis, National University of Singapore, 2007.

- Gim Hee Lee, Navigation of a Mobile Robot in an Unknown Environment, Bachelor Thesis, National University of Singapore, 2005.

Prospective Students

Please read this before contacting me!

I am always looking for motivated PhD/MComp/BComp students to work with me in the area of 3D Computer Vision at the Department of Computer Science, NUS.

PhD students must be accepted by one of the following PhD programs to work with me: (1) NUS School of Computing Graduate Research Scholarship; (2) NUS Integrative Sciences and Engineering Program NUS-ISEP Scholarship; (3) AI Singapore AISG Scholarship; (4) Agency for Science, Technology and Research ASTAR SINGA Scholarship. Note that acceptance decisions are made by the respective committees, and contacting me will not influence them. However, you may wish to drop me an email to inform me of your application and your interest in working with me.

No funding is available for MComp and BComp students. MComp/undergraduate students from the Department of Computer Science, NUS, who want to do their MComp thesis or FYP/UROP with me should send me an email. Please contact me about internships or visits only if you (1) are self-funded, (2) are able to stay for >=12 months, and (3) have sufficient research experience in the area of 3D Computer Vision and Machine Learning.

Job Openings

I do not have any postdoc position that is immediately available. However, if you have a strong background in 3D computer vision and robotics, and meet the following requirements, do drop me an email with your CV and research plan:

- PhD degree in Computer Science or other closely related disciplines

- Outstanding candidates with a Master's degree can also be considered for a research associate position

- Excellent knowledge of 3D computer vision and hands-on experience with real robots, e.g. manipulator arms, mobile robots, etc.

- Strong publication record in the top computer vision, machine learning and robotics conferences (CVPR/ICCV/ECCV/ICML/NeurIPS/ICLR/ICRA/IROS/RSS)

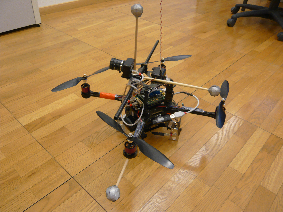

MAV Dataset

Disclaimer: The original dataset was deleted from my ETH webpage when I left. I have tried my best to recover the dataset here, and I apologize if it is not perfect.

Nicolo Valigi has kindly made the dataset compatible with ROS.

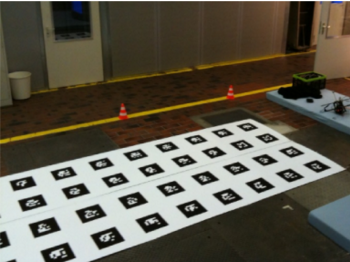

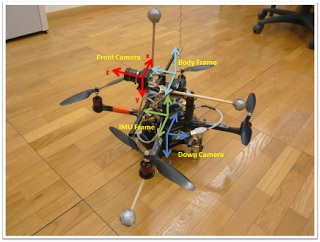

The large collections of datasets for researchers working on the Simultaneous Localization and Mapping problem are mostly collected from sensors such as wheel encoders and laser range finders mounted on ground robots. The recent growing interest in visual pose estimation with cameras mounted on micro-aerial vehicles, however, has made these datasets less useful. Here, we provide datasets collected from a sensor suite mounted on the "pelican" quadrotor platform in an indoor environment. Our sensor suite includes a forward looking camera, a downward looking camera, an inertial measurement unit, and a Vicon system for groundtruth. We propose the use of our datasets as benchmarking tools for future work on visual pose estimation for micro-aerial vehicles.

Five synchronized datasets (1LoopDown, 2LoopsDown, 3LoopsDown, hoveringDown and randomFront) are provided. They were collected with the quadrotor flying 1, 2 and 3 loop sequences, hovering within a space of approximately 1m x 1m x 1m, and flying randomly within the sight of the Vicon system. Each dataset consists of images from the camera; accelerations, attitude rates, absolute angles and absolute headings from the IMU; and groundtruth from the Vicon system. Images in the first 4 datasets are from the downward looking camera, and images in the last dataset are from the forward looking camera. Synchronized datasets for the calibration of the forward and downward cameras are also provided.
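As a rough illustration of how the contents described above might be organized in code, the sketch below maps each dataset name to its camera and defines a record for one synchronized sample. The on-disk file layout is not described on this page, so the class name `SynchronizedSample`, its field names, and the tuple lengths are hypothetical; only the dataset names and the camera assignment come from the text.

```python
from dataclasses import dataclass
from typing import Tuple

# Camera used by each dataset, as stated in the description:
# the first four use the downward looking camera, the last the forward one.
CAMERA_FOR_DATASET = {
    "1LoopDown": "down",
    "2LoopsDown": "down",
    "3LoopsDown": "down",
    "hoveringDown": "down",
    "randomFront": "front",
}


@dataclass(frozen=True)
class SynchronizedSample:
    """One synchronized record (hypothetical layout, for illustration only)."""

    image_path: str                      # image from the dataset's camera
    accelerations: Tuple[float, ...]     # IMU accelerations
    attitude_rates: Tuple[float, ...]    # IMU attitude rates
    absolute_angles: Tuple[float, ...]   # IMU absolute angles
    absolute_heading: float              # IMU absolute heading
    vicon_groundtruth: Tuple[float, ...] # Vicon groundtruth (format assumed)


def camera_for(dataset_name: str) -> str:
    """Return 'down' or 'front' for a known dataset name."""
    try:
        return CAMERA_FOR_DATASET[dataset_name]
    except KeyError:
        raise ValueError(f"unknown dataset: {dataset_name!r}") from None
```

For example, `camera_for("randomFront")` returns `"front"`, which tells a loader to use the forward camera intrinsics and extrinsics for that sequence.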

More details of the acquisition of this dataset are given in our paper:

- Gim Hee Lee, Friedrich Fraundorfer, and Marc Pollefeys, A benchmarking tool for MAV visual pose estimation, In IEEE International Conference on Control, Automation, Robotics and Vision (ICARCV), 2010.

Get Our Dataset Here!

- Quadrotor flew 1 closed loop trajectory (downward looking camera) [1LoopDown]

- Quadrotor flew 2 closed loops trajectory (downward looking camera) [2LoopsDown]

- Quadrotor flew 3 closed loops trajectory (downward looking camera) [3LoopsDown]

- Quadrotor hovering within a space of approximately 1m x 1m x 1m (downward looking camera) [hoveringDown]

- Quadrotor flying randomly within the line-of-sight of the Vicon system (front looking camera) [randomFront]

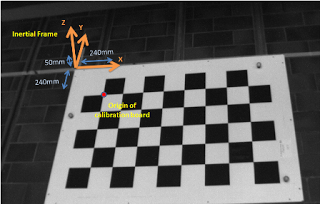

- Calibration images (front looking camera) [calibrationFront]

- Calibration images (downward looking camera) [calibrationDown]

Additional Information

- Transformation matrix that relates downward looking camera to quadrotor [T_Quad_Cam (Down)]

- Transformation matrix that relates front looking camera to quadrotor [T_Quad_Cam (Front)]

- Downward looking camera intrinsic values [intrinsic (Down)]

- Front looking camera intrinsic values [intrinsic (Front)]

- ARToolKitPlus Markers configuration file [ARTK Config]

- MATLAB file for benchmarking pose estimation algorithms against Vicon groundtruth (Cosine Similarity) [benchmark.m]
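The contents of benchmark.m are not reproduced on this page, so the following is only a sketch of one plausible way to score an estimated trajectory against Vicon groundtruth with cosine similarity. It assumes that both trajectories are given as equally long lists of time-aligned 3D positions in the same frame orientation; the function names are hypothetical. Centring each trajectory and using cosine similarity makes the score insensitive to a constant offset and to overall scale, which is convenient when the estimate comes from a monocular camera.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equally long vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def _centred_flat(points: Sequence[Sequence[float]]) -> List[float]:
    """Subtract the mean position and flatten the trajectory to one vector."""
    n = len(points)
    dim = len(points[0])
    mean = [sum(p[i] for p in points) / n for i in range(dim)]
    return [p[i] - mean[i] for p in points for i in range(dim)]


def trajectory_cosine_similarity(
    estimated: Sequence[Sequence[float]],
    groundtruth: Sequence[Sequence[float]],
) -> float:
    """Score in [-1, 1]; 1 means identical shape up to offset and scale."""
    if len(estimated) != len(groundtruth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    return cosine_similarity(_centred_flat(estimated), _centred_flat(groundtruth))
```

A trajectory that equals the groundtruth up to a positive scale factor scores 1, while unrelated motion scores close to 0. Note that this sketch does not estimate a rotation between the two trajectories; if the estimate lives in a different frame, it would need to be aligned first.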

Note that the quadrotor frame (body frame) refers to the coordinate frame in which we measure the Vicon readings. There is a separate IMU frame with respect to which the acceleration readings from the accelerometer are measured. The IMU frame is offset by -4cm along the z-axis of the downward looking camera and is taken to have the same orientation as the body frame. There is also an inertial frame, which is the fixed world frame. See the above figures for illustrations of these coordinate frames.
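To make the relationship between these frames concrete, the sketch below expresses the IMU origin in the body frame by applying a 4x4 homogeneous transform to the -4cm offset. It rests on two assumptions that should be checked against the provided files: that T_Quad_Cam (Down) maps points from the downward camera frame into the quadrotor (body) frame, and that the IMU origin sits at (0, 0, -0.04) m in the downward camera frame. The matrix values below are placeholders, not the real calibration, and `transform_point` is a hypothetical helper.

```python
from typing import Sequence, Tuple


def transform_point(
    T: Sequence[Sequence[float]], p: Sequence[float]
) -> Tuple[float, ...]:
    """Apply a 4x4 homogeneous transform T to a 3D point p."""
    x, y, z = p
    return tuple(
        T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3)
    )


# PLACEHOLDER values only: load the real matrix from T_Quad_Cam (Down).
# Assumed convention: point_in_body = T_QUAD_CAM_DOWN * point_in_camera.
T_QUAD_CAM_DOWN = [
    [1.0, 0.0, 0.0, 0.10],
    [0.0, -1.0, 0.0, 0.00],
    [0.0, 0.0, -1.0, -0.05],
    [0.0, 0.0, 0.0, 1.00],
]

# IMU origin, assumed to be -4cm along the z-axis of the downward camera.
IMU_ORIGIN_IN_CAM_DOWN = (0.0, 0.0, -0.04)

# Since the IMU frame shares the body frame's orientation, only its
# origin needs to be transformed to relate the two frames.
imu_origin_in_body = transform_point(T_QUAD_CAM_DOWN, IMU_ORIGIN_IN_CAM_DOWN)
```

Because the IMU frame is taken to have the same orientation as the body frame, vectors such as accelerations would keep their components between the two frames under this reading; only positions differ by the offset computed above.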

Disclaimer:

This is my personal homepage. I am personally responsible for all opinions and content. NUS is not responsible for anything expressed herein.