In 1961, something momentous happened at a squat, nondescript factory in the tiny town of Ewing, New Jersey. The Unimate, a robotic arm, was fired up for the first time, grabbing pieces of hot metal off an assembly line and welding them onto car bodies while onlookers cheered — the world’s first industrial robot had officially been put to work.

The new machine promised to make a dangerous task, one where workers risked losing limbs or inhaling toxic fumes, easier. While a milestone development, the Unimate was essentially a zombie, repeating the same task day in and day out.

Some sixty years on, machines have “grown” much smarter. We now have bots that can chat with us, virtual assistants that take orders and answer our queries, robot pets to ward off loneliness, and self-driving cars that can detect and avoid pedestrians. The list goes on. In short, today’s artificial intelligence (AI) is much more “human-aware”, a sharp contrast to the Unimate and other earlier machines.

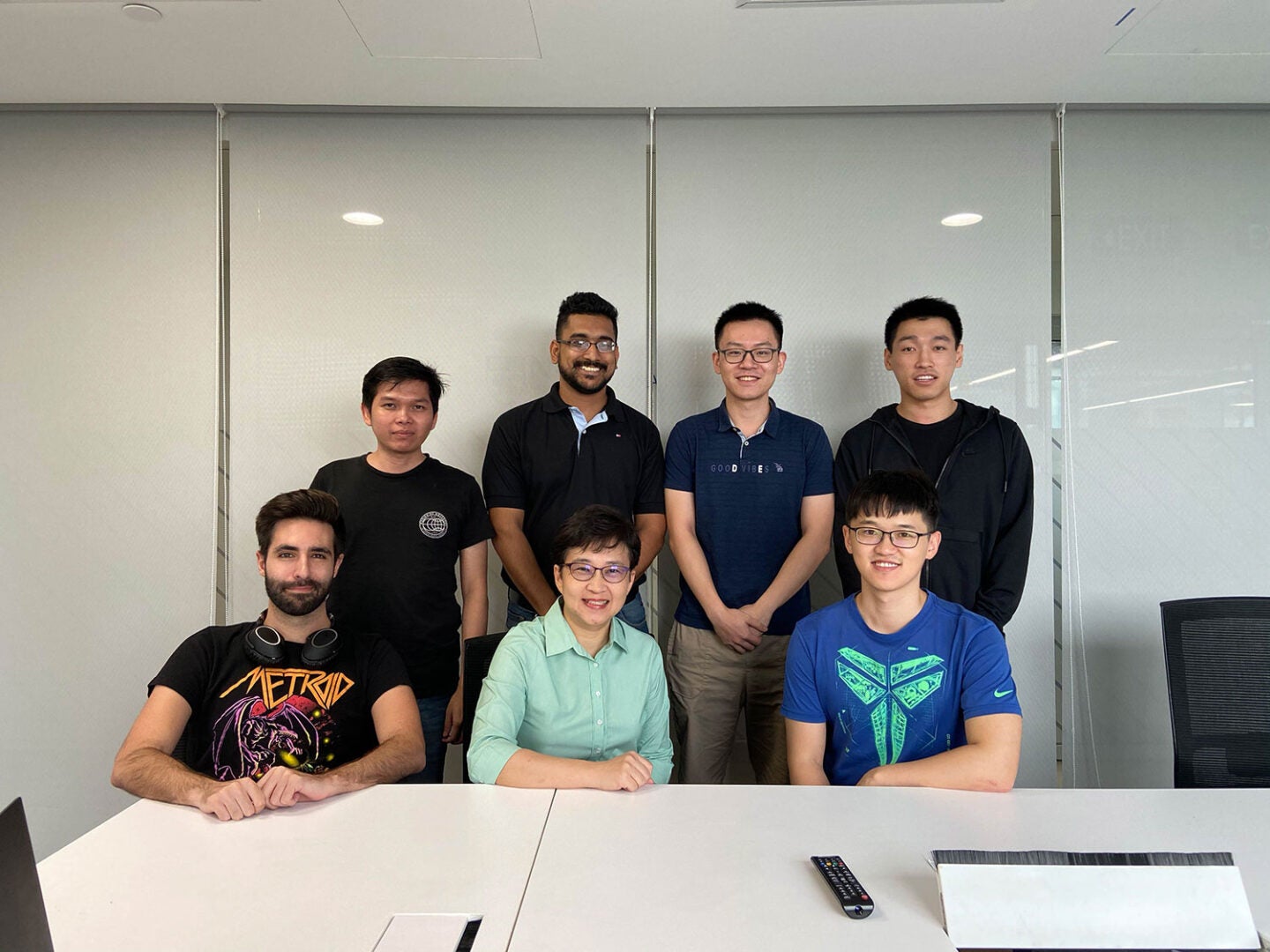

This evolution is a welcome one, given that most robots today no longer reside in factories, but rather in the military, homes, shops, and hospitals. With AI set to invade our lives to an ever-increasing degree, it is vital that these systems be designed to “carefully incorporate human values and preferences” so that they can work synergistically with people, says Tze Yun Leong, a professor at NUS Computing who specialises in AI and decision making.

“AI does not work in environments where it just goes about on its own,” she says. “It has to work effectively in an environment where there are humans — to interact with them, to help them, to communicate with them.”

“Human-centric AI is the hottest trend in AI research and development right now,” says Leong. However, it is a challenging field because roboticists and researchers have to figure out how to design robots, algorithms, and software that show an understanding of human needs, preferences, behaviour, emotion, and language — notoriously difficult notions to parse.

But the goal is a worthy one to strive towards, because the more human-aware AI is, the greater the degree to which it can effectively interact and collaborate with people. “Artificial intelligence is about creating and designing technologies that can complement, augment, or extend human capabilities in decision-making in complex situations,” says Leong.

It’s like magic

AI holds vast potential to give humans a leg up in many areas. In the healthcare and biomedical sector, it can help monitor patients, provide care for the elderly and disabled, assist in diagnosis, and create treatment plans tailored to the individual, among other possibilities. AI can also speed up disease and drug discovery. For instance, it helped researchers uncover the genetic sequence of SARS-CoV-2, the virus behind COVID-19, as early as two months after the outbreak in Wuhan.

Leong has witnessed some of these applications first-hand. As an undergraduate at the Massachusetts Institute of Technology, she worked on her first research project, ‘Murmur Clinic: An Auscultation Expert System’. She was tasked with creating a computer programme that could recognise irregular heart rhythms and diagnose different cardiac conditions. The project turned out to be a success. “While simplified, the system predictions worked very well as evaluated by some real doctors,” she recalls.

It was this experience, alongside meeting some incredibly inspiring teachers, including the lecturer in her very first computing class — who came dressed as a magician complete with a pointy hat and purple cape, waving a wand saying “computing is like magic, you can create whatever you like with it” — that motivated Leong to delve into AI as a career, with a focus on the biomedical and healthcare sector.

Towards smart tutors and smart strategists

Today, Leong’s research centres on creating AI that can assist humans when they have to make decisions in complex situations where data may or may not be available. “We’re trying to build AI systems that can function at a higher level of planning, decision making, and action, incorporating the fundamental functionalities of perception that can process images or languages,” she explains.

“For example, how do you assess the situation, how do you examine what options you have, and what will it take to get the best possible outcome, with or without other (human or AI) guidance,” she says.

One of Leong’s projects involves developing intelligent lifelong tutoring systems, where teachers teach and students learn more effectively with the help of AI. Current technology in this area tends to focus purely on students’ performance, analysing how they answer certain types of questions and pinpointing weak areas to work on in specific topics.

“But learning and mastering skills and knowledge is much more complex than this,” says Leong. You also have to take into account the context and the students themselves: What knowledge do they already possess? What is their emotional state? What is their capacity for absorbing information? What is their preferred mode of communication?

Drawing on insights from neuroscience and cognitive behavioural theory, she and her students are developing an AI assistant tutor that can “capture this information and come up with a plan together with the teacher to help a student to learn more effectively, in a personalised manner, on different topics,” she says.

Leong’s team is also working on a project that explores how AI can be used to aid decision-making in fast-paced, dynamic situations, using experimental environments in video games like StarCraft II. Such online strategy games require players to plan and make decisions skilfully and adaptively, at both the strategic and tactical level, in order to attain and use the limited resources available (for example, minerals or crops) and win.

The research is motivated by, and has many applications in, real-life use cases in cybersecurity, disease discovery, and intervention design, for example managing the rapid and unpredictable nature, mutation, and spread patterns of the COVID-19 virus.

“This is actually something very difficult to achieve, especially in real-time situations where things change very quickly,” says Leong, who is an experienced gamer herself.

These instances would benefit greatly from humans blending their knowledge with an AI system. “In many cases, we cannot just learn from data or observations — there has to be some human expertise to guide the AI to reach the optimal objective,” she says.

“So what we’re looking at is how to incorporate observational data, knowledge or insights drawn from computational models with human values, judgement, and expertise to come up with a more effective system to help solve the task,” says Leong, whose team has been publishing research in this area, spanning hierarchical and transfer reinforcement learning as well as causal reasoning and learning.

Fostering an AI ecosystem for a better world

In addition to her role at NUS Computing as an AI educator and researcher, Leong also actively participates in professional work on AI policy, governance, and regulation in Singapore and internationally. “Effective application and adoption of AI to ‘do good’ for the benefit of humankind require the underlying infrastructure, policies, and guidelines to be put in place,” she says.

Singapore and other countries, as well as companies developing new tech, have to navigate issues such as data privacy, transparency, programming bias, and other concerns. For instance, Singapore’s Infocomm Media Development Authority and Personal Data Protection Commission have implemented a Model AI Governance Framework, while the Health Sciences Authority developed the AI Medical Device regulatory guidelines. Both are considered model examples internationally and have been shared in forums such as the World Economic Forum, the World Health Organization, and the Global Partnership on Artificial Intelligence, says Leong proudly.

But the road ahead is still challenging. “There are many technical solutions and practical approaches being developed to address these ethical considerations for AI,” says Leong. “But all of them involve trade-offs…there is no ‘perfect’ solution.”

“The challenge is how do we translate all these principles and guidelines and incorporate them into the technical design of a system that can be put into actual, day-to-day use,” says Leong. “The most critical step is to educate all the stakeholders in the AI ecosystem (policy makers, owners, designers, developers, managers, users) about the relevant principles and responsible uses of AI. This is where the government, academia, industry, and the civil society should work closely together to harness the power of AI for social and economic growth.”

Leong sits on various Singapore and international expert panels and steering committees that are working on guidelines and frameworks that will hopefully steer countries and companies towards developing responsible and ethical AI systems.

Well aware that AI is a double-edged sword, Leong has this piece of advice for her students: “Learn AI well and use it well — go design new innovations and reimagine the world with powerful and sometimes magical AI tools.”

“But remember that AI is just a technology,” she cautions. “It can only do as much good and as much harm as you want it to — always assess the risks and understand the strengths and limitations of the AI applications that you are creating or using.”