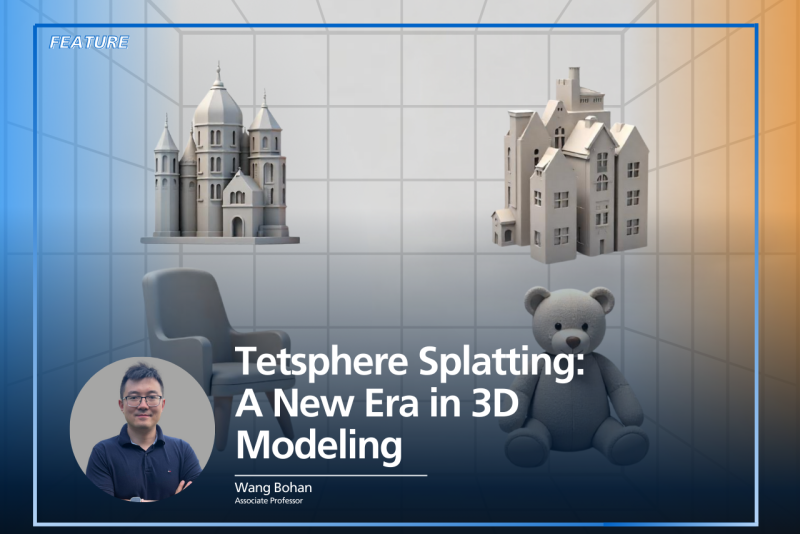

Imagine stepping into a virtual museum, wandering through richly detailed halls filled with lifelike artifacts. Or attending a remote class where anatomical models float before your eyes, ready to be explored from every angle. Or joining a meeting where your remote teammates appear as lifelike 3D avatars seated across from you, sharing the same virtual space. These visions of the future—once confined to science fiction—are quickly becoming real, thanks to massive advancements in 3D imaging and extended reality (XR). But there’s one major problem standing in the way: the data.

The dazzling realism of modern 3D experiences comes at a cost. A single frame of a dynamic 3D scene generated with a technique known as dynamic 3D Gaussian splatting (3DGS) can easily weigh in at hundreds of megabytes. Multiply that by 30 frames per second for smooth motion, and you’re looking at streaming requirements of up to 180 gigabits per second. That’s well beyond the capabilities of most home internet connections. And if the data doesn’t arrive fast enough or in the right sequence, the result isn’t just a laggy experience—it can cause motion sickness, or as researchers call it, “cyber sickness”.
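The arithmetic behind the 180 Gbps figure is easy to check. This short Python sketch works backwards from the article's numbers. At 30 frames per second, 180 Gbps corresponds to about 6 gigabits, or 750 MB, per frame in decimal units. The 750 MB frame size, the hypothetical 1 Gbps home link, and the `required_gbps` helper are illustrative assumptions. They are not values or code from the paper.

```python
# Back-of-the-envelope bitrate for streaming dynamic 3DGS frames uncompressed.
# Assumption: 750 MB per frame, the size implied by 180 Gbps at 30 fps.
FRAME_SIZE_MB = 750     # megabytes per frame (decimal: 1 MB = 10**6 bytes)
FPS = 30                # frames per second for smooth motion
HOME_LINK_GBPS = 1.0    # hypothetical fast home internet connection


def required_gbps(frame_size_mb: float, fps: int) -> float:
    """Bitrate (gigabits per second) needed to deliver every frame in full."""
    bits_per_frame = frame_size_mb * 1e6 * 8   # megabytes -> bits
    return bits_per_frame * fps / 1e9          # bits per second -> gigabits per second


needed = required_gbps(FRAME_SIZE_MB, FPS)
print(f"{needed:.0f} Gbps")                                       # 180 Gbps
print(f"{needed / HOME_LINK_GBPS:.0f}x a {HOME_LINK_GBPS:g} Gbps home link")
```

Even a fast gigabit connection would fall short of this rate by more than two orders of magnitude.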

So how do we make these next-generation immersive experiences accessible without needing the bandwidth of a data center? That’s where LTS (short for Layer, Tile, and Segment Adaptive Streaming) comes in. LTS was developed by NUS Computing researchers (Associate Professor Ooi Wei Tsang and PhD student Shi Yuang) in collaboration with researchers from National Tsing Hua University and National Yang Ming Chiao Tung University in Taiwan, and Rutgers University in the USA. Their paper, “LTS: A DASH Streaming System for Dynamic Multi-Layer 3D Gaussian Splatting Scenes”, recently won the Best Paper Award at the 16th ACM Multimedia Systems Conference (MMSys ’25), held from March 31 to April 4, 2025, in Stellenbosch, South Africa.

The innovation of LTS lies not just in compression but in a complete rethink of how 3D content is streamed, focusing on adaptability, efficiency, and resilience. LTS is like the invisible hand that ensures even the most complex 3D worlds can flow smoothly to your XR headset—even over an average home network.

The Challenge of Streaming in 3D

To understand why LTS is such a breakthrough, it helps to appreciate the nature of the challenge. 3D Gaussian splatting is a relatively new way of representing and rendering 3D scenes. It uses a large number of tiny, semi-transparent “splats” (3D Gaussians), each carrying its own position, shape, color, and transparency information, which blend together to reconstruct complex, photorealistic scenes. Unlike traditional 3D models made of rigid polygons, splatting is more flexible and visually rich. It also supports six degrees of freedom (6DoF), meaning users can move around freely within the scene rather than simply rotating their view from a fixed point.
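A minimal Python sketch makes the “splat” idea concrete. It shows what one splat might store and what that implies for frame size. The assumed attributes are a 3D center, a per-axis scale, a rotation quaternion, an opacity, and 48 spherical-harmonic color coefficients, all stored as 32-bit floats. This mirrors the layout commonly used by 3DGS implementations, but it is an assumption here, not the exact format LTS uses. The `Splat` class and `frame_size_mb` helper are hypothetical.

```python
from dataclasses import dataclass

SH_COEFFS = 16 * 3                               # degree-3 spherical harmonics x RGB channels
FLOATS_PER_SPLAT = 3 + 3 + 4 + 1 + SH_COEFFS     # position, scale, rotation, opacity, color
BYTES_PER_FLOAT = 4                              # float32


@dataclass
class Splat:
    """One 3D Gaussian 'splat' (hypothetical layout, modeled on common 3DGS files)."""
    position: tuple[float, float, float]          # center of the Gaussian in the scene
    scale: tuple[float, float, float]             # extent along each of its local axes
    rotation: tuple[float, float, float, float]   # orientation as a unit quaternion
    opacity: float                                # 0 = fully transparent, 1 = opaque
    sh_coeffs: tuple[float, ...]                  # view-dependent color

    def __post_init__(self) -> None:
        # Reject splats whose color data does not match the assumed layout.
        if len(self.sh_coeffs) != SH_COEFFS:
            raise ValueError(f"expected {SH_COEFFS} color coefficients")


def frame_size_mb(num_splats: int) -> float:
    """Uncompressed size of one frame in megabytes (1 MB = 10**6 bytes)."""
    return num_splats * FLOATS_PER_SPLAT * BYTES_PER_FLOAT / 1e6


splat = Splat((0.0, 1.2, 3.4), (0.01, 0.02, 0.01), (1.0, 0.0, 0.0, 0.0), 0.8, (0.0,) * SH_COEFFS)
print(FLOATS_PER_SPLAT)              # 59
print(frame_size_mb(1_000_000))      # 236.0
```

Take a hypothetical scene of one million splats. One uncompressed frame comes to roughly 236 MB, consistent with the “hundreds of megabytes” figure above. A dynamic scene needs a frame like this for every moment of motion.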

Compared to earlier 3D methods, splatting offers incredible speed and realism, making it ideal for XR applications. However, this richness comes at a high data cost. The creation and representation of these scenes have received considerable research attention. Relatively little work, however, has addressed how to deliver them in real time to users wearing head-mounted displays (HMDs). LTS fills this critical gap by focusing on streaming rather than modeling, supplying the final piece needed to bring immersive 3D experiences into everyday life.

The Three Pillars of LTS

LTS stands on three key principles: layered streaming, tiled streaming, and segment streaming. Each one tackles a different challenge in streaming dynamic 3D content—balancing quality, relevance, and delivery speed.

The first principle, layered streaming, is implemented through a technique called D-Lapis-GS. The name might make you think of high-tech engineering, but there’s actually a touch of culinary whimsy baked in. It comes from Kue Lapis, a colorful Indonesian snack made from stacked layers of sweet, steamed rice flour pudding. Like the dessert, D-Lapis-GS builds its 3D scenes in layers. It starts with a base layer, a rough but complete low-resolution version of the scene. Enhancement layers are then stacked on top, each adding more detail and resolution. Think of it like a YouTube video whose resolution automatically adjusts to match your internet speed. On a slow connection or a less powerful device, you get the base layer. If your gear and bandwidth allow, the system adds more layers, up to the full high-definition experience.
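The layering logic fits in a few lines of Python. The sketch assumes each layer has a known size. It also assumes an enhancement layer is useful only once every layer beneath it has arrived. A client with a given data budget therefore takes layers in order, starting from the base, until the next one no longer fits. The function name and the layer sizes are hypothetical, and D-Lapis-GS’s actual layer construction is considerably more involved.

```python
def layers_to_fetch(layer_sizes_mb: list[float], budget_mb: float) -> int:
    """Return how many layers, starting from the base layer, fit within the budget.

    Layers are cumulative: layer k only adds detail on top of layers 0..k-1,
    so we stop at the first layer that does not fit instead of skipping ahead.
    """
    used = 0.0
    count = 0
    for size in layer_sizes_mb:
        if used + size > budget_mb:
            break
        used += size
        count += 1
    return count


# Hypothetical sizes: a base layer plus two enhancement layers.
sizes = [20.0, 40.0, 80.0]
print(layers_to_fetch(sizes, 10.0))    # 0: not even the base layer fits
print(layers_to_fetch(sizes, 70.0))    # 2: base + first enhancement layer
print(layers_to_fetch(sizes, 200.0))   # 3: full quality
```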

This adaptability is critical because not all HMDs are created equal. Some have limited processing power or lower-resolution screens. And not everyone has access to high-speed internet. The beauty of LTS is that it delivers just the right amount of data for each user’s setup, ensuring a smooth, personalized experience. Researchers also fine-tuned how these layers interact—preventing visual glitches as users move between levels of detail, maintaining a seamless visual flow.

The second principle, tiled streaming, deals with efficiency. Rather than sending the entire 3D scene at once, LTS slices it into a grid of smaller 3D chunks called tiles. The system then determines which tiles are visible based on the user’s field of view and only streams those. As the user turns their head or moves around, the system dynamically shifts which tiles it sends—always focusing resources on what the user actually sees. This “viewport-aware” approach not only cuts down on wasted bandwidth, but also improves responsiveness and visual quality where it matters most: in the center of your vision.
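One simple way to picture viewport-aware tile selection is a visibility test on each tile’s center. The Python sketch below treats the user’s view as a cone around the gaze direction. It keeps only tiles whose centers fall inside that cone, ordered from the center of the view outward. Real systems typically test tiles against the full view frustum. The cone test, the `visible_tiles` and `angle_to` names, and the example coordinates are therefore simplifying assumptions, not the method from the paper.

```python
import math

Vec3 = tuple[float, float, float]


def angle_to(center: Vec3, eye: Vec3, view_dir: Vec3) -> float:
    """Angle in degrees between the gaze direction and the direction to a tile center."""
    offset = [c - e for c, e in zip(center, eye)]
    dist = math.hypot(*offset)
    if dist == 0.0:
        return 0.0  # the viewer is standing at the tile center
    cos_a = sum(o * v for o, v in zip(offset, view_dir)) / (dist * math.hypot(*view_dir))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))  # clamp rounding error


def visible_tiles(centers: list[Vec3], eye: Vec3, view_dir: Vec3, half_fov_deg: float) -> list[int]:
    """Indices of tiles inside the viewing cone, nearest to the view center first."""
    angles = {i: angle_to(c, eye, view_dir) for i, c in enumerate(centers)}
    inside = [i for i, a in angles.items() if a <= half_fov_deg]
    return sorted(inside, key=lambda i: angles[i])


# Hypothetical tile centers; the viewer stands at the origin looking along +z.
tiles = [(0.0, 0.0, 5.0), (5.0, 0.0, 0.0), (0.0, 0.0, -5.0), (2.0, 0.0, 5.0)]
print(visible_tiles(tiles, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 45.0))   # [0, 3]
```

This version tests only tile centers, so a large tile that pokes into the edge of the view could be missed. Testing each tile’s bounding box against the frustum avoids that gap.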

Finally, segment streaming tackles reliability. Internet speeds are not always stable—especially over wireless networks. Segment streaming divides the 3D data over time into small, manageable chunks. The system can buffer these chunks and adjust their quality in real-time, depending on how your internet is behaving. If there’s a brief dip in speed, the system can fall back to lower-quality segments or use extra preloaded data to ride out the hiccup. This reduces annoying freezes and keeps the immersive experience flowing uninterrupted.
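A classic way to let buffered segments absorb a dip in speed is to choose each segment’s quality from two signals: the measured throughput and how much content is already buffered. The Python sketch below uses a deliberately simple rule of that kind. The thresholds, the safety margin, the bitrates, and the `choose_quality` name are hypothetical. None of them come from LTS.

```python
def choose_quality(
    buffer_s: float,
    throughput_mbps: float,
    bitrates_mbps: list[float],
    low_buffer_s: float = 1.0,
    safety: float = 0.8,
) -> int:
    """Pick a quality level (index into bitrates_mbps, sorted ascending) for the next segment.

    - If the buffer is almost empty, take the lowest level to avoid a freeze.
    - Otherwise take the highest level whose bitrate fits within a safety
      margin of the measured throughput.
    """
    if buffer_s < low_buffer_s:
        return 0
    usable = throughput_mbps * safety
    level = 0
    for i, rate in enumerate(bitrates_mbps):
        if rate <= usable:
            level = i
    return level


levels = [50.0, 200.0, 800.0]                # hypothetical bitrates per quality level
print(choose_quality(3.0, 1200.0, levels))   # 2: plenty of bandwidth and buffer
print(choose_quality(3.0, 300.0, levels))    # 1: bandwidth dipped, step down a level
print(choose_quality(0.5, 1200.0, levels))   # 0: buffer nearly drained
```

The safety margin keeps the chosen bitrate a little below the measured speed. A small overestimate of bandwidth then drains the buffer slowly rather than causing an immediate freeze.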

Engineering a Seamless Experience

The real genius of LTS lies in how these three elements come together. On the server side, a dynamic 3DGS scene is divided into transmission units, or TUs. Each TU corresponds to a particular combination of layer, tile, and segment. These TUs are indexed in manifest files, in the style of DASH (Dynamic Adaptive Streaming over HTTP), the streaming approach LTS builds on. The manifests are basically roadmaps that tell your device what data is available and how to get it.
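The transmission-unit idea maps naturally onto a small data structure. There is one entry per (segment, tile, layer) combination, and each entry has an address the client can request. The Python sketch below builds such an index as a dictionary. The URL pattern, the field names, and the simplification that every tile of a given layer has the same size are all hypothetical. A standard DASH manifest is an XML Media Presentation Description (MPD) with a much richer schema.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class TransmissionUnit:
    segment: int      # which slice of time
    tile: int         # which spatial tile of the scene
    layer: int        # 0 = base layer, 1+ = enhancement layers
    size_bytes: int   # how much data the client must download


def build_manifest(num_segments: int, num_tiles: int, layer_sizes: list[int]) -> dict[str, TransmissionUnit]:
    """Index every (segment, tile, layer) combination under a hypothetical URL."""
    manifest = {}
    for seg, tile, layer in product(range(num_segments), range(num_tiles), range(len(layer_sizes))):
        url = f"scene/seg{seg:04d}/tile{tile:03d}/layer{layer}.bin"
        manifest[url] = TransmissionUnit(seg, tile, layer, layer_sizes[layer])
    return manifest


manifest = build_manifest(num_segments=2, num_tiles=4, layer_sizes=[10_000, 30_000, 90_000])
print(len(manifest))                                 # 24 = 2 segments x 4 tiles x 3 layers
print(manifest["scene/seg0001/tile003/layer2.bin"])
# TransmissionUnit(segment=1, tile=3, layer=2, size_bytes=90000)
```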

On the user’s end, an adaptive bitrate (ABR) algorithm makes the magic happen. The algorithm continuously tracks where you are looking (your field of view), your internet bandwidth, and what data has already been downloaded. It then decides which TUs to request next and in what order. It prioritizes earlier time segments (so you’re not waiting for the next moment), lower layers (which higher layers depend on), and tiles at the center of your view (where quality matters most). It does all this in about 40 milliseconds, faster than the blink of an eye, so the visuals keep pace with your head movements.
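The request ordering described above can be sketched as a greedy scheduler. This Python version sorts candidate TUs by time segment first, then by layer, then by angular distance from the center of the view. It adds TUs to the next batch of requests while they fit within a byte budget. It skips any enhancement layer whose lower layer is not yet available. The strict lexicographic ordering, the byte budget, and the `plan_requests` and `Candidate` names are assumptions. The paper’s ABR algorithm may weigh these factors differently.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    segment: int
    tile: int
    layer: int
    size_bytes: int
    angle_deg: float   # angular distance of the tile from the center of the view


def plan_requests(
    candidates: list[Candidate],
    downloaded: set[tuple[int, int, int]],
    budget_bytes: int,
) -> list[tuple[int, int, int]]:
    """Choose which (segment, tile, layer) TUs to request next, in order."""
    # Earlier segments first, then lower layers, then tiles nearer the view center.
    order = sorted(candidates, key=lambda c: (c.segment, c.layer, c.angle_deg))
    have = set(downloaded)
    plan: list[tuple[int, int, int]] = []
    spent = 0
    for c in order:
        key = (c.segment, c.tile, c.layer)
        if key in have:
            continue  # already on the device
        if c.layer > 0 and (c.segment, c.tile, c.layer - 1) not in have:
            continue  # an enhancement layer is useless without the layer below it
        if spent + c.size_bytes > budget_bytes:
            continue  # too big for this round; a smaller TU may still fit
        plan.append(key)
        have.add(key)
        spent += c.size_bytes
    return plan


cands = [
    Candidate(0, 0, 0, 100, 5.0),
    Candidate(0, 1, 0, 100, 30.0),
    Candidate(0, 0, 1, 200, 5.0),
    Candidate(0, 1, 1, 200, 30.0),
    Candidate(1, 0, 0, 100, 5.0),
]
print(plan_requests(cands, set(), budget_bytes=450))
# [(0, 0, 0), (0, 1, 0), (0, 0, 1)]
```

In this example, the budget covers both base-layer tiles of segment 0 and the enhancement layer for the central tile. It does not stretch to the enhancement layer for the peripheral tile.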

Real-World Results

The research team behind LTS ran a battery of tests to evaluate how well the system performs under realistic conditions. They used high-fidelity sequences from the 8i Point Cloud dataset, including scenes of people in motion. With these, they simulated user head movements and varying network conditions based on real 5G data. They compared LTS against simpler baselines: systems using only layering, only tiling, or no adaptation at all.

The results were clear. LTS reduced missing frames in live streaming by up to 99.7% and cut freeze time in on-demand streaming by up to 92% compared to the baselines. At the same time, it achieved excellent visual quality using up to 90% less data than standard formats. The system was especially strong in high-bandwidth and low-complexity conditions, but even in tougher scenarios, it consistently outperformed the alternatives.

The Future of Immersive Experiences

The implications of this work are far-reaching. With systems like LTS, we’re moving closer to a future where XR experiences are as accessible and seamless as streaming a movie. Virtual tourism, remote education, collaborative design, digital healthcare, and entertainment—all could benefit from richly detailed 3D environments that load effortlessly, even over ordinary internet connections.

Looking ahead, the researchers believe there’s room to make LTS even smarter. Future versions could adjust the number and size of tiles, layers, and segments on the fly, depending on how users move or how fast their connections are. They also point out that conventional visual metrics like PSNR (peak signal-to-noise ratio) don’t always reflect what users actually experience. Metrics like motion-to-photon latency (how long it takes for a movement to show up visually) and the risk of cyber sickness will become increasingly important in assessing XR systems.

In a world that increasingly blends the digital and physical, the ability to deliver vivid, responsive, and immersive 3D content is not just a technical curiosity—it’s a necessity. The future of XR, and perhaps even the future of human interaction, will depend on technologies like LTS to bridge the gap between imagination and reality.

Further Reading: Y.-C. Sun, Y. Shi, C.-T. Lee, M. Zhu, W.T. Ooi, Y. Liu, C.-Y. Huang, and C.-H. Hsu (2025). “LTS: A DASH Streaming System for Dynamic Multi-Layer 3D Gaussian Splatting Scenes,” In ACM Multimedia Systems Conference 2025 (MMSys ’25), March 31-April 4, 2025, Stellenbosch, South Africa. https://doi.org/10.1145/3712676.3714445