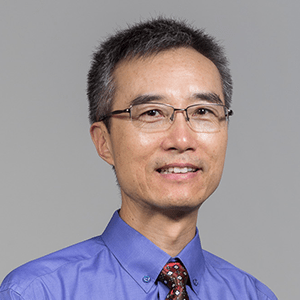

Sound and music have always been a big part of Wang Ye’s life, guiding him through a career that has spanned being a research engineer at Nokia in Finland to an associate professor at NUS’s School of Computing. “Everybody, including myself, likes music,” says Wang, who leads the Sound and Music Computing Lab.

But more specifically, Wang loves to sing. “That’s probably why singing voice processing has become a key research area in my group,” he says with a laugh. The singing voice is fascinating because it is unique among instruments, explains Wang. “It is so rich: there is nothing else that contains both lyrics and musical notation.”

Parsing out these bits of information is incredibly useful. For instance, lyric retrieval — the conversion of lyrics into text, otherwise called automatic lyric transcription (ALT) — comes in handy for adding subtitles to music, indexing audio files, and aligning lyrics.

But retrieving information from a singing voice isn’t easy. For a start, unlike the piano and other instruments, the pitch of a human voice is often unstable. For another, the words sung may not always be clear because singers sometimes sacrifice word stress and articulation to accommodate melody, tempo, and other musical aspects. Moreover, singing is often accompanied by instruments, which act as a sort of “contamination” when you’re trying to analyse the lyrics, says Wang.

The last point is especially crucial because existing lyric transcription methods tend to rely purely on audio signals as their input. “But it’s very difficult to get rid of the accompanying music in the audio format,” he says. “Think of rice and sand — it’s very easy to mix them together, but it’s very hard to separate them. It’s the same with music and lyrics.”

Three prongs

The solution to better lyric transcription, Wang realised, was to use other inputs — such as information captured from videos and sensors — to supplement audio signals. “Additional modalities are very helpful,” he explains. “For instance, deaf people can read lips without hearing the music. That’s the trick we’re borrowing here.”

The approach was unorthodox and had never before been tested in the field of music information retrieval. Wang, however, has always been a trailblazer of sorts: he pioneered Singapore’s first and only undergraduate Sound and Music Computing course over a decade ago (it is still running today, with roughly 40 students electing to read it every semester); and in 2022, he became the first SoC professor to secure funding from the Ministry of Education’s newly launched Science of Learning grant (for a project called Singing and Listening to Improve our Natural Speaking).

In 2022, Wang achieved another first when he and his students announced they had created the novel Multi-Modal Automatic Lyric Transcription (MM-ALT) system, which uses three input modalities (audio, video, and signals from wearable sensors) to transcribe lyrics from the singing voice.

To develop the system, the team first created a dataset they could work from. The effort was led by Wang’s PhD student Ou Longshen, an award-winning violinist. Together, the researchers recruited 30 volunteers and invited them to a soundproof recording studio. There, each participant chose a song from a preset list and was filmed singing it into a microphone, which allowed the team to capture both audio and visual information. Additionally, each volunteer was fitted with an earbud that could sense how their heads, jaws, and lips moved during the recording process.

With this dataset — the first of its type to be collected — Danielle Ong, a trained linguist and part-time research assistant in Wang’s lab, then worked to annotate the lyrics. Once this was completed, PhD student Gu Xiangming used his expertise in computer vision to process the videos. These three components were then integrated and used to develop the new Multi-Modal Automatic Lyric Transcription system.

Looking back, Wang says teamwork was key to their success. “It was a beautiful combination of different students and their respective, yet complementary, experience and talent. We put all this expertise into one pot and cooked a nice meal.”

Top marks

The results they obtained speak for themselves. When compared with audio-only lyric transcription methods, the new MM-ALT system performed demonstrably better. In scenarios where interference from musical instruments was high, the word-error rate (WER) of transcription was approximately 91% for audio-only methods. By comparison, MM-ALT’s technique of using three different modalities yielded a significantly lower WER of roughly 64%.

Overall, the videos proved much more useful than the sensor data for boosting transcription accuracy.
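
For readers unfamiliar with the metric, word-error rate is conventionally computed as the number of word substitutions, deletions, and insertions needed to turn the system’s transcript into the reference lyrics, divided by the number of words in the reference. The Python sketch below illustrates that standard definition only; it is not the team’s evaluation code, and the function name `word_error_rate` and the sample lyrics are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Standard WER: word-level edit distance divided by reference length.

    Illustrative helper (hypothetical name), not taken from the MM-ALT paper.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of four reference words.
print(word_error_rate("twinkle twinkle little star",
                      "twinkle little little star"))
```

Because insertions count as errors, WER can exceed 100% when a transcript contains many spurious words. On this scale, moving from roughly 91% to roughly 64% is a drop of about 27 percentage points.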

With such promising results, Wang encouraged his students to write up a paper to submit to the prestigious ACM International Conference on Multimedia. To his pleasant surprise, the paper was accepted.

“It’s very competitive: the acceptance rate is less than 20%, and getting a paper into a top conference typically takes a lot more than the two-plus months my students had,” he explains. “We started everything from scratch at the beginning of the semester and I didn’t have much hope that they could finish the project with such stellar results.”

Even more impressive, the team was awarded the Top Paper Award at the conference last October in Lisbon, Portugal. “I’m very proud of the work my students did,” says Wang.

Since then, the team has continued to work on the problem of retrieving information from singing voices — this time focusing on the other type of information it contains: musical notation, rather than lyrics. They recently submitted a paper to IEEE Transactions on Multimedia, which discusses how a new model they developed can extract music notes in the MIDI format based on audio-visual data analysis.
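
To give a sense of what a note “in the MIDI format” looks like, MIDI identifies each pitch by an integer, with 69 assigned to the A above middle C (440 Hz in standard tuning) and each semitone adding one. The Python sketch below shows only that standard frequency-to-note mapping under equal temperament; it says nothing about how the team’s model works, and the function names are hypothetical.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_midi(freq_hz: float) -> int:
    """Map a frequency in Hz to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def midi_to_name(note: int) -> str:
    """Name a MIDI note using the convention in which note 60 is C4."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"


# A sung pitch near 261.6 Hz rounds to middle C.
note = frequency_to_midi(261.63)
print(note, midi_to_name(note))
```

A real transcription system also has to decide where each note starts and ends, which is considerably harder for an unstable singing voice than this pitch lookup suggests.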

“We want to have a very natural kind of evaluation of the singing voice,” Wang explains. “A singing voice has two parts: lyrics and the notes. Ideally we want to have both outputs simultaneously.”

At the end of the day, he says: “I hope my research will be relevant to society and make a difference, while resonating with my students’ passions.”

Paper: MM-ALT: A Multimodal Automatic Lyric Transcription System