There have been many moments of disbelief throughout the pandemic, but one of the most shocking came last April, when then-U.S. President Donald Trump suggested that disinfectants could be a cure for Covid-19.

“I see the disinfectant, where it knocks it out in a minute,” said Trump at a White House briefing. “Is there a way we can do something like that, by injection inside or almost a cleaning? Because you see it gets in the lungs and it does a tremendous number on the lungs. So it would be interesting to check that.”

Although Trump claimed the next day that he was just pranking reporters, it was too late: the damage had already been done. Poison control centers across the country saw a spike in calls in the days after his comments, with reports of people taking bleach baths, gargling cleaning products, and washing vegetables with chlorine, among other things.

Trump’s baseless claim is just one of many rumours and lies that have emerged about Covid-19. Such misinformation can have dire consequences, warned the World Health Organization’s director-general Tedros Adhanom Ghebreyesus last year. “We’re not just fighting an epidemic; we’re fighting an infodemic. Fake news spreads faster and more easily than this virus, and is just as dangerous.”

“Some fake news is disinformation with malicious intent,” says Min-Yen Kan, an associate professor at NUS Computing whose research includes natural language processing and information retrieval. Apart from pandemics, it impacts politics, racial harmony, and other realms. “Fake news can disturb social behaviour, public fairness, and rationality,” he says.

As a result, news outlets and social media sites now devote large amounts of time and money to combating the scourge of fake news. “But it’s not always easy to do,” says Kan. “New things come out constantly and fact-checking takes time and considerable human effort.”

That is a big problem, especially when it comes to breaking news. “Fake news is particularly click-baity — it spreads a lot faster than real news so it’s important to catch it quickly,” he says.

Three signals

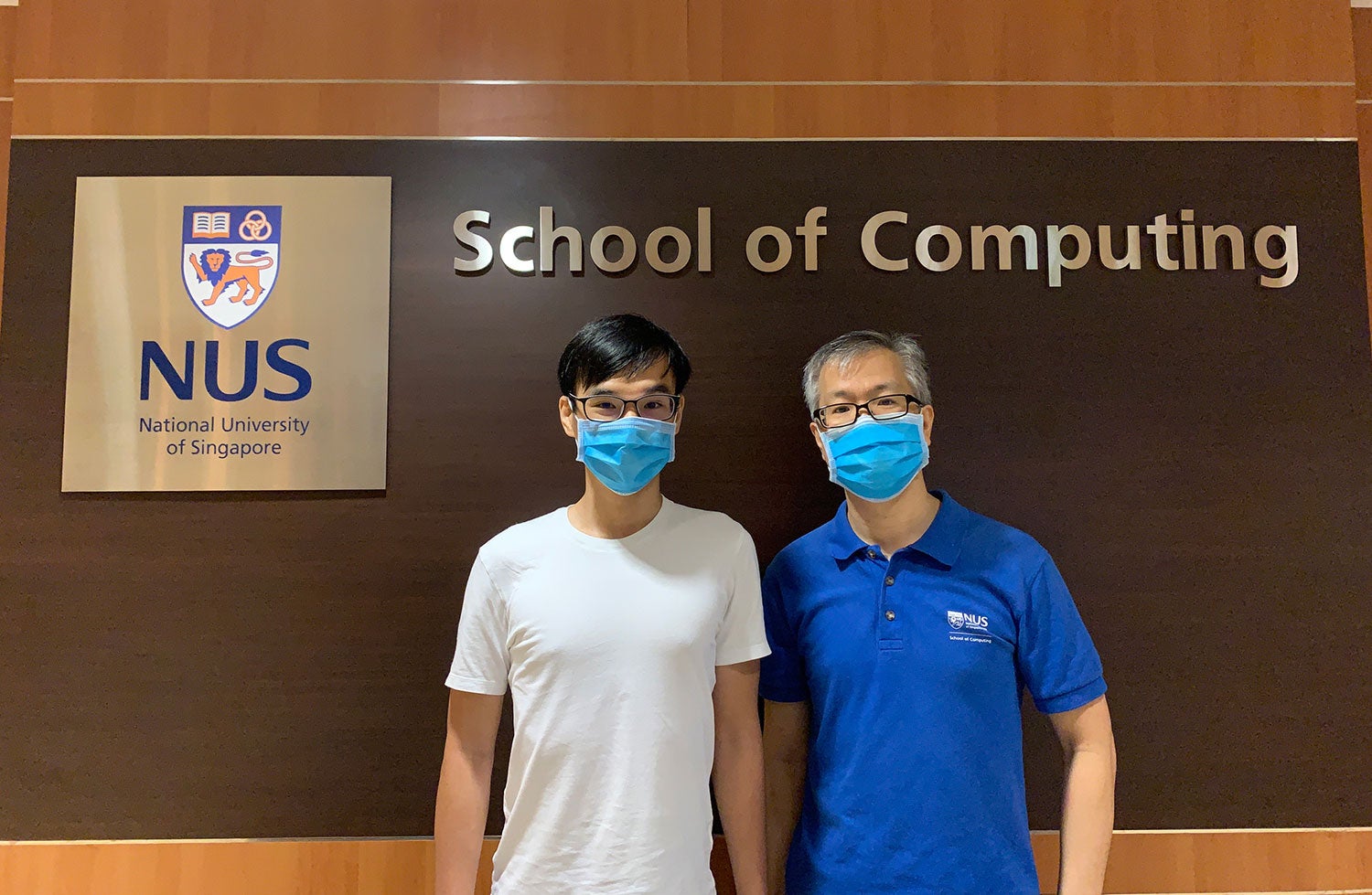

One of Kan’s talented students, Van-Hoang Nguyen, wondered: Is there a way to gauge a news article’s accuracy in real time, without relying on fact-checkers who may need a long time to verify its claims?

The answer, he soon discovered, was yes. Then an undergraduate attached to Kan’s lab, Nguyen had taken numerous AI and machine learning courses as part of his computer engineering degree.

“Machines help us automate a lot of processes, so I started thinking: ‘If we can somehow use machines to automatically detect fake news, it would be very beneficial to society,’” recalls Nguyen, who now works for PayPal and is pursuing his master’s degree part-time at NUS Computing.

“We can’t always wait for the evidence to arrive in order to fact-check the news,” he says. “Sometimes we need to look at the context around that news in order to verify it.”

A piece of news doesn’t just stand on its own. The information ancillary to it — where it is published, who is sharing it on social media, and how these people interact with others online — all offer useful clues that point to its truthfulness.

For instance, if the news is shared by a group of conspiracy theorists, “then it’s an indication that this news is not reliable,” says Nguyen. “On the other hand, if it’s shared by a reputable publisher like the BBC or CNN, it indicates that the information is likely to be credible.”

It’s also helpful to look at the types of statements people make when sharing news, he says. “Do they support or deny it? Is it a positive or negative sentiment? Are there swear words and hate speech involved?”

In addition, examining a person’s social media profile can give you a good sense of what their beliefs might be, says Nguyen. For example, someone whose profile description states ‘Vaccines are bad. It is what makes you have autism and more’ might raise red flags.

Apart from studying the individual, you can also look at how they interact with others online. This includes what kinds of accounts they follow, as well as who follows them back, says Kan. “Follower–followee relationships can tell you how reputable some of these sources are.”

The sum is more than the parts

Appreciating how useful these contextual clues are, Nguyen and Kan — together with their collaborators Dr Preslav Nakov from the Qatar Computing Research Institute and Associate Professor Kazunari Sugiyama from Kyoto University — built a fake news detection system they called FANG, short for FActual News Graph.

FANG’s prediction algorithm makes use of three pieces of information, called signals: knowledge about the news source, about the social media users who share the news, and about those users’ interactions with others. It works by representing these as nodes on a graph and modelling how news spreads through a large, interconnected network.
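To make the idea of a graph of sources, users, and news more concrete, here is a minimal sketch in Python. It is not the researchers' code: the `ContextGraph` class, the relation names (`publishes`, `follows`, `supports`, `denies`), and the example accounts are all hypothetical, chosen only to illustrate how the three signals could sit in one data structure. The real system goes further and models how news spreads through the network, which this sketch does not attempt.

```python
from collections import defaultdict


class ContextGraph:
    """Toy graph of news context (hypothetical, for illustration only).

    Nodes are (type, name) tuples; each edge carries a relation label.
    """

    def __init__(self):
        # node -> list of (relation, neighbour) pairs
        self.edges = defaultdict(list)

    def add_edge(self, src, relation, dst):
        # Store the edge in both directions so that a news node can be
        # asked who published it or who reacted to it.
        self.edges[src].append((relation, dst))
        self.edges[dst].append(("rev:" + relation, src))

    def neighbours(self, node, relation):
        return [n for r, n in self.edges[node] if r == relation]


def summarize_context(graph, news):
    """Collect the three kinds of context around one news node."""
    supporters = graph.neighbours(news, "rev:supports")
    deniers = graph.neighbours(news, "rev:denies")
    engaged = set(supporters) | set(deniers)
    # Follow relationships among the users who engaged with this news.
    follow_links = [
        (u, v)
        for u in sorted(engaged)
        for v in graph.neighbours(u, "follows")
        if v in engaged
    ]
    return {
        "publishers": graph.neighbours(news, "rev:publishes"),
        "supporters": supporters,
        "deniers": deniers,
        "follow_links": follow_links,
    }


# Made-up example data: one source, one article, two users.
article = ("news", "article-1")
alice = ("user", "alice")
bob = ("user", "bob")

graph = ContextGraph()
graph.add_edge(("source", "example-news.test"), "publishes", article)
graph.add_edge(alice, "follows", bob)
graph.add_edge(alice, "supports", article)
graph.add_edge(bob, "denies", article)

summary = summarize_context(graph, article)
print(summary)
```

A detector could turn a summary like this into features for a classifier. How FANG actually combines the signals is described in the paper, not here.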

It’s a novel approach, says Kan, because while “many different works have used various pieces of the puzzle, nobody has integrated them together before.”

“So this work actually encapsulates several different threads into one,” he says. “And what we found was that the signals are synergistic. If you just had the parts alone, you wouldn’t get such a good performance, but because we put the signals together, the sum was more than the parts.”

When tested on a Twitter dataset — comprising close to 55,000 users who engaged with 448 fake news articles and 606 real ones — the researchers found that FANG outperformed three other detection models.

“The main benefit of FANG is that it’s highly accurate compared to the other methods,” says Nguyen. “And it’s very efficient — you only need limited labelled data to train and optimise the model. That also makes it very scalable too.”

The team presented their findings at the prestigious ACM International Conference on Information and Knowledge Management last October, where they won an award for Best Paper.

Their algorithm, which has been cited by Facebook researchers, is now freely available for others to use on GitHub, the code-sharing platform for developers. They hope that others, too, may benefit from FANG. Already, Nakov is working on integrating the tool into the fake news detector he is building in Qatar.

“The main takeaway is that using all the contextual information about news helps us to potentially identify fake news faster than otherwise possible,” says Kan. “It’s a complementary method to fact-checking.”

Nguyen adds: “A general opinion of AI is that the machine is not supposed to replace people but to assist them. So we still need actual human fact-checkers, but we hope to make their job much easier.”

Paper: FANG: Leveraging Social Context for Fake News Detection Using Graph Representation