The Hidden Influence of Touch: How Our Digital Interfaces Quietly Reshape the Way We Think

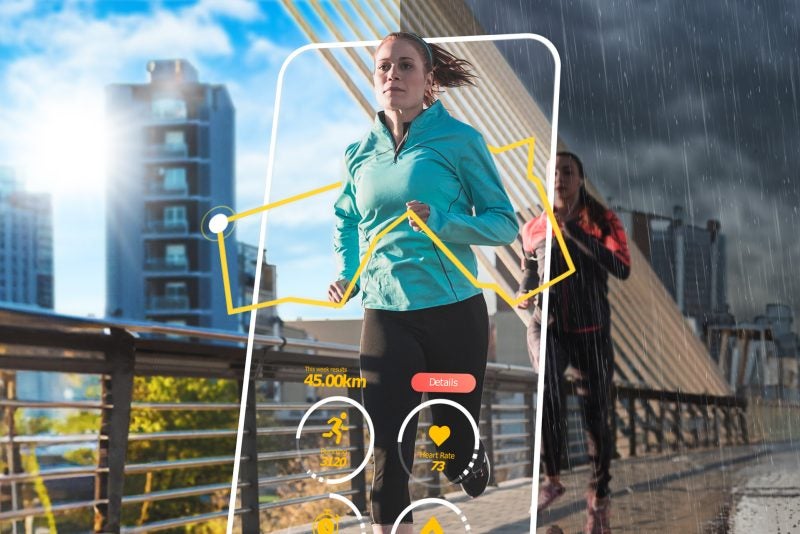

You probably don’t even notice it anymore. One moment you’re scrolling through Instagram on your phone, the next you’re clicking a mouse on your laptop to finish a work document. We transition between digital devices with barely a thought. But what if those seemingly small physical differences, like the direct tap of your finger on a touchscreen versus the indirect click of a mouse, are not so small after all?

What if they are quietly reshaping the way our brains process information, nudging us toward certain kinds of thinking and decision-making without our awareness?

That is the provocative question raised by a fascinating line of research conducted by NUS Computing’s Provost’s Chair Professor Teo Hock Hai and his collaborators, all of whom are NUS Computing PhD alumni: Dr. Peng Xixian (Assistant Professor at Zhejiang University), Dr. Wang Xinwei (Senior Lecturer at the University of Auckland) and Dr. Guo Yutong (Assistant Professor at the Chinese University of Hong Kong, Shenzhen). Their work suggests that our digital interfaces are not neutral tools. They are subtle but powerful architects of thought, influencing whether we focus on concrete, detail-oriented tasks or abstract, big-picture reasoning.

Given that mobile devices now account for over 60% of global online traffic, and the touchscreen display market is projected to exceed $240 billion by 2032, this is not a trivial matter. Understanding how our interfaces shape our cognition could have profound implications for education, commerce, health, and even the development of creativity in younger generations.

From Tools to Cognitive Shapers

To appreciate the significance of this research, it helps to revisit two foundational psychological theories – embodied cognition and construal level theory (CLT).

Embodied cognition argues that our bodily experiences directly influence the way we think. The movements of our hands, the feel of an object, the physicality of interaction all feed back into our cognitive processes. A touchscreen, with its direct tap, swipe, and pinch, creates a sense of immediacy and ownership that a mouse pointer, with its layer of indirection, cannot.

CLT, meanwhile, distinguishes between two modes of mental representation:

- Concrete construals: detail-focused, context-specific, concerned with the how.

- Abstract construals: broad, generalised, concerned with the why.

Bringing these theories together, the researchers proposed a bold hypothesis: touch interfaces bias us toward concrete thinking, while mouse-based interfaces allow more abstract thought to flourish.

What the Brain Reveals

The first set of experiments went straight to the source, the brain.

Participants used a convertible desktop computer that could be operated either by touch or by mouse while EEG recorded their brain activity. They were given a category inclusiveness task: deciding whether items such as apples (clear exemplars) or cucumbers (borderline cases) counted as “fruit.”

The results were striking. When using touch, participants were stricter. They were less likely to include borderline items like cucumbers, showing a more concrete, detail-oriented focus.

The EEG readings confirmed this shift at a neural level. Touch use increased theta band synchronisation in the parietal cortex, associated with action-oriented, sensorimotor processing. At the same time, it reduced alpha band desynchronisation, linked to abstract thought. In other words, the brain itself was tilting toward concreteness when the finger, rather than the mouse, drove the interaction.

Beyond the Brain: Behavioural Spillovers

But the researchers didn’t stop at brain waves. They wanted to know: do these shifts spill over into everyday tasks?

In a second study, participants first completed an online shopping task, either by touchscreen or by mouse. Later, they filled out a behaviour identification form, where they had to describe simple actions. “Making a list,” for instance, could be described concretely (“writing things down”) or abstractly (“getting organised”).

Consistently, touchscreen users gave more concrete responses. What’s more, the effect carried over from the shopping task into this unrelated exercise. Simply engaging with a touchscreen primed participants for detail-oriented, how-focused thought that persisted beyond the initial activity.

This suggests that our tech habits may create subtle but lasting shifts in our cognitive defaults. Spend an hour swiping through a shopping app on your iPad, and you may find yourself approaching your next task (e.g., drafting a strategy report) with a more practical, detail-fixated mindset.

Perception Itself is Altered

The third study pushed the boundary further. Could interfaces even change how we literally see the world?

Using a classic similarity-matching task, participants viewed large shapes composed of smaller component shapes (for example, a big letter H made of tiny letter Fs). They then had to match the target to either another shape with the same global structure (big H) or one with matching local components (tiny Fs).

Touch users consistently gravitated toward the local match. Once again, the trees outweighed the forest. The detail-first mindset induced by touch appeared to extend even to visual perception.

Decisions in the Real World

The implications become especially powerful when translated into real-world decision contexts.

Prof. Teo’s team drew on the construal fit principle, which holds that people are more persuaded by information that matches their current cognitive mode. Concrete thinkers prioritise feasibility (how easy or practical something is), while abstract thinkers emphasise desirability (the value or importance of the outcome).

In a study of consumer choice, participants had to pick between two cameras: one highly feasible but less desirable (simple to use but with limited features) and one highly desirable but less feasible (feature-rich but complex to operate).

Touch users leaned strongly toward the feasible option. Their interface nudged them to prize ease of use over aspirational outcomes.

The team then extended this to areas of public importance. In one experiment, people read cybersecurity messages framed either around feasibility (“simple steps to protect your network”) or desirability (“protect your privacy and prevent identity theft”). On touch devices, feasibility-framed messages were markedly more persuasive.

In another experiment with an online skin cancer detection tool, messages emphasising how easy the tool was to use significantly increased actual adoption among touchscreen users. Here, the choice of words and interface literally altered health behaviours.

Why This Matters

Taken together, the findings paint a compelling picture:

- Neural activity: Touch primes the brain for sensorimotor, detail-focused processing.

- Cognitive style: Touch nudges people toward concrete construals.

- Perception: Touch biases us toward local, detail-level features.

- Decision-making: Touch increases preference for feasible, how-focused options.

- Persuasion and behaviour: Messages framed around feasibility resonate more on touch, even altering health decisions.

This matters because touchscreen use is no longer a niche activity. It dominates daily life, from the phones in our pockets to the tablets in our classrooms. If touch subtly tilts us toward concrete thinking, the cumulative effect on society, through our shopping habits, our communication strategies, our educational approaches, even our creativity, could be profound.

Implications for the Future

The practical applications of this research are wide-ranging. Here are a few examples of where it might matter most:

- Education: Tablets are increasingly common in classrooms. If touch biases children toward concrete thinking, educators may need to deliberately balance touch-based activities with tasks that foster abstract reasoning. Otherwise, prolonged reliance on touch could hinder the development of higher-order, creative thought.

- Public Health Campaigns: Designing health apps and campaigns for mobile platforms may require framing messages around feasibility – emphasising step-by-step guidance – rather than lofty appeals to values. Doing so could significantly boost adoption of preventive measures.

- E-commerce: Online retailers could tailor product descriptions based on interface. On mobile, highlighting ease of use and practicality might be more persuasive; on desktops, aspirational features could take precedence.

- Workplace Productivity: As remote and hybrid work continues, the choice of device may shape how employees approach tasks. Touch devices could be best for detail-oriented execution, while desktops might support broader, strategic planning.

- Interface Design for Emerging Tech: As augmented reality glasses, wearables, and even brain-computer interfaces enter the mainstream, we will need to anticipate how each new mode of interaction shifts cognition. The lessons from touch versus mouse offer a critical template for future design.

A Silent Architect of the Mind

The real revelation of this research is simple but unsettling: our devices do not just deliver information. They condition how we process it. They are silent architects of thought, shaping whether we focus on the granular “how” or the overarching “why.”

This doesn’t mean touch is bad and mouse is good. Both modes of thinking are essential. But awareness matters. By understanding these subtle nudges, designers, educators, policymakers, and even ordinary users can make more intentional choices.

The next time you tap your phone screen, consider this: your device might be priming you to focus on the immediate, the feasible, the practical. And the next time you step back from the screen to consider the big picture, you may want to ask yourself whether you should also step away from the touchscreen.

As technology continues its relentless evolution, from the glass rectangles of today to the immersive interfaces of tomorrow, the central question endures: what new ways of thinking will our tools quietly teach us?

Read the full research: https://doi.org/10.1287/isre.2023.0191