Powering Up on the World Stage:

NUS Students Take on Their First On-Site Supercomputing Challenge

When six students from the NUS School of Computing rolled crates of servers into a vast convention hall in St. Louis, they were doing far more than preparing for a competition. They were stepping into the world of High-Performance Computing (HPC), where the challenge lies in building, operating, and optimising powerful computing systems under pressure.

Competing as Team Kent Ridge, the students made their first-ever on-site appearance at the Student Cluster Competition (SCC), held during Supercomputing 2025 (SC25) in November. Over an intense 48-hour period, the team designed, deployed, and managed their own computing cluster, running a demanding suite of real scientific and machine learning applications.

It marked a significant milestone for the team – and for NUS’ growing presence in international supercomputing competitions.

From Virtual to Physical

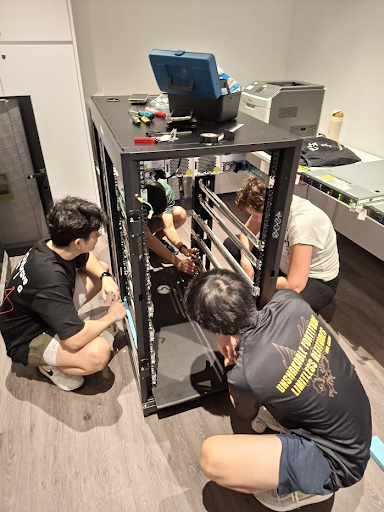

SCC is widely regarded as one of the most rigorous training grounds for student HPC talent. Unlike virtual formats, the on-site competition requires teams to take full responsibility for every layer of the system: hardware assembly, networking, software configuration, performance tuning, and troubleshooting – all under strict time and resource constraints.

At SC25, Team Kent Ridge competed against seven other finalist teams from leading universities worldwide. As an on-site team, the students had to operate a cluster that they deployed and managed entirely by themselves.

Despite working with a comparatively modest hardware setup, the team achieved its primary goal: submitting valid results for every required application and benchmark. This allowed NUS to finish ahead of more experienced teams equipped with significantly stronger systems. For example, one team that NUS surpassed competed with eight NVIDIA H200 Graphics Processing Units (GPUs) on a single node. NUS, by contrast, operated eight NVIDIA H100 GPUs distributed across four nodes, a layout that introduced additional networking and scheduling challenges.

Even with these constraints – and with Central Processing Unit (CPU) and GPU workloads competing for the same resources – the team completed all requirements, demonstrating strong technical maturity, resilience, and teamwork in a competition known for its complexity.

Inside the 48-Hour Sprint

Time management quickly emerged as the most pressing challenge. Many SCC applications require large amounts of memory, limiting how many workloads can run concurrently on a single system.

Applications such as the Exascale Climate Emulator (ECE) placed heavy demands on system memory, which in turn affected the performance of the team's networked storage, built on the BeeGFS parallel file system. These constraints forced careful scheduling decisions throughout the competition.

In the reproducibility challenge, the team encountered an unexpected hurdle when the provided application failed to run on their system without Remote Direct Memory Access (RDMA). Rather than abandoning the task, the students patched and adapted the code themselves. This proved a valuable lesson in scientific computing rigour and verification.

Other technical discoveries followed. The team found that Structural Simulation Toolkit (SST) workloads did not scale well horizontally across nodes, so they scaled the application up on a single node instead. For the High-Performance Linpack (HPL) and HPL mixed-precision benchmarks, prior experience helped. Even so, the students were surprised to find that their RDMA configuration performed worse than standard Transmission Control Protocol (TCP) in certain cases.

Perhaps most notably, the team devised a custom solution to run MLPerf machine learning benchmarks across multiple nodes. Existing implementations did not support their configuration, so the students implemented a combined tensor and data parallelism strategy using the gRPC remote procedure call framework, while carefully avoiding out-of-memory errors. The team later contributed these findings back to the MLPerf community.

“HPC is not always a glamorous job,” said team leader Win Way Yan, a Year 2 Computer Science student. “A lot of time is spent building applications and troubleshooting rather than truly optimising code. But this is a character-building experience – the debugging and problem-solving mindset applies everywhere.”

Built on Collaboration

The SC25 team was advised by Dr Cristina Carbunaru and Dr Sriram Sami from the NUS School of Computing. Preparation for SC25 involved weekly technical meetings, paired ownership of applications, and extensive collaboration.

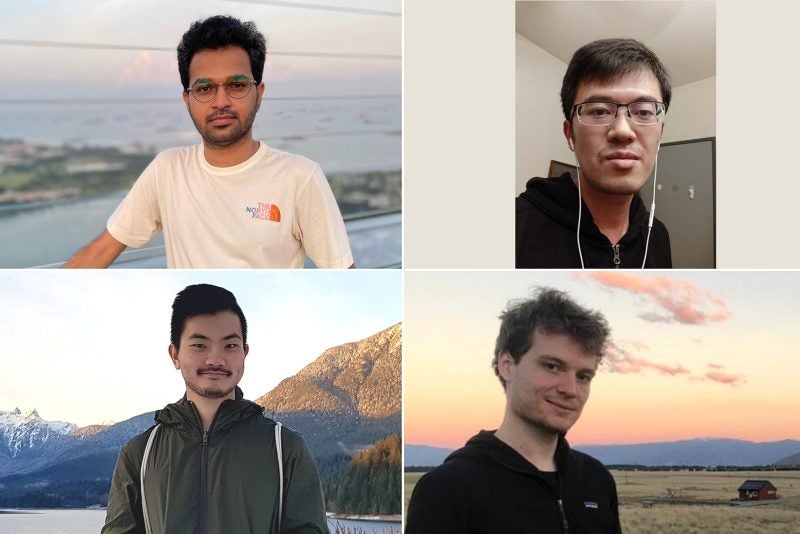

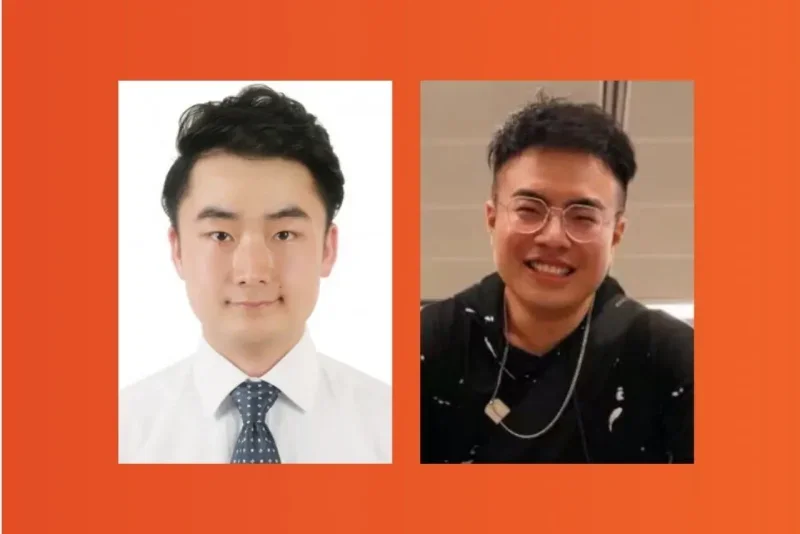

Team Kent Ridge comprised Taanish Bhardwaj, Gabriella, Amy Ling, Win Way Yan, Yeo Zhi Shen, and Xinyang Yu – students from Computer Science and Computer Engineering whose combined strengths spanned software, systems, and hardware. Together, they navigated the demands of a physical high-performance computing competition by stepping into complementary roles and supporting one another through challenges ranging from BIOS configuration and network routing to compiler tuning.

Despite being new to physical HPC competitions, the students assembled a complete cluster from scratch within one week, without vendor support. This is a notable achievement for a first-time on-site team.

“Participating in the Student Cluster Competition is an invaluable educational experience,” said Dr Cristina Carbunaru. “Such competitions are a powerful complement to formal coursework. Watching the team take ownership of an entire cluster – from hardware to software to benchmarking – and work through challenges collectively has been incredibly rewarding.”

Learning Beyond the Classroom

On an institutional level, NUS’ participation in SC25 reflects its commitment to developing the HPC landscape within the University and across Singapore. For students in the School of Computing’s Parallel Computing specialisation, the competition provided rare hands-on exposure to HPC hardware, industry tooling, and real-world scientific applications beyond the standard curriculum.

Beyond technical skills, industry-led workshops at SC25 offered insights into emerging hardware architectures and evolving GPU development trends. They also introduced the students to the broader international HPC community.

From First Steps to Real Momentum

The SC25 experience has already opened the next chapter. Team Kent Ridge has qualified to compete in person in the Student Cluster Competition at the International Supercomputing Conference 2026 (ISC26), to be held in Hamburg, Germany, in June 2026.

Selected from 31 applicants for just 10 in-person slots, NUS will be the only Singapore team competing at ISC26. The selection reflects the international HPC community’s confidence in the team’s potential and its upward trajectory.

More importantly, SC25 has helped the team and its advisors sharpen their understanding of what it takes to compete at the highest level. That understanding spans hardware architecture, software tooling, manpower allocation, and logistics. With these lessons in hand, Team Kent Ridge is no longer just gaining exposure. The students now know where they can improve, and how to do it better next time.