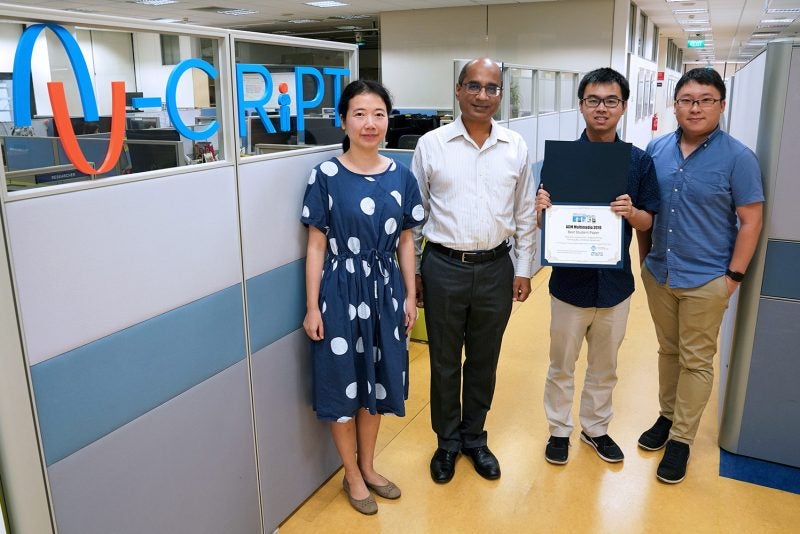

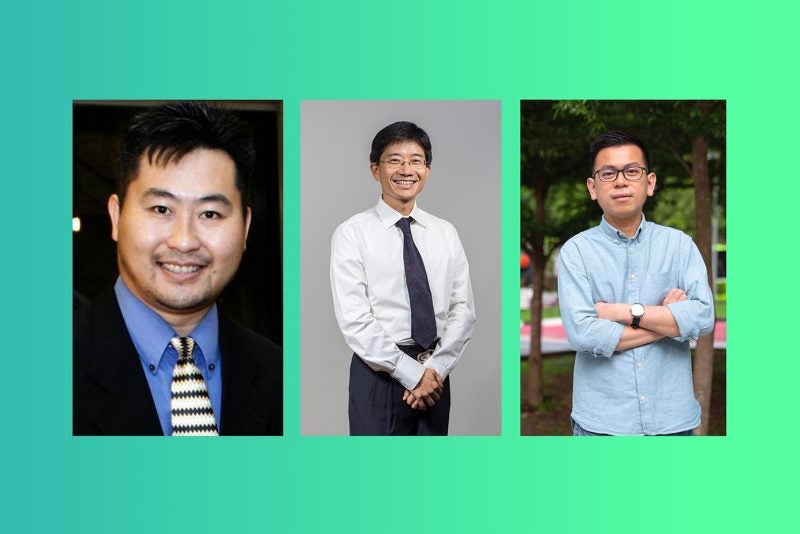

3 November 2020 – Professor Chua Tat Seng, Kwan Im Thong Hood Cho Temple Chair Professor at NUS Computing and Director of the NUS-Tsinghua Extreme Search Center (NExT++), won the Best Paper award at the ACM Multimedia Conference. The conference was held online from 12 – 16 October 2020, and is a leading international forum for researchers focusing on advancing the research and applications of multiple media such as images, text, audio, speech, music, sensor and social data.

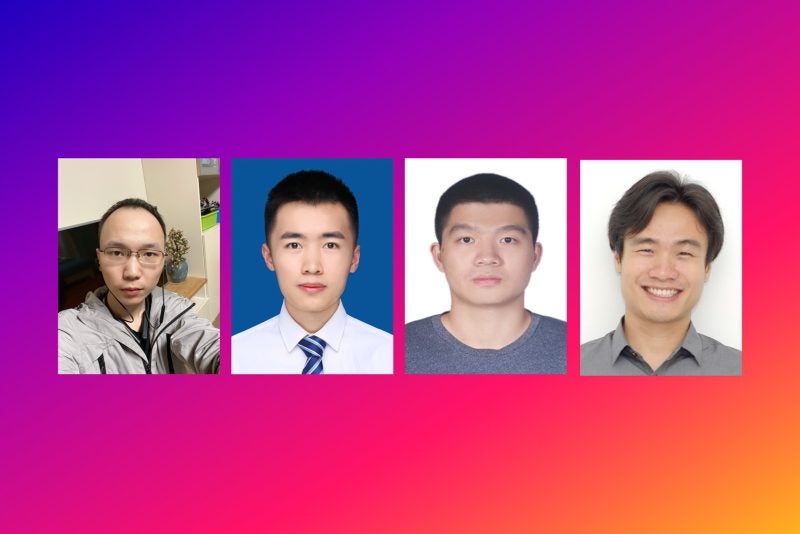

The research team comprised Professor Chua; Hongru Liang, a visiting research intern at NUS Computing; Wenqiang Lei, a post-doctoral research fellow at NExT++; Paul Yaozhu Chan, a research engineer at A*STAR’s Institute for Infocomm Research (I²R); Professor Zhenglu Yang from Nankai University’s College of Computer Science; and Professor Maosong Sun from Tsinghua University’s School of Information Science and Technology.

In their paper, the team discuss modeling symbolic music comprehensively by integrating pitch, rhythm and dynamics information at the note level, instead of representing music notes using pitch information alone. Symbolic music refers to music stored in a format that has explicit information such as note onsets and pitch.
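To make the contrast concrete, the short Python sketch below compares a pitch-only view of a melody with a note-level view that also carries rhythm and dynamics. It is an illustration only and not the encoding used in the paper: the `Note` class, its field names, and the use of MIDI-style pitch and velocity numbers are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    """Hypothetical note record; field names are assumptions for illustration."""
    pitch: int       # MIDI-style pitch number (60 = middle C)
    onset: float     # start time, in beats (rhythm)
    duration: float  # length, in beats (rhythm)
    velocity: int    # MIDI-style velocity, used here as a proxy for dynamics


def pitch_only(notes):
    """Represent each note by its pitch alone."""
    return [n.pitch for n in notes]


def note_level_features(notes):
    """Represent each note by pitch, rhythm (onset, duration) and dynamics."""
    return [(n.pitch, n.onset, n.duration, n.velocity) for n in notes]


# Two middle Cs played with different lengths and loudness, then an E.
melody = [
    Note(pitch=60, onset=0.0, duration=1.0, velocity=80),
    Note(pitch=60, onset=1.0, duration=0.5, velocity=40),
    Note(pitch=64, onset=1.5, duration=0.5, velocity=100),
]

print(pitch_only(melody))           # the two Cs look identical
print(note_level_features(melody))  # the two Cs are now distinguishable
```

In the pitch-only view the first two notes cannot be told apart, while the note-level view keeps the differences in timing and loudness that the team argue a comprehensive model should capture.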

The team present the first comprehensive model of its kind, which forms the basis for a wide range of music tasks, such as music retrieval, genre classification, phrase completion, accompaniment assignment, and music generation.

This model can be pre-trained on large-scale music datasets, and easily fine-tuned for different tasks. In the paper, the team successfully demonstrated it on three different music tasks: melody completion, accompaniment suggestion and genre classification.

“Music in a way is similar to language, as it is also made up of a sequence of music notes, similar to how speech is made up of words and sentences,” explained Professor Chua. “The only difference is that music is more complex, as a note comprises information on pitch, rhythm and dynamic, and the sequence comprises not just the note sequence in the form of melody, but also the accompanying harmony information.”

At the sequence level, music is expressed as a melody enhanced with different harmonies. Combining these, at both the note and sequence levels, models the music representation comprehensively.

“The model will enrich research and applications on symbolic music, which is one of the basic representations of stored music,” said Professor Chua.

Professor Chua likened the team’s model to the Bidirectional Encoder Representations from Transformers, or BERT for short, a revolutionary machine-learning technique for natural language processing (NLP). The field of NLP focuses on programming computers to process, read, and analyse large amounts of natural language data.

BERT, which has produced state-of-the-art results in tasks such as question answering and natural language inference, was developed by Google researchers to better understand user searches. The model does not process each word one by one. Instead, it processes the full context of a word by looking at the sentences and words before and after it.
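The difference between reading word by word and using the full context can be sketched in a few lines of Python. This is not BERT itself, which relies on a trained neural network; the two helper functions and the example sentence are hypothetical and only show which neighbouring words are visible under each approach.

```python
def left_context(tokens, i):
    """Words visible when reading strictly left to right, up to position i."""
    return tokens[:i]


def bidirectional_context(tokens, i):
    """Words visible when looking both before and after position i."""
    return tokens[:i] + tokens[i + 1:]


tokens = ["the", "bank", "of", "the", "river"]
target = 1  # position of the ambiguous word "bank"

print(left_context(tokens, target))           # ['the']
print(bidirectional_context(tokens, target))  # ['the', 'of', 'the', 'river']
```

With only the left context, nothing indicates which sense of "bank" is meant; with the words on both sides, "river" becomes available to resolve it.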

Professor Chua and his team were inspired by the research and development behind BERT, which has greatly broadened the effectiveness and scope of NLP research.

“Our comprehensive model for music will play a similar role as BERT did for NLP: it will enrich a wide variety of music modeling and applications. Moreover, the resulting framework will be useful for many multi-dimensional and temporal media, like video,” said Professor Chua.

The team’s paper beat 19 other research papers to win the Best Paper award, the highest award conferred on any paper presented at the conference.

“We are honoured to be receiving this award,” said Professor Chua on behalf of the team. “It is particularly significant as music is not a mainstream topic in this conference. We are delighted that the community is able to recognise the significance of this work, and its potential impact in revolutionising music modelling and applications. I also believe that this framework will be useful for many other multi-dimensional and temporal media, such as videos.”

Paper:

PiRhDy: Learning Pitch-, Rhythm-, and Dynamics-aware Embeddings for Symbolic Music