22 January 2019 – Associate Professor Roger Zimmermann, NUS Computing alumni Dr Yin Yifang and Dr Rajiv Ratn Shah, and their collaborators, won the Best Poster Runner-Up Award at the 20th IEEE International Symposium on Multimedia (ISM). The conference was held in Taichung, Taiwan, from 10 to 12 December 2018.

The IEEE ISM conference is the flagship conference of the IEEE Technical Committee on Multimedia and an international forum for researchers to exchange information on advances in multimedia computing. The team won the award for their speech system, MyLipper, which reconstructs words and sentences from a person’s lip movements in a silent video. “The research was about giving a voice to people who cannot speak,” said A/P Zimmermann, on behalf of his team.

“Lipreading has a lot of potential applications in domains such as surveillance, video conferencing, healthcare, and entertainment,” A/P Zimmermann added. However, the team realised that current lipreading systems are limited because they can only convert lip movements into text. The team therefore proposed a multi-view lipreading system that converts lip movements into audio. The model takes silent video captured from multiple angles and produces audio based on the mouth movements detected in the footage. According to A/P Zimmermann, the proposed model performed markedly better than single-view speech reconstruction models. The team also found that the model works in near real time and still functions in speaker-dependent, speaker-independent, out-of-vocabulary, and language-independent settings.

“Creating this system was very challenging because lipreading is a difficult task, even for people with hearing impairments,” said A/P Zimmermann. “However, advancements in artificial intelligence have helped us model the correlation between lip movements and their corresponding sounds. Our work is one of the first on speech reconstruction to leverage multiple views.”

“We were very excited to have won the award,” A/P Zimmermann added. “It was a great experience not only to see audience members react when they realised it is possible to reconstruct speech from silent videos, but also to receive this recognition of our work from the multimedia community.”

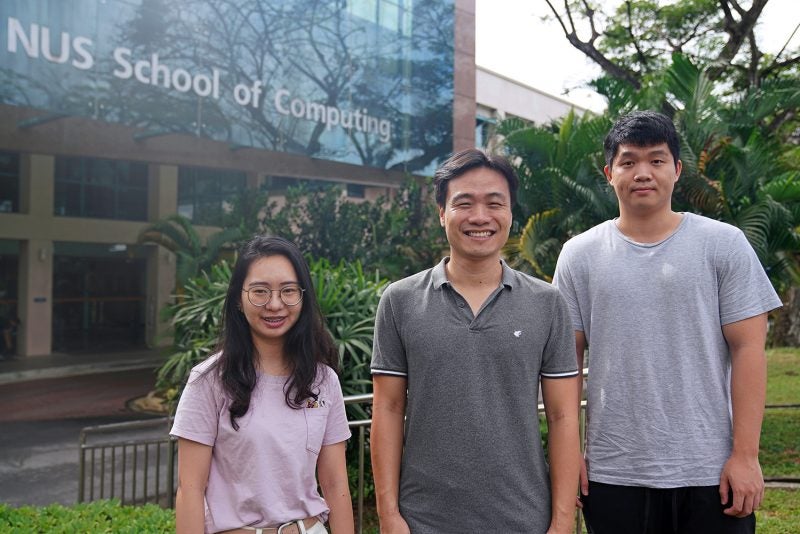

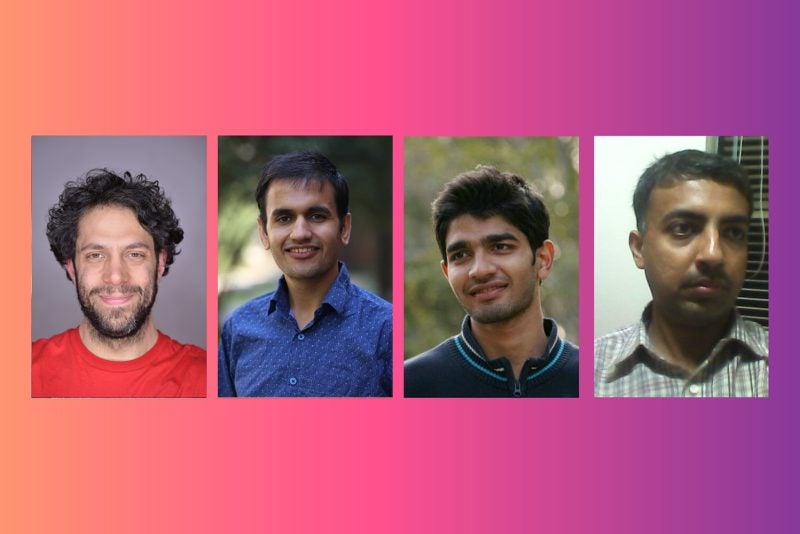

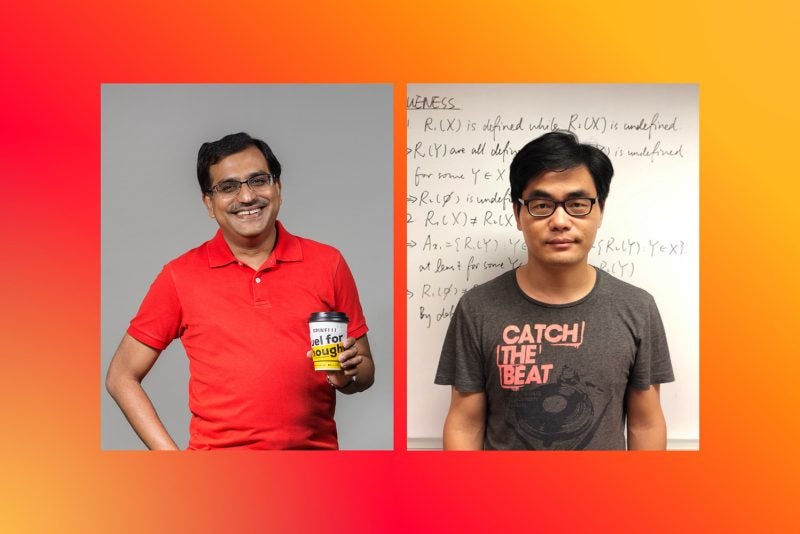

About the team

A/P Zimmermann is an Associate Professor at NUS Computing. His research interests include streaming media architectures, Dynamic Adaptive Streaming over HTTP (DASH), and Software-Defined Networking.

Dr Yin Yifang and Dr Rajiv Ratn Shah received their PhDs in Computer Science from NUS Computing in 2016 and 2017, respectively. Both were supervised by A/P Zimmermann during their doctoral studies. Dr Yin is currently a Research Fellow at the Grab-NUS AI Lab, anchored at the NUS Institute of Data Science. Dr Shah is an Assistant Professor at the Indraprastha Institute of Information Technology (IIIT) Delhi. A/P Zimmermann, Dr Yin, and Dr Shah previously won the Best Paper Award at the 7th ACM SIGSPATIAL International Workshop on GeoStreaming (IWGS 2016).

Dr Rajiv Ratn Shah is also the founder of the Multimodal Digital Media Analysis Lab (MIDAS) at IIIT Delhi. The trio, together with MIDAS researchers Rohit Jain, Mohd Salik, and Yaman Kumar, authored the award-winning paper, “MyLipper: A Personalised System for Speech Reconstruction using Multi-View Visual Feeds”.