08 March 2022 – A team comprising a Master’s student and two graduates won the Best Student Paper award at the 17th International Conference on Computer Graphics Theory and Applications (GRAPP 2022), which took place online in February this year. GRAPP is a conference that brings together researchers, engineers and practitioners interested in both theoretical advances and applications of computer graphics.

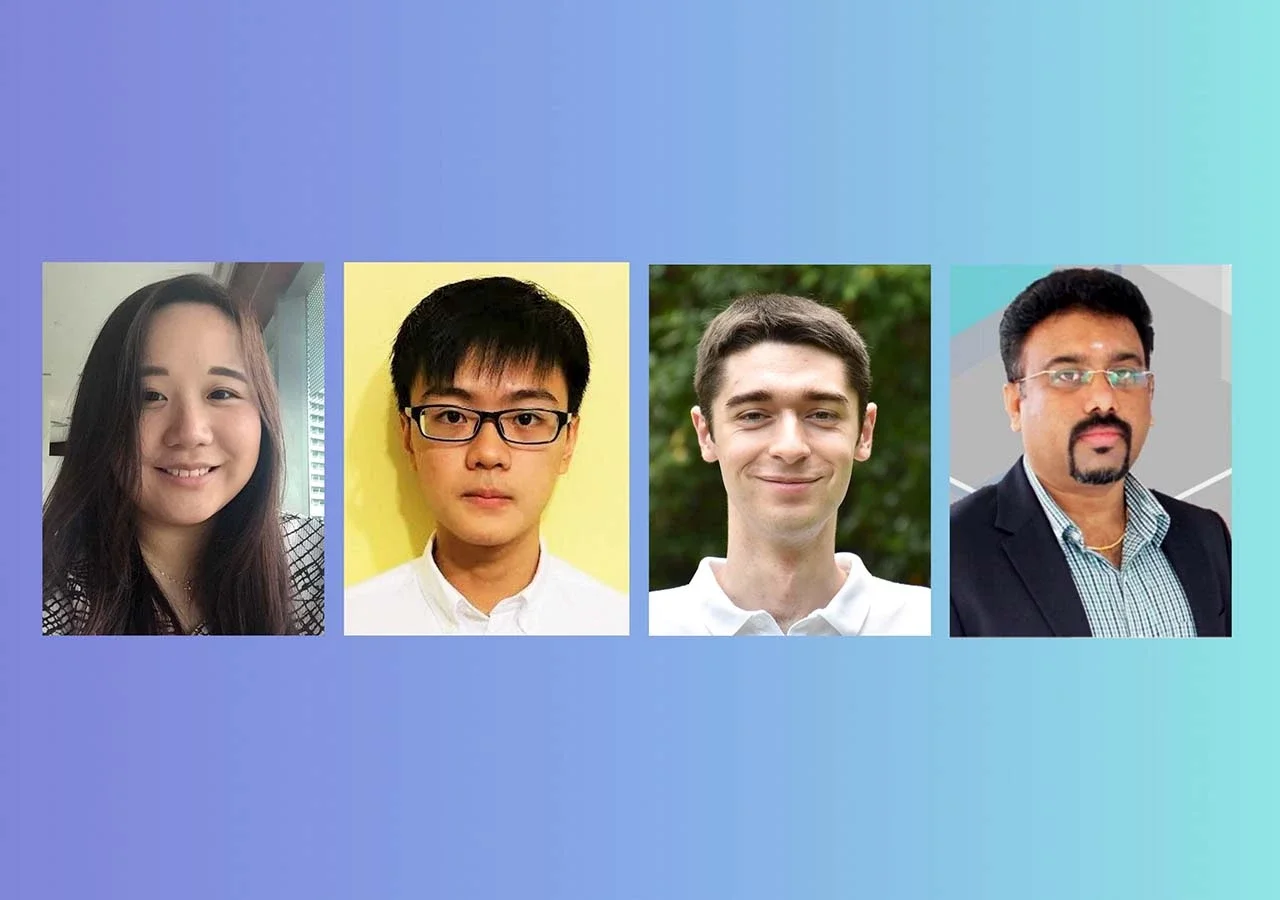

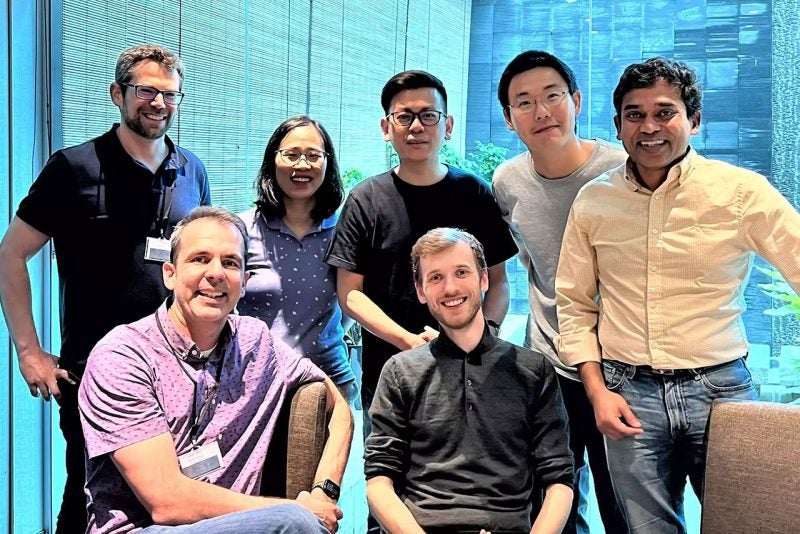

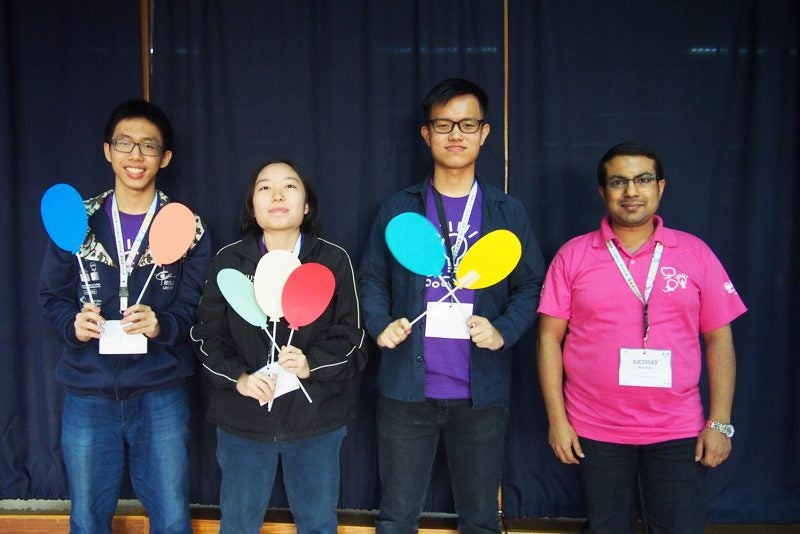

Master of Science in Computer Science student Tan Yu Wei, Computer Science graduate Chua Yun Zhi Nicholas, and Master of Computing in Computer Science graduate Biette Nathan Jean Emmanuel, along with NUS Computing Senior Lecturer Dr Anand Bhojan, took home the award for their paper on real-time rendering of the depth of field effect in games.

In their paper, “A Hybrid System for Real-time Rendering of Depth of Field Effect in Games”, the team developed a way to improve the cinematic look of real-time applications, such as video games and metaverse experiences.

One key component of cinematic quality is the depth of field effect in cameras, which makes objects outside the in-focus range appear blurred. Depth of field refers to the distance between the nearest and farthest objects that are in sharp focus in an image. One example of the effect is the blurred background in a close-up shot of a character.
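To make the idea concrete, the amount of blur for an out-of-focus object is commonly estimated with the textbook thin-lens "circle of confusion" formula. The sketch below is only an illustration under that assumption; the function name and parameters are hypothetical and are not taken from the team's paper or system.

```python
def circle_of_confusion(focal_length_mm, f_number, focus_dist_mm, object_dist_mm):
    """Approximate blur-circle diameter (mm) on the sensor, thin-lens model.

    Assumes focus_dist_mm > focal_length_mm and object_dist_mm > 0.
    """
    # Aperture diameter follows from the focal length and the f-number.
    aperture_mm = focal_length_mm / f_number
    # The blur grows with the object's distance from the plane of focus.
    defocus = abs(object_dist_mm - focus_dist_mm) / object_dist_mm
    magnification = focal_length_mm / (focus_dist_mm - focal_length_mm)
    return aperture_mm * defocus * magnification


# A 50 mm lens at f/2 focused on a character 2 m away.
in_focus = circle_of_confusion(50, 2, 2000, 2000)    # character
background = circle_of_confusion(50, 2, 2000, 4000)  # object 4 m away
foreground = circle_of_confusion(50, 2, 2000, 1000)  # object 1 m away
print(in_focus, background, foreground)
```

In this model, an object halfway between the camera and the plane of focus is blurred more than an object twice as far away as the plane of focus.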

“For the depth of field effect, games usually use a filter to blur the image after it has been generated. This leads to an inaccurate blur especially for out-of-focus objects close to the camera which should appear semi-transparent,” explained Dr Bhojan on behalf of the team.

“To fix this issue, we wanted to use ray tracing to simulate the light propagation around these out-of-focus objects, which can give more accurate semi-transparencies closer to the effect of real-world cameras. With new graphics cards, we can perform ray tracing efficiently and still maintain interactive frame rates for games. Our technique is significant, as it is the very first proposal for a hybrid rendering solution for this issue of semi-transparency,” he said.

“The approach facilitates easy separation of ray tracing and rasterization pipelines, which has the potential to be a key technique in bringing cinematic quality and realism to mobile metaverse devices such as metaverse headsets and glasses,” he explained.

One challenging aspect of the project was implementing the classic technique currently used in games and improving it with the team's new ray tracing approach. The team had to integrate the two techniques, and devise ways to fix the many visual artifacts this introduced without incurring too much computational overhead.

Dr Bhojan added that the team is working on improving effects such as motion blur. They also hope to improve graphics on other platforms with their new ray tracing approach.

“We are also looking to cater to interactive 3D media applications on mobile and VR or AR platforms, or the metaverse, which cannot perform ray tracing efficiently. We plan to do so by ray tracing on the cloud instead, and leveraging 5G technology and edge computing to send the data over to the client in real-time,” Dr Bhojan said.

The idea began as a simple dissertation by Biette.

“We were overjoyed to learn that we had won the award. The idea came about 3 years ago and we have gone through multiple rounds of improvements since. The award feels like a recognition of our hard work and perseverance,” said Dr Bhojan.