Hearing Every Voice:

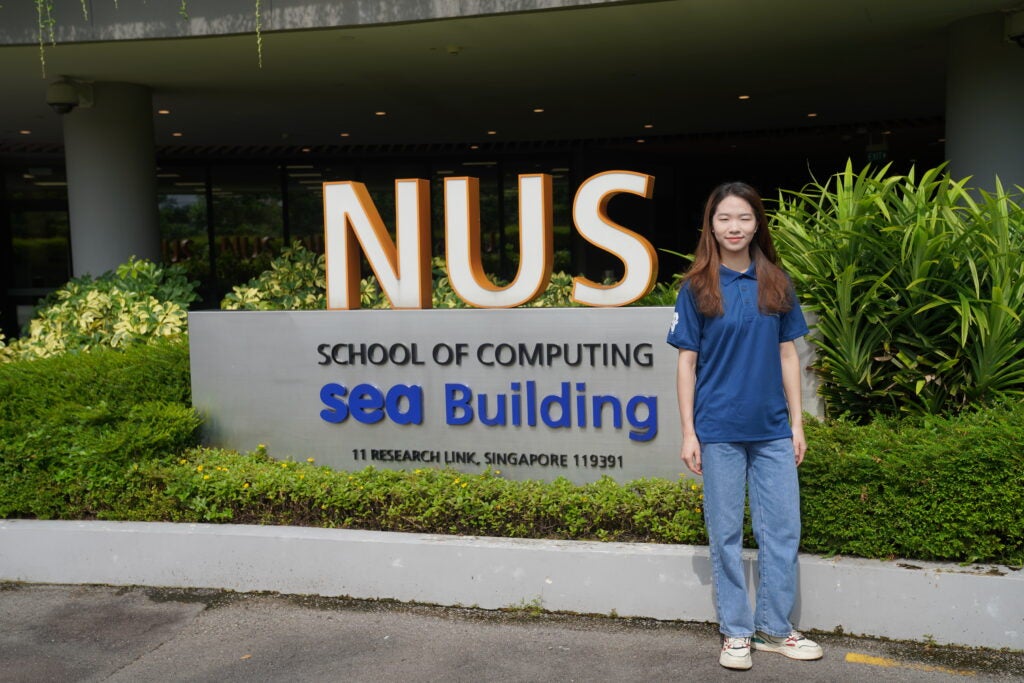

How Sun Jiaen Is Improving Speech Recognition at AAAI-26

Sun Jiaen thinks about artificial intelligence in practical terms: Who does it work for, and who does it leave out?

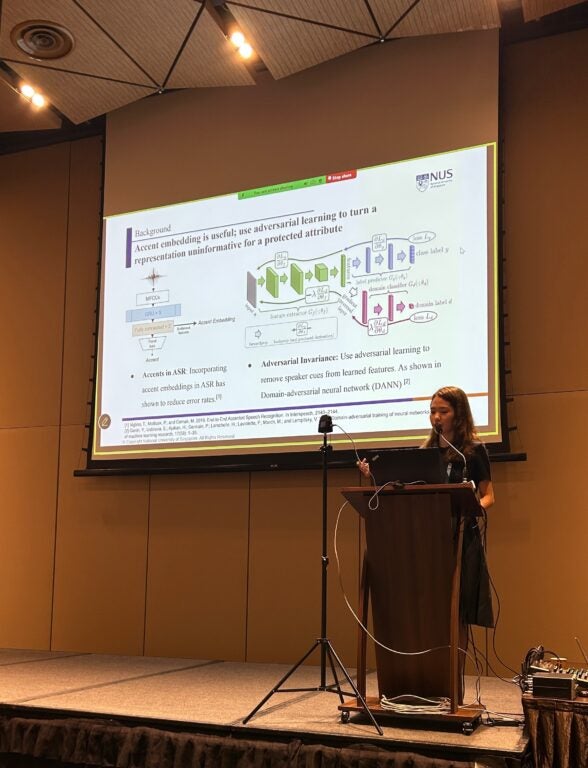

A Year 3 Computer Science undergraduate at the National University of Singapore (NUS), Jiaen has had her research proposal accepted to the AAAI-26 Undergraduate Consortium, with full funding support from the conference. At the consortium, she presented her work on De-Speakerising Accented Automatic Speech Recognition (ASR), which examines how speech systems often struggle with accents and unfamiliar speakers, leading to uneven performance across users.

Finding Her Way Into Computing

Jiaen did not enter Computing because of a single turning point. Instead, it was a gradual realisation of how useful the discipline could be.

“I was first drawn to NUS for its reputation and faculty,” she shares. “But once I started building things and testing them with real users, I saw how Computing could be applied to everyday problems.”

Working with tools like Python, R, and React helped her see code as a way to express ideas and understand behaviour, not just as theory. That sense of usefulness confirmed her decision to study Computer Science.

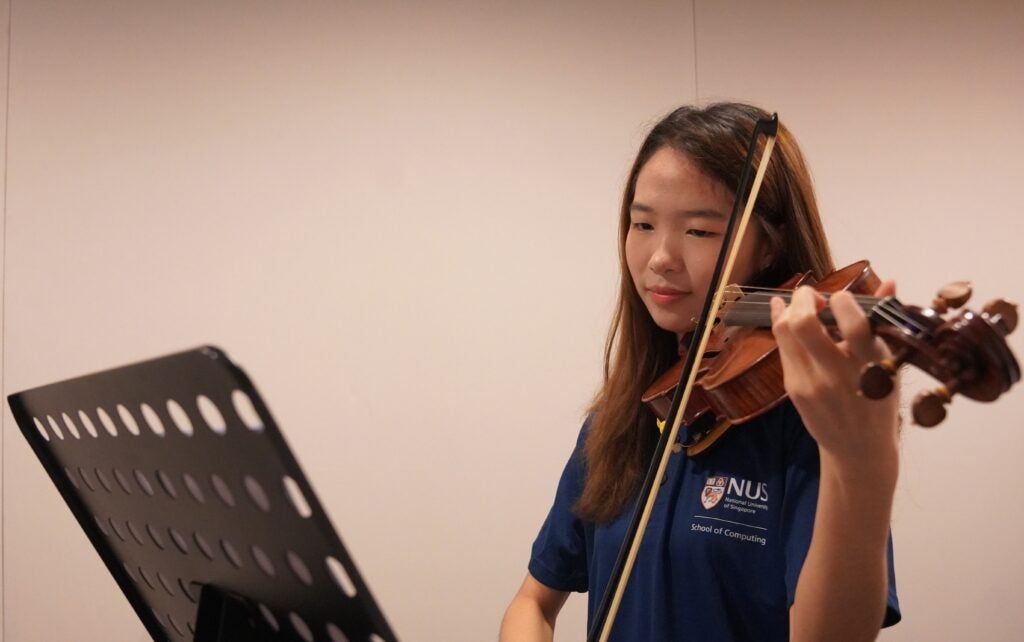

Outside of school, Jiaen is a trained violinist and enjoys music, cooking, and animation. Music helps her reset after long days of work and has shaped how she approaches problem-solving. It also led her to the Sound and Music Computing Lab, where she now researches sound, language, and human–computer interaction.

Addressing Bias in Speech Systems

Jiaen’s research gained international attention in 2025 when it was accepted for the AAAI-26 Undergraduate Consortium.

Her project examines speaker entanglement in accented ASR systems, a phenomenon where models become overly tied to a speaker’s vocal identity rather than the words being spoken. When a new speaker with the same accent appears, system performance can drop sharply.

The issue first surfaced during her Undergraduate Research Opportunities Programme (UROP) work on the Singing and Listening to Improve Our Natural Speaking (SLIONS) project. Supervised by Associate Professor Wang Ye, Jiaen was drawn to SLIONS because it allowed her to explore model development, software systems, and human–computer interaction (HCI) in a single project. While her first semester involved broad exploration, it helped her recognise where her strengths lay: evaluation and HCI – a focus she carried into her second semester.

“We were testing a Mandarin speech model, and for certain speakers it suddenly produced text in other languages,” she recalls. “Nothing had changed except the speaker.”

Preparing her AAAI proposal was far from straightforward. The tight timeline meant developing a clear research direction in the middle of midterm season. Instead of rushing to a solution, Jiaen adopted a measurement-first approach. She spent time reviewing existing literature, consulting lab seniors, and refining a plan to diagnose the problem properly before attempting to fix it.

That process shaped her proposal, which introduces clearer ways to measure speaker entanglement and reduce it during training, allowing speech systems to focus on language rather than vocal identity.

Being accepted into AAAI was encouraging beyond the research itself.

“Meeting other students and working with my mentor made me feel part of a larger research community,” she says.

Research with Real Impact

Jiaen’s work is closely tied to real-world needs. Through SLIONS, an NUS-led project developed in collaboration with NUS Medicine, she helped build tools to support communication in healthcare settings – particularly for elderly patients who speak primarily Mandarin.

Her role spanned both technical and human aspects of research, from system development to running user studies and trials.

“The first time I ran a study on my own was challenging,” she shares. “But it showed me that I could manage both people and technical work.”

As SLIONS expands to support stroke survivors with aphasia, Jiaen sees the project moving beyond research into something that can make a lasting difference in people’s lives.

Learning Through Leadership

Alongside research, Jiaen is active in student leadership. As Director of Academic Liaison in the NUS Students’ Computing Club, she works with industry partners such as Singtel, NCS, and A*STAR to organise programmes for students.

These experiences have shaped how she thinks about collaboration and impact. “Impact does not come from working alone,” she reflects. “It comes from planning well, trusting others, and doing your part.”

She also credits guidance from Associate Professors Wang Ye and Bimlesh Wadhwa, as well as seniors at the Sound and Music Computing Lab, for helping her grow in confidence and clarity.

What Comes Next

Jiaen will soon head to Canada for an overseas exchange – her first experience studying in North America.

Looking ahead, she hopes to work in roles that bridge research and industry, building technology that is practical, fair, and genuinely useful to the people who rely on it.

In improving how machines listen, Jiaen is focused on a simple goal: making sure technology understands people as they are.