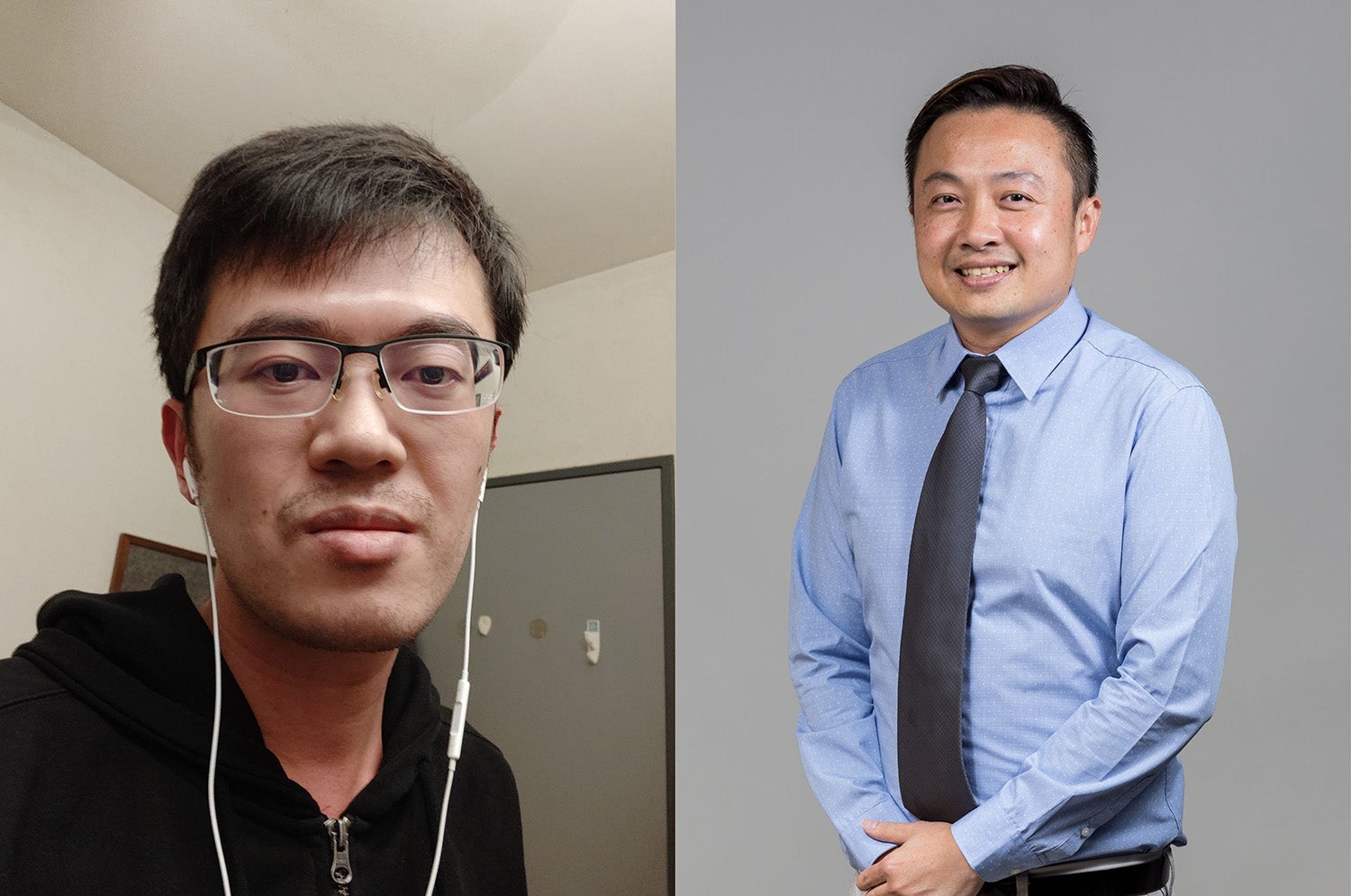

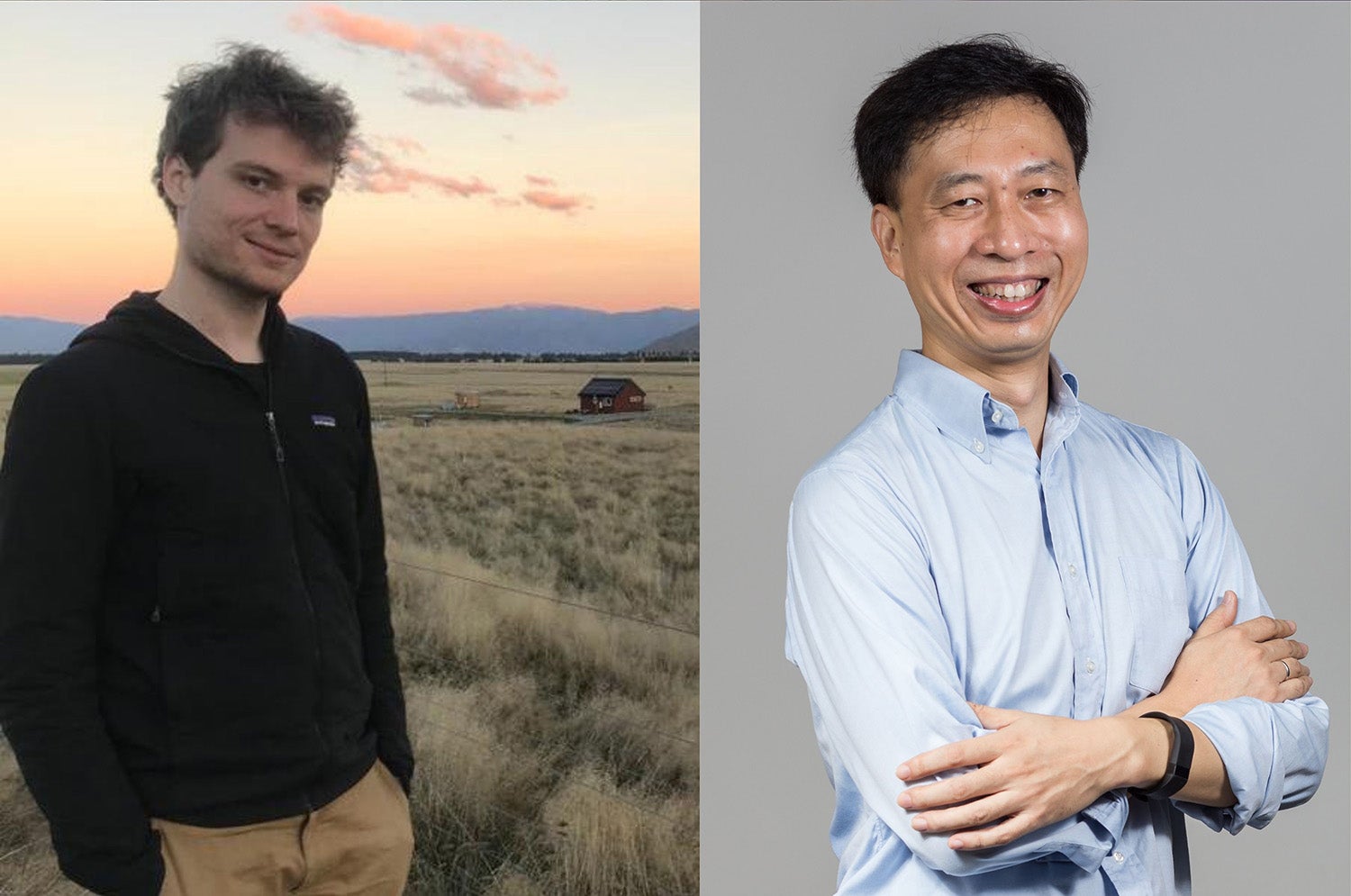

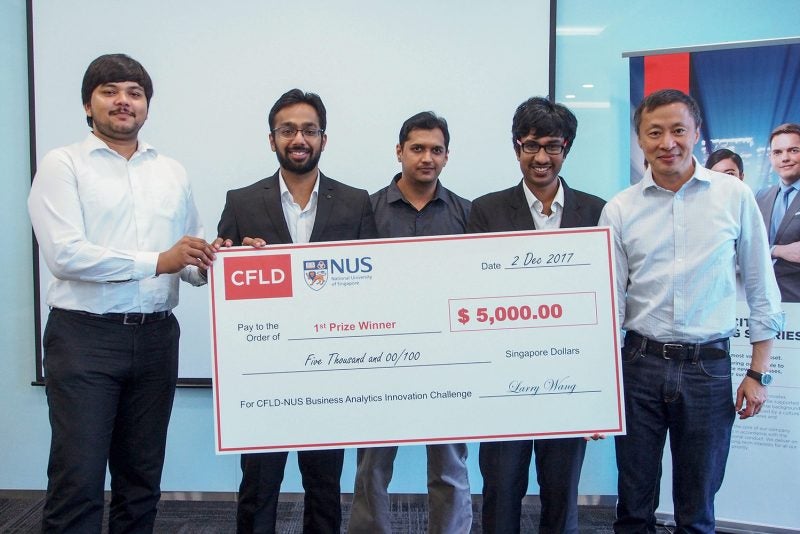

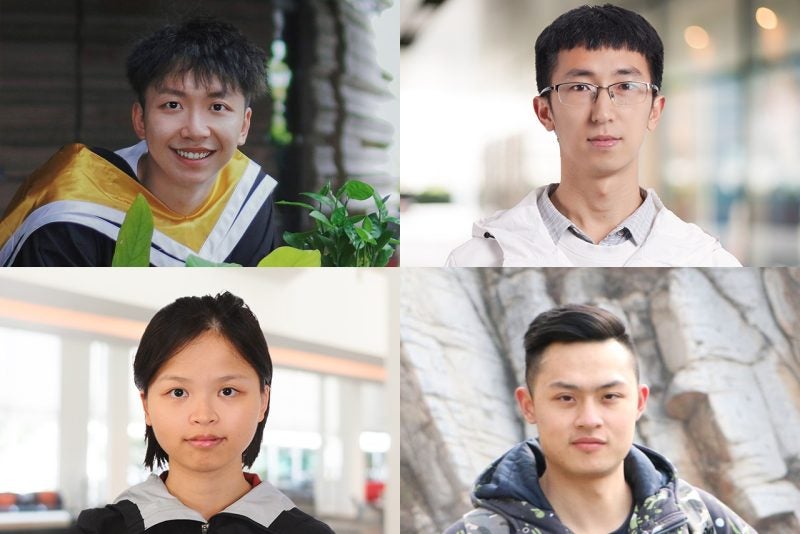

27 August 2021 – NUS Computing Computer Science (CS) PhD graduate Dr Dai Zhongxiang, CS PhD students Abdul Fatir Ansari and Samson Tan, as well as NUS Graduate School (NUSGS) Integrative Sciences and Engineering PhD student Peter Karkus, were awarded the Dean’s Graduate Research Excellence Award in August this year

The award is given to senior PhD students who have made significant research achievements during their PhD study. Graduate students may only receive the award, which includes a S$1,000 cash prize, once during their PhD candidature.

Improving training for deep generative models

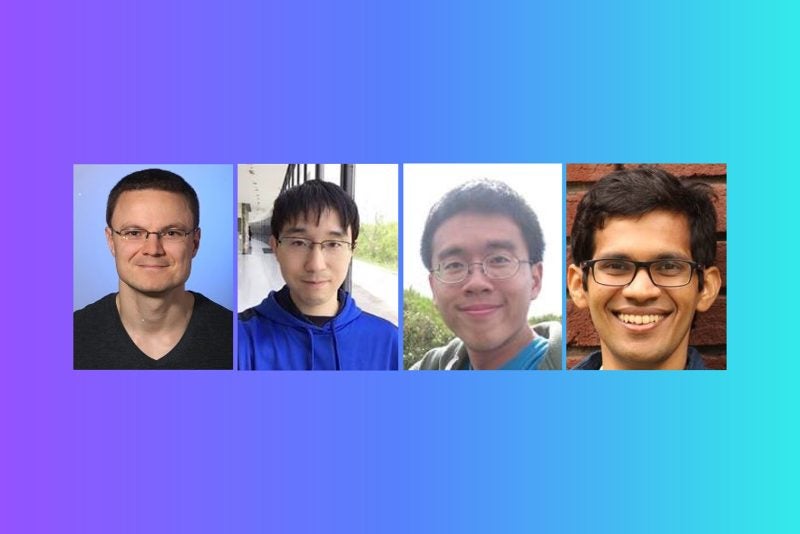

Under the supervision of Assistant Professor Harold Soh, Computer Science PhD student Abdul Fatir Ansari worked on improving training for deep generative models.

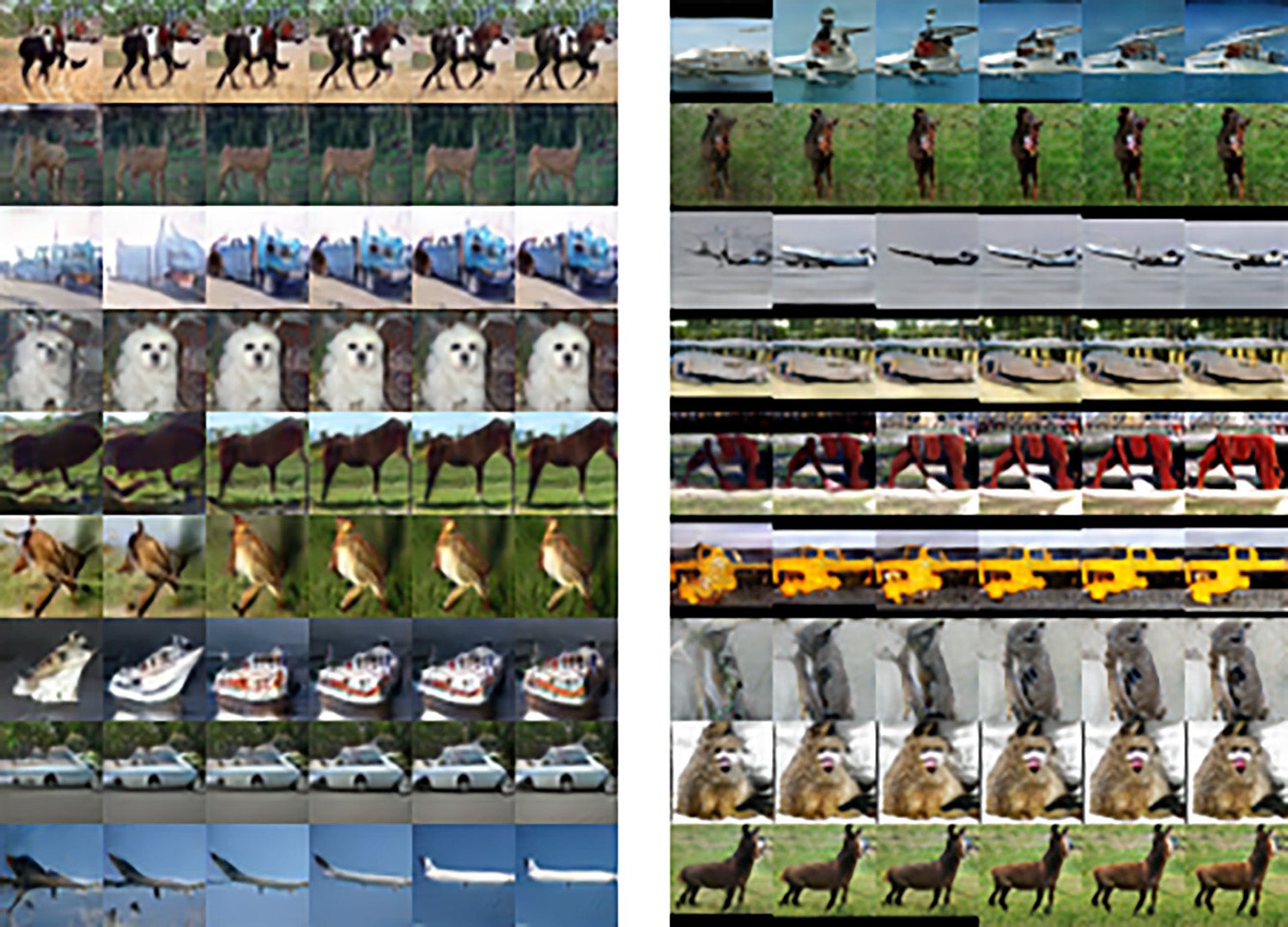

Deep generative models have received widespread attention in recent years and researchers have had success using them for applications such as generating high-fidelity images, image to image translation, and text to image translation. These models learn how the data is generated, but Ansari explained that it is challenging for the model to learn just by referencing observed data, as there may be various hidden variables, with complex dependencies between them, that impact the observed data.

“To this end, we have made multiple contributions to the area of deep generative models. In one of our papers, we proposed to train generative models by minimising the distance between characteristic functions of the real data and generated data distributions. This comes with attractive theoretical properties and practical training benefits,” said Ansari.

“In another recent work, we proposed a method called Discriminator Gradient flow (DGflow) to improve samples from pretrained generative models by employing ideas from optimal transport literature. The method improves a sample from the generative model by refining it along the gradient flow of entropy-regularized f-divergences between the generator distribution and the real data distribution,” He added.

Ansari believes that the general methods he proposed in his research, and the model’s multiple potential applications, has made his work stand out.

“During these four years, the aspect that was particularly challenging was catching up with the requisite background in computer science and mathematics – I had no formal background in computer science, as I majored in civil engineering. At the same time, learning about new techniques and then applying them to my research area has been the aspect of research that I have enjoyed the most,” Ansari added. “I was elated but mostly surprised because I did not expect to get this award. I am incredibly grateful to Harold for all the support during these years, and for being the ideal PhD advisor.”

Making Bayesian optimisation more efficient

Machine learning is a branch of artificial intelligence that enables a computer system to identify patterns in data and help make decisions, or predict outcomes. Its use in numerous real-world applications have been tremendously successful. For example, it is used in medical diagnosis, fraud detection or spam filtering.

However, the performance of machine learning models critically depends on a number of ‘hyperparameters’, a parameter which is used to control the model’s learning.

These hyperparameters are usually tuned via trial and error which requires significant human effort, but with Bayesian optimisation, these hyperparameters can be automatically tuned more efficiently, saving time and effort.

Beyond its typical use in hyperparameter tuning, Bayesian optimisation can also be used in a wide range of interesting applications. For example, it has even been used to find the best ingredients for making cookies.

Computer Science PhD graduate Dr Dai Zhongxiang’s research involved making Bayesian optimisation more efficient by exploiting additional information.

He likened the scenario to competitive chefs trying to figure out the best recipe for baking cookies, yet who do not wish to share their ingredients with each other.

“We essentially figured out a way to help them collaboratively optimise their ingredients without requiring the chefs to disclose their secret recipe. In other words, every chef can now use additional information from the other chefs to find the best ingredients quickly, without sharing their own cookie-making ingredients with each other,” Dai explained.

Dr Dai, who was supervised by NUS Computing Associate Professor Bryan Low Kian Hsiang and Professor Patrick Jaillet from the Massachusetts Institute of Technology, had his research accepted by various top machine learning conferences such as the International Conference on Machine Learning (ICML) and the Conference on Neural Information Processing Systems (NeurIPS).

“I always try to make sure that my work is both theoretically grounded and practically useful. This is usually not easy for machine learning research, and hence can be valuable if done properly,” He added. “My advisors, Prof Bryan and Prof Patrick, have played the biggest roles in helping me get on the right track to do machine learning research. This certainly could not have been possible without their help.”

Improving real-world reliability of Natural Language Processing systems

For his PhD research, Computer Science PhD student Samson Tan set out to improve the real-world reliability of Natural Language Processing (NLP) systems.

NLP is a subfield of artificial intelligence where researchers study how to build AI systems that can understand human language. These systems go on to make decisions based on their understanding of the human language, which could be spoken or written. One example of an NLP system is a chatbot on a website that helps people with their queries.

However, in a research setting, NLP models are often evaluated on benchmark datasets that may not fully reflect the challenges of the real world. For instance, depending on where a person comes from, not everyone may speak or write English in the same way.

“The reality is that there are many valid ways to speak a language depending on a person’s age, geographical location, ethnicity, socioeconomic class and the context. Additionally, people – even native speakers – make mistakes in spelling, grammar, and so on. Failing to account for language variation will result in products that don’t live up to the hype, or even discriminate against people due to the way they speak or write,” explained Tan.

Tan’s research thus focuses on making NLP systems more reliable in the presence of language variation by using adversarial machine learning techniques.

He proposed using adversarial attacks to test the systems’ ability to handle real-world phenomena such as inflectional variation, where endings of words are changed (e.g. ‘go’ versus ‘going’), and code-mixing, where multiple languages are used (e.g. ‘I go and jalan-jalan’, which means to go out for a walk in Malay).

“These are common occurrences in English varieties like Singlish, but existing NLP systems are ill-equipped to deal with them. Since much of the focus on bias and fairness in NLP has been on gender and racial attributes, I used these findings to highlight how these gaps may potentially lead to linguistic discrimination, where a social group is discriminated against by NLP systems due to their way of speaking or writing,” added Tan.

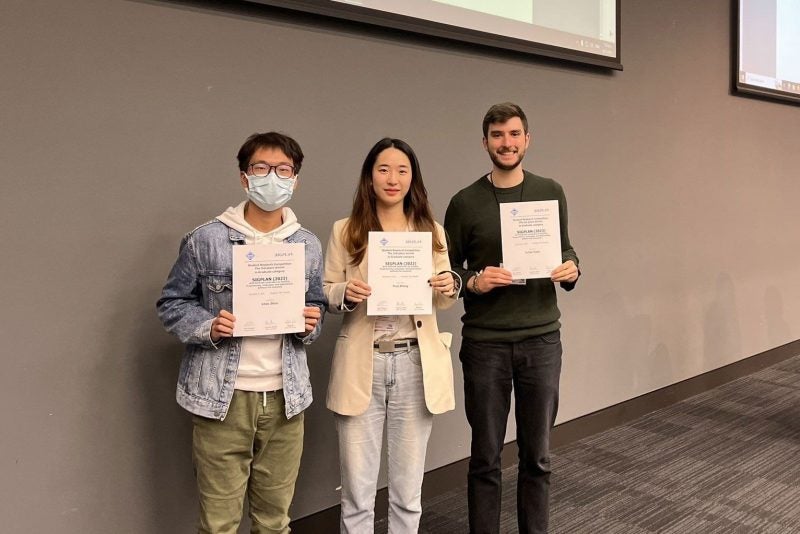

Tan’s work was accepted at prestigious conferences such as the Association for Computational Linguistics (ACL) in 2020, and the North American Chapter of the Association for Computational Linguistics (NAACL) this year.

Tan also proposed methods, some based on these adversarial attacks, to improve the performance of NLP models on these linguistic phenomena.

“In my latest paper presented at the ACL this year, I generalised the ideas from these past papers into a framework for estimating the reliability of NLP systems under any form of real-world variation. The core idea is to simulate the variation present in the expected deployment environment by mixing and matching perturbation distributions that model specific types of real-world phenomena, like the examples mentioned earlier,” He explained.

“We can then use adversarial techniques to measure the model’s average- and worst-case performance in the presence of each phenomenon. Based on this principle of dimension-specific evaluation, I proposed a framework for implementing a requirement specification and testing process in development teams. Being able to rigorously test systems helps engineers catch issues before deployment and opens the door to enforceable standards and regulations.”

Tan is supervised by NUS Computing Associate Professor Kan Min-Yen and Assistant Professor Shafiq Joty from Nanyang Technological University (NTU). He is completing his PhD as part of the Economic Development Board’s (EDB) Industrial Postgraduate Programme, and is also part of the Salesforce Research team.

Tan, who will be graduating next year, was inspired to delve into language variation partly due to Singlish, and the way English is used in Singapore.

“I recall auditing a class on language variation at the start of my PhD and it resonated with my experience living in Singapore, where there is significant language variation in everyday life. To my surprise, this phenomenon was not well studied in NLP especially for English, which was doubly surprising with the number of varieties it has,” Tan said. “Additionally, as mentioned, much of the existing focus on bias and fairness in NLP has been on gender and racial attributes, but not linguistic bias, even though it is unique to NLP and not found in other fields of AI.”

Tan believes that the real-world implications brought on by his thesis made his work stand out from other research.

“Additionally, I tend to favour interdisciplinary work and collaborations, which often lends a unique perspective to the final work. For example, for my paper which was accepted at the ACL conference this year, I collaborated with a linguist, AI ethicist, and professor of public policy from the Lee Kuan Yew School of Public Policy (LKYSPP). One of our reviewers, who gave us the maximum score and nominated it for an award, particularly liked that we discussed not only the technical and linguistic issues, but also the policy implications of our proposed framework,” He added.

Apart from his PhD research, Tan is also co-chair of a working group on tokenisation, which is part of a larger, open-source international project called BigScience. BigScience currently has more than 500 researchers from all over the world coming together to train a large language model with 200B+ parameters.

Integrating classic robotics with deep learning – the Differentiable Algorithm Network

For his PhD research, Integrative Sciences and Engineering PhD student Peter Karkus developed a novel robot learning architecture called the Differentiable Algorithm Network (DAN), that helps robots work towards gaining near human-level intelligence.

“The key feature of the DAN is that it encodes existing robotics knowledge in deep neural networks, which enables it to learn complex decision-making tasks even in previously unknown environments. Through my PhD, I have worked on various applications of this generic architecture and introduced new methods to a range of important robotic tasks, including navigation with only an onboard camera, localisation on a map, simultaneous localisation and mapping, and planning under partial observability. Some of these components have been also integrated in a real-world robot system – if you have been on campus recently, you might have seen Spot the Boston Dynamics robot dog, who learned to navigate COM1,” said Karkus.

Karkus’s research project received a fair bit of interest in the robotics and AI communities, as his work unifies two competing general approaches to robot system design: the classic model-based planning approach, and the modern data-driven learning approach.

“The choice between the two approaches for robotics has been a topic of great interest and the source of many debates,” said Karkus.

“Since the early days of robotics, the dominant approach for building robots have been model-based. In this approach, the robot first needs a model of how the world works, and then it uses an algorithm to reason with the model, choosing what action to take based on the predicted future. The issue is that the world is amazingly complex, thus our models can never be perfectly accurate, and our algorithms need to make approximations to complete in finite time. To build a robot, engineers have to simplify the tasks based on their assumptions about the task, which limits what the robot can achieve, and often makes the overall system brittle. A good example for this brittleness is in the videos of the 2015 DARPA Robotics Challenge, which features many entertaining robot failures,” explained Karkus.

On the other hand, the data-driven, model-free approach to robot systems design enables the robot to make decisions without explicit models or algorithms. Instead, this approach uses deep learning, where neural networks are trained with large amounts of data.

Deep learning has been transformative in computer vision and natural language processing, and it has also successful in various robotic domains. However, Karkus explained that robots can only learn simple tasks through deep learning, like pushing an object on the table. If the task becomes more complex (e.g. getting a robot to cook a three-course dinner) the amount of data required for deep learning becomes too large.

“Our DAN architecture integrates the model-based and model-free approaches by encoding model-based algorithmic structure into deep neural networks, and training them end-to-end with data. The encoded structure allows the robot to learn complex tasks even from limited training data, and end-to-end training provides robustness against imperfect modelling assumptions,” Karkus said.

He added: “Our unified DAN architecture provides a plethora of new opportunities for robotics researchers and practitioners to combine existing robotics knowledge with deep learning techniques, and I am hopeful that this work will help to develop more capable and useful robots for the benefit of societies in the future.”

Karkus is supervised by NUS Computing Provost’s Chair Professor David Hsu.

Said Karkus on his win: “I feel honoured and humbled to receive this award, and I am grateful for all the amazing people I have been fortunate to work with on this project. Beyond my supervisor, Prof David, a great number of people have contributed to my research project from our group at NUS, including Prof Lee Wee Sun, Xiao Ma and Shaojun Cai.”

Karkus, who is a student of the NUSGS, also had the chance to work with professors from the Massachusetts Institute of Technology (MIT) under NUSGS’s 2+2 program.

The program features partnerships with world-leading overseas universities and research institutes. Students in this program have the opportunity to engage in globally progressive research, both within Singapore and at any of the program’s partner institutions. Students can choose to spend nine months to two years in any reputable lab in any country.

“Thanks to the 2+2 program, I was also able to spend nine months at MIT working with Prof Leslie Kaelbling and Prof Tomás Lozano-Pérez, who have been fundamental to the development of this work. I have also benefited greatly from research internships, working with Rico Jonschkowski and Anelia Angelova at Google, and Theophane Weber and Nicolas Heess at DeepMind,” he added.

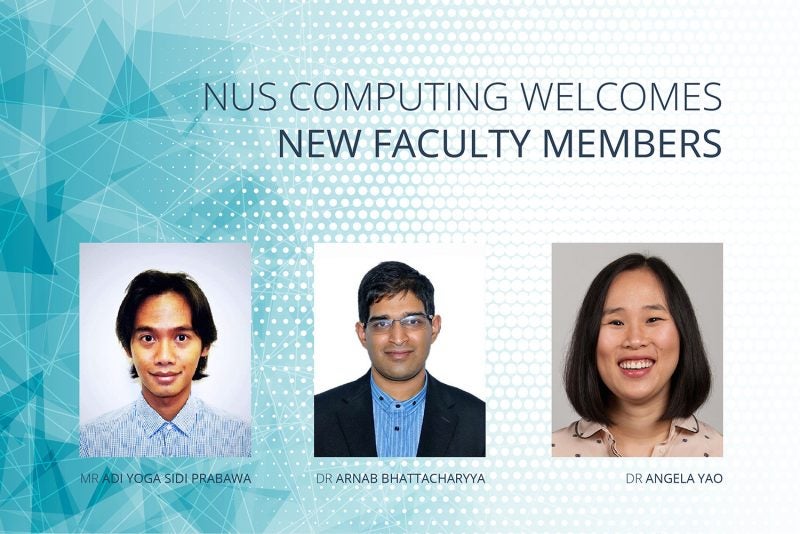

Assistant Professor Yair Zick: Ethics in Artificial Intelligence