Our brain can easily estimate the 3D position and the orientation of the objects we see. This task, however, is non-trivial for computers and robots. Computer scientists have been working on the problem of recovering the 3D position and orientation of objects in data captured from image sensors for years. This problem, formulated as the ‘pose estimation’ problem, is important because recovering the position and orientation of objects in a scene is often a necessary step to understanding the scene.

Charades for computers?

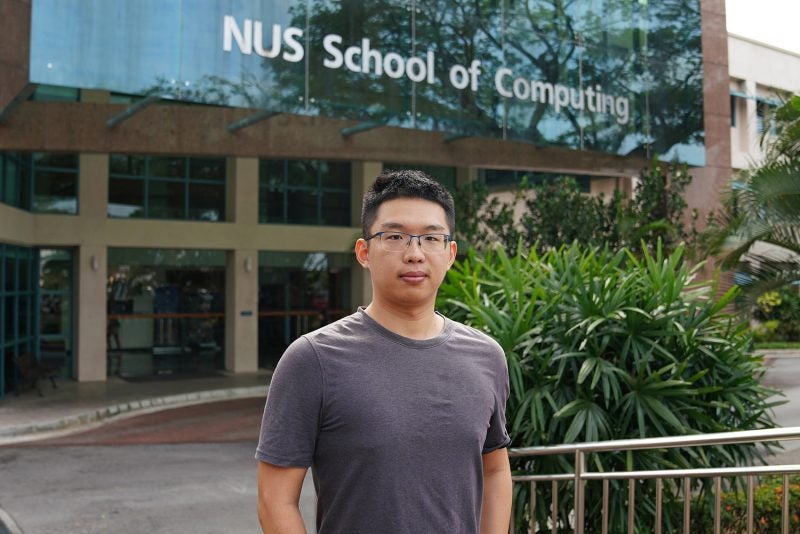

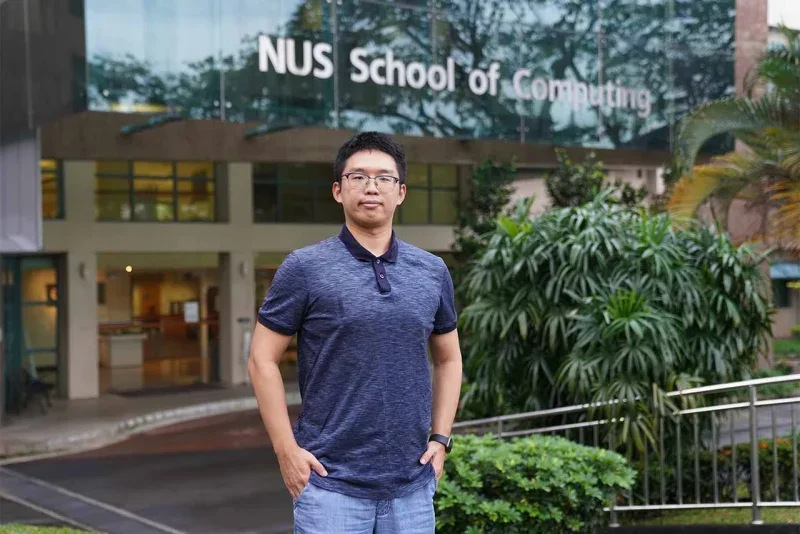

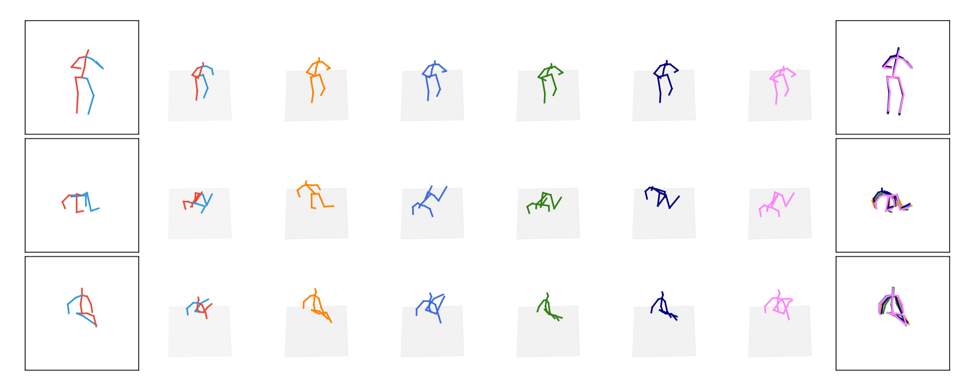

3D human pose estimation from a 2D monocular image is an ill-posed and inverse problem with multiple solutions. This is due to the loss of depth information and possible occluded/missing joints when the 3D human is projected on a 2D image without depth information. Assistant Professor Lee Gim Hee and his student, Li Chen, investigate the problem of recovering multiple possible 3D human poses from a 2D monocular image. They take a “two-stage” approach that first detect the 2D joint positions of the human body, followed by estimating the 3D depth of the respective 2D joints. Dr. Lee and Li Chen use a neural network called mixture density network, or MDN, to estimate the parameters of possible 3D human poses accurately, even in challenging situations where some of the limb joints are occluded or missing. This innovation leads to state-of-the-art performance for the 3D human pose estimation problem from a 2D image.

The figure above shows the input and output of the algorithm. The first column is the input 2D joints. The second column is the ground truth 3D pose, and the third to seventh columns are the hypotheses generated by the method. The last column is the 2D reprojections of all five hypotheses. The corresponding 2D projection and 3D pose are drawn in the same color.

Talk to the hand

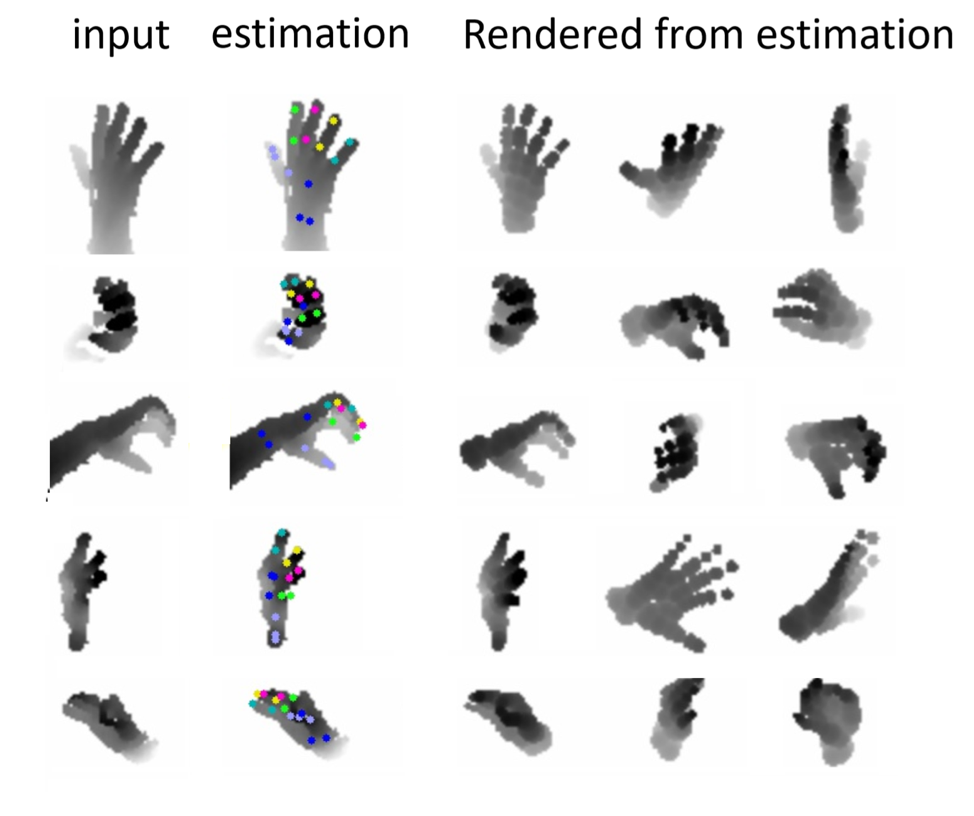

Assistant Professor Angela Yao recently tackled the problem of pose estimation for human hands from depth images, which has a range of applications, from helping machines understand body language to enabling a gesture-based human-computer interface. Along with her collaborators Chengde Wen, Thomas Probst, and Luc Van Gool from ETH Zurich, Dr. Yao developed a method for 3D hand pose estimation using a deep neural network. A typical deep neural network requires a huge amount of data for training (which is hard to obtain for 3D hand poses). Dr. Yao’s approach overcomes the need for data by synthesizing the training data from multiple renderings of a 3D hand model at random poses. The key breakthrough of her approach is that the proposed method self-optimizes any discrepancy between the 3D hand model used for training and the real human hand that the neural network is asked to recognize (in terms of shape, appearance, etc). Their results show that they can achieve an accuracy comparable to those methods that use many labelled images of hand poses for training.

The figure above shows the input depth images in the first column and the estimated hand pose in the second column. Columns 3-5 show the rendering of the estimated hand pose from different angles.

Learn like a kid

A similar strive towards reducing the amount of data needed for training a deep learning model manifested in the problem of ‘few-shot learning.’ A machine learning model often requires a large number of training samples for good performance. In contrast, humans can learn new concepts and master new skills faster and more efficiently from a small data set. For example, kids can easily tell dogs and cats apart after seeing them only a few times. So, the question is “Can we design a machine learning model to have the same ability to learn fast and efficiently?” Professor Chua Tat Seng and his collaborators, Dr. Sun Qianru, Mr. Yaoyao Liu, and Professor Bernt Schiele developed a machine learning technique called meta-transfer learning. This technique allows neural networks that have been trained on a large-scale datasets to adapt to few-shot learning, i.e. learning with a very small amount of training data. To enable more efficient converging, the researchers use a strategy in which they choose to train the neural networks to solve tasks that are considered ‘difficult’, i.e., tasks with high failure rates in previous trials. This strategy forces the neural network to “grow faster and stronger through hardship”.

For instance, suppose we want to train neural networks to identify if a given picture contains a Tasmanian Tiger. Only a few pictures of this species exist, thus only a few training samples can be produced. Meta-transfer learning allows us to train a neural network to identify other animals first using hard examples (e.g, distinguishing between dogs and wolves), then learn from the few existing photos of Tasmanian Tigers to identify a Tasmanian Tiger from a given picture.

This technique is general and can be applied to other situations where large amounts of data are not easily available.

Computer Vision at NUS Computing

The work done by the faculty members above were among the research papers presented at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), held from June 16th – June 20th in Long Beach USA. The full list of research papers from the NUS Department of Computer Science presented at CVPR 2019 are:

- Meta-Transfer Learning for Few-Shot Learning

Qianru Sun (National Univ. of Singapore, MPI for Informatics)*; Yaoyao Liu (Tianjin University); Tat-Seng Chua (National University of Singapore); Bernt Schiele (MPI for Informatics) - Learning to Detect Human-Object Interactions with Knowledge

Bingjie Xu (National University of Singapore)*; Wong Yongkang (National University of Singapore); Junnan Li (National University of Singapore); Qi Zhao (University of Minnesota); Mohan Kankanhalli (National University of Singapore) - Emotion-Aware Human Attention Prediction

Macario II O Cordel (De La Salle University)*; Shaojing Fan (National University of Singapore); Zhiqi Shen (National University of Singapore); Mohan Kankanhalli (National University of Singapore) - Learning to Learn from Noisy Labeled Data

Junnan Li (National University of Singapore)*; Wong Yongkang (National University of Singapore); Qi Zhao (University of Minnesota); Mohan Kankanhalli (National University of Singapore) - Propagation Mechanism for Deep and Wide Neural Networks

Dejiang Xu (National University of Singapore); Mong Li Lee (National University of Singapore)*; Wynne Hsu (National University of Singapore) - Robust Point Cloud Reconstruction of Large-Scale Outdoor Scenes

Ziquan Lan (NUS)*; Zi Jian Yew (National University of Singapore); Gim Hee Lee (National University of Singapore) - Disentangling Latent Hands for Image Synthesis and Pose Estimation

Linlin Yang ( University of Bonn); Angela Yao (National University of Singapore)* - Generating Multiple Hypotheses for 3D Human Pose Estimation with Mixture Density Network

Chen Li (National University of Singapore)*; Gim Hee Lee (National University of Singapore) - Towards Natural and Accurate Future Motion Prediction of Humans and Animals

Zhenguang Liu (Zhejiang Gongshang University)*; Shuang Wu (Nanyang Technological University); Shuyuan Jin (NUS); Qi Liu (National University of Singapore); Shijian Lu (Nanyang Technological University); Roger Zimmermann (NUS); Li Cheng (University of Alberta) - Scale-Aware Multi-Level Guidance for Interactive Instance Segmentation

Soumajit Majumder (University of Bonn)*; Angela Yao (National University of Singapore) - Self-supervised 3D hand pose estimation through training by fitting

Chengde Wan (ETHZ)*; Thomas Probst (ETH Zurich); Luc Van Gool (ETH Zurich); Angela Yao (National University of Singapore)